Accurate evaluation of a golfer’s handicap is central to equitable competition, performance benchmarking, and targeted skill development. Handicaps synthesize diverse influences (intrinsic player ability, course difficulty, environmental conditions, and stochastic variability in shot outcomes) into a single index intended to represent expected performance. Despite widespread adoption of standardized systems, persistent challenges remain in ensuring that handicap values faithfully reflect current ability across different competitive contexts and over time. These challenges motivate a rigorous analytical framework capable of quantifying sources of variance, correcting systematic biases, and supporting robust inference from observational scoring data.

This article develops a principled, data-driven framework for handicap evaluation that integrates course rating and slope metrics with probabilistic models of player performance. The framework treats score outcomes as realizations of hierarchical stochastic processes, permitting explicit decomposition of within-player variability (consistency, fatigue, form fluctuations) and between-player differences (latent skill traits). Incorporating contextual covariates, such as course characteristics, weather, tee placement, and tournament format, enables adjustments that reduce confounding and improve comparability across rounds and venues. Emphasis is placed on model identifiability, treatment of censored or sparse score histories, and estimation strategies that balance adaptivity to recent form with stability derived from longer-term performance.

Evaluation and validation are addressed through a suite of metrics tailored to handicapping objectives: predictive accuracy (forecasting future scores), fairness (expected outcome parity in matched competitions), and responsiveness (timeliness of handicap updates following true changes in ability). The framework is operationalized with recommended computational approaches, including Bayesian hierarchical models, shrinkage estimators, and bootstrapped calibration procedures, along with diagnostic tools for detecting systematic drift or manipulation. Practical implications for players, coaches, and handicap administrators are discussed, highlighting how improved handicap estimates can inform course selection, match pairing, and individualized practice prioritization.

By formalizing the statistical foundations of handicap calculation and proposing transparent, testable methods for adjustment and validation, the proposed framework aims to enhance the credibility and utility of handicaps as measures of golfing ability. Subsequent sections detail the mathematical formulation, data requirements, estimation algorithms, empirical illustrations using representative scoring datasets, and guidelines for implementation within existing handicap systems.

Conceptual Foundations and Evaluation Objectives for a Robust Handicap Framework

A sound methodology rests on clearly articulated theoretical premises that reconcile individual performance variation with course-specific difficulty. Core principles include statistical fairness (the handicap should be an unbiased estimator of play potential), representativeness (scores used must reflect the golfer’s typical ability), and temporal stability (the system must respond to genuine change in form while resisting transient noise). These tenets guide model selection, dictate permissible data transformations, and set the boundary conditions for acceptable correction factors such as weather or temporary course conditions.

Evaluation objectives translate theory into measurable goals. The primary aims are:

- Predictive validity – minimize error between expected and observed performance;

- Inter-course comparability – ensure a handicap equates to similar expected scores across different venues;

- Equity and accessibility – maintain simplicity so players can understand and trust outputs;

- Robustness to manipulation – reduce incentives for strategic score submission.

Each objective carries trade-offs (e.g., complexity vs. clarity) that must be explicitly balanced in the system specification.

Operationalizing the framework requires a concise set of metrics and validated data sources. Typical indicators include score variance, recent-form weighting, course rating/slope adjustments, and outlier frequency. The following table maps selected metrics to their evaluative role, illustrating how quantitative diagnostics inform governance decisions:

| Metric | Purpose | Target |
|---|---|---|
| Score SD | Assess within-player variability | Lower variance → stable handicap |
| Cross-course bias | Measure comparability | <0.5 strokes mean bias |
| Recent-form weight | Responsiveness to improvement/decline | 20-40% on last 8 rounds |
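As a minimal sketch (Python, standard library only), the three diagnostics in the table can be computed from a player's scoring history. The function names, the use of model residuals (observed minus predicted strokes) for cross-course bias, and the exponential half-life parameterization of recent-form weight are illustrative assumptions, not part of any official system.

```python
from statistics import mean, stdev


def score_sd(differentials):
    """Within-player variability: sample standard deviation of differentials."""
    return stdev(differentials)


def cross_course_bias(residuals_by_course):
    """Mean residual (observed minus model-predicted strokes) at each course.

    Comparability is good when every value stays near zero; the table's
    target is an absolute mean bias below 0.5 strokes.
    """
    return {course: mean(res) for course, res in residuals_by_course.items()}


def recent_form_weight(n_rounds, half_life, window=8):
    """Share of total exponential-decay weight carried by the last `window` rounds.

    Age 0 is the most recent round; weight = 0.5 ** (age / half_life).
    """
    weights = [0.5 ** (age / half_life) for age in range(n_rounds)]
    return sum(weights[:window]) / sum(weights)
```

Under uniform weighting, 8 of 20 rounds already carry 40% of the weight, and recency decay only increases that share, so a 20-40% target implies either a longer history window or a blend with a long-run component. With 40 rounds and a 20-round half-life, the share is roughly 32%.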

Governance and continuous validation close the design loop. Regular calibration cycles (e.g., quarterly), pre-specified outlier-handling rules, and public reporting of performance metrics create accountability and enable iterative refinement. Methodologically, the framework should support both frequentist and Bayesian diagnostics (confidence intervals for handicaps, posterior predictive checks) and define explicit thresholds for manual review. Embedding these processes preserves the system’s integrity, aligns operational practice with stated objectives, and ensures that revisions are evidence-driven rather than ad hoc.

Data Requirements and Preprocessing Protocols for Accurate Performance Measurement

Robust measurement of player performance begins with a well-specified minimum dataset and explicit provenance. Essential records must capture both the round-level context and the granular scoring elements: **player identifier, course identifier, tee box, date/time, hole-by-hole scores, gross score, course rating, slope rating, and weather/conditions metadata**. Supporting auxiliary data (club/equipment changes, caddie notes, and GPS-derived shot traces) improves signal resolution for advanced models but should be flagged as optional. All ingested data must be accompanied by a documented source, collection method, and a Data Management Plan (DMP) that specifies format constraints, access rights, and retention policy in the spirit of open data best practices.

Preprocessing must enforce deterministic, auditable transformations so that handicap differentials and indices are reproducible. Core steps include: **validation of range and type, normalization of tee and course identifiers, adjustment to a common rating/slope baseline, and calculation of round differentials** using established formulas. Automate conversion rules for deprecated course ratings or multi-tee configurations and maintain a change log for any manual corrections. When computing performance summaries, apply time-aware weighting (e.g., recency windows) and preserve raw inputs so that downstream recalculation under alternative algorithms remains possible.
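A minimal sketch of the deterministic validation and differential steps follows, assuming a simple immutable record type. The field names, the tee alias table, and the course-rating plausibility window are hypothetical; the 55-155 bounds are the published range of Slope Ratings. The differential uses the WHS-style formula (AdjustedGrossScore − CourseRating) × 113 / Slope; the WHS also subtracts a Playing Conditions Calculation (PCC) adjustment, which this sketch omits.

```python
from dataclasses import dataclass, replace

# Hypothetical alias table mapping legacy tee labels to canonical identifiers.
TEE_ALIASES = {"wht": "WHITE", "white": "WHITE", "blu": "BLUE", "blue": "BLUE"}


@dataclass(frozen=True)
class RoundRecord:
    player_id: str
    course_id: str
    tee: str
    adjusted_gross: int
    course_rating: float
    slope: int


def validate_and_normalize(raw: RoundRecord) -> RoundRecord:
    """Range/type validation plus identifier normalization.

    Returns a new record and leaves the raw input untouched, so it can be
    reprocessed later under alternative rules.
    """
    if not isinstance(raw.adjusted_gross, int) or raw.adjusted_gross <= 0:
        raise ValueError("adjusted gross score must be a positive integer")
    if not 55 <= raw.slope <= 155:  # Slope Ratings range from 55 to 155
        raise ValueError(f"slope {raw.slope} outside 55-155")
    if not 50.0 <= raw.course_rating <= 90.0:  # assumed plausibility window
        raise ValueError(f"course rating {raw.course_rating} implausible")
    tee_key = raw.tee.strip().lower()
    return replace(
        raw,
        course_id=raw.course_id.strip().upper(),
        tee=TEE_ALIASES.get(tee_key, tee_key.upper()),
    )


def score_differential(r: RoundRecord) -> float:
    """(AdjustedGrossScore - CourseRating) * 113 / Slope, rounded to one decimal."""
    return round((r.adjusted_gross - r.course_rating) * 113 / r.slope, 1)
```

Because `validate_and_normalize` returns a new record instead of mutating its input, the raw row survives for later recalculation.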

Missing and anomalous entries are inevitable in practice; therefore, explicit strategies for imputation and outlier control are required to avoid biasing handicap estimates. Small gaps in hole-level data can be imputed using conditional median or player-specific expectation models, while entire-round omissions should be treated as non-contributory unless validated. For anomaly detection and mitigation, implement a layered approach that combines statistical and domain-aware filters:

- Statistical thresholds (z-score, IQR) to flag extreme differentials

- Temporal consistency checks to identify abrupt unexplained jumps in performance

- Domain rules (e.g., impossible hole scores or mismatched par totals) for deterministic rejection
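The three layers can be sketched as independent filters. The 1.5 × IQR fence is the conventional Tukey rule; the 8-stroke jump threshold and the function names are illustrative assumptions that a governing body would need to set and document.

```python
from statistics import quantiles


def iqr_flags(differentials, k=1.5):
    """Statistical layer: flag values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(differentials, n=4)
    iqr = q3 - q1
    return [not (q1 - k * iqr <= d <= q3 + k * iqr) for d in differentials]


def jump_flags(differentials, max_jump=8.0):
    """Temporal layer: flag a round that differs from the previous round by more
    than max_jump strokes (hypothetical threshold); input is in date order."""
    return [False] + [abs(b - a) > max_jump for a, b in zip(differentials, differentials[1:])]


def domain_reject(hole_scores, hole_pars, course_par):
    """Domain layer: deterministic rejection of impossible records."""
    if len(hole_scores) != len(hole_pars) or sum(hole_pars) != course_par:
        return True  # hole count or par total does not match the course
    return any(s < 1 for s in hole_scores)  # every hole takes at least one stroke
```

Note that the temporal layer also flags the round immediately after a spike, which is one reason to combine layers rather than rely on any single filter.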

Quality assurance and reproducibility are enforced through metadata, versioning, and controlled release mechanisms aligned with recognized data governance practices. Every processed dataset should include a metadata manifest (schema version, preprocessing pipeline id, parameter values) and be stored with immutable provenance tags. The following table summarizes a compact schema for ingestion and provenance tracking:

| Field | Type | Example |
|---|---|---|
| RoundDate | ISO 8601 | 2025-07-12 |
| CourseID | String | GREENHLS_01 |
| CourseRating/Slope | Numeric | 72.4 / 128 |
| HoleScores | Array (18) | [4,5,3,…] |
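A minimal sketch of schema validation and a content-addressed provenance tag is shown below. It assumes the combined `CourseRating/Slope` field is stored as two numeric keys; the schema version, pipeline id, and parameter names are hypothetical. Hashing a canonical JSON manifest with SHA-256 is one common way to obtain an immutable tag, not a prescribed standard.

```python
import hashlib
import json
from datetime import date

SCHEMA_VERSION = "1.0"  # hypothetical schema version label


def validate_record(rec: dict) -> dict:
    """Check a record against the compact ingestion schema."""
    date.fromisoformat(rec["RoundDate"])  # raises ValueError unless YYYY-MM-DD
    if not isinstance(rec["CourseID"], str) or not rec["CourseID"]:
        raise ValueError("CourseID must be a non-empty string")
    for key in ("CourseRating", "Slope"):  # assumed split of CourseRating/Slope
        if not isinstance(rec[key], (int, float)):
            raise TypeError(f"{key} must be numeric")
    if len(rec["HoleScores"]) != 18:
        raise ValueError("HoleScores must contain 18 entries")
    return rec


def provenance_tag(rec: dict, pipeline_id: str, params: dict) -> str:
    """SHA-256 over a canonical JSON manifest (schema version, pipeline id,
    parameter values, record); changing any of them changes the tag."""
    manifest = {
        "schema_version": SCHEMA_VERSION,
        "pipeline_id": pipeline_id,
        "params": params,
        "record": validate_record(rec),
    }
    canonical = json.dumps(manifest, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()
```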

Advanced Statistical Modeling Techniques for Handicap Estimation and Uncertainty Quantification

Contemporary handicap estimation treats individual performance as a stochastic process governed by latent skill and contextual course factors. Hierarchical Bayesian models provide a coherent framework to partition variance into **player ability**, **course difficulty**, and **round-to-round temporal variation**, allowing pooling across players and courses to stabilize estimates in limited-data cases. Incorporating random effects for tees, slope/rating adjustments, and shot-level covariates yields more granular inference: for example, modeling residual heteroscedasticity by tee and hole can reveal that short-game variance dominates for certain golfers, while driving inconsistency explains most dispersion for others.
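Fitting the full hierarchical model typically calls for a probabilistic programming tool. As a lighter stand-in, the sketch below applies empirical-Bayes partial pooling to player means only (no course or tee effects), assuming a normal-normal model with a pooled within-player variance and a method-of-moments estimate of between-player variance. Players with few rounds are pulled most strongly toward the population mean.

```python
from statistics import mean, variance


def shrink_player_means(rounds_by_player):
    """Empirical-Bayes partial pooling of player mean differentials.

    Assumed model: differential ~ N(theta_i, sigma2), theta_i ~ N(mu, tau2).
    Requires at least two players and at least one player with 2+ rounds.
    """
    means = {p: mean(d) for p, d in rounds_by_player.items()}
    # Pooled within-player variance from players with at least two rounds.
    multi = [d for d in rounds_by_player.values() if len(d) > 1]
    sigma2 = sum((len(d) - 1) * variance(d) for d in multi) / sum(len(d) - 1 for d in multi)
    mu = mean(means.values())
    # Method-of-moments between-player variance, floored at zero.
    avg_se2 = mean(sigma2 / len(d) for d in rounds_by_player.values())
    tau2 = max(variance(list(means.values())) - avg_se2, 0.0)
    shrunk = {}
    for p, d in rounds_by_player.items():
        b = tau2 / (tau2 + sigma2 / len(d))  # weight on the player's own mean
        shrunk[p] = mu + b * (means[p] - mu)
    return shrunk
```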

Robust uncertainty quantification is essential when converting model output into practical handicaps and playing decisions. The following techniques are especially valuable for capturing both estimation and predictive uncertainty:

- Posterior predictive distributions: provide full probabilistic forecasts of future rounds and allow direct computation of credible intervals for handicap indices.

- Bootstrap and resampling: nonparametric alternatives for frequentist confidence intervals and for validating model stability under data perturbation.

- Gaussian processes and state-space models: capture smooth temporal trends and abrupt form changes, yielding time-varying uncertainty bands.

- Mixture models: accommodate multimodal performance regimes (e.g., “in-form” vs “off-form”) and quantify between-regime transition probabilities.

These approaches ensure that the reported handicap is accompanied by transparent measures of reliability rather than presented as a single-point estimate.
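A percentile bootstrap around a handicap-style statistic can be sketched as follows; the statistic (mean of the 8 lowest differentials), the resample count, and the seed are illustrative choices. Estimators built on order statistics can make percentile intervals somewhat unreliable, so the result is best read as a rough stability check rather than an exact confidence interval.

```python
import random
from statistics import mean


def percentile_bootstrap(data, stat, n_boot=2000, level=0.95, seed=1):
    """Point estimate plus a nonparametric percentile bootstrap interval for `stat`."""
    rng = random.Random(seed)
    # Resample with replacement, recompute the statistic, and sort the replicates.
    boots = sorted(stat(rng.choices(data, k=len(data))) for _ in range(n_boot))
    lo = boots[int((1 - level) / 2 * n_boot)]
    hi = boots[int((1 + level) / 2 * n_boot) - 1]
    return stat(data), lo, hi


def lowest_eight_mean(diffs):
    """Handicap-style statistic: mean of the 8 lowest differentials."""
    return mean(sorted(diffs)[:8])
```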

From an implementation perspective, computational strategies must balance fidelity and performance. Modern MCMC algorithms such as NUTS enable sampling from complex posterior geometries, while variational inference offers faster approximate posteriors for real-time applications. Model selection and calibration rely on out-of-sample validation metrics (**LOO-CV**, **WAIC**, and posterior predictive checks) to detect misspecification (e.g., underestimating tail risk). Prior sensitivity analyses and partial pooling diagnostics are necessary to prevent over-shrinking of true skill differentials, particularly in small-sample regimes such as junior or senior circuits.

Translating probabilistic model outputs into actionable handicaps and recommendations requires concise presentation and decision rules. Presenting a player’s handicap as a point estimate with a **95% credible interval** supports risk-aware choices in course selection and match formats; for match play, conservative adjustment rules can be derived from the lower bound of the interval. Example condensed outputs:

| Model | Point Estimate | 95% Interval (credible or confidence) | Compute |
|---|---|---|---|
| Hierarchical Bayes | 12.4 | 10.8-14.2 | MCMC (NUTS) |
| State-space GP | 12.1 | 11.0-13.6 | VI / Sparse GP |
| Bootstrap Frequentist | 12.6 | 11.2-14.0 | Resampling |

Communicating both central tendency and uncertainty empowers golfers and coaches to make statistically informed tactical choices while maintaining transparency about model limitations.

Integrating Course Characteristics, Environmental Conditions, and Adjusted Scoring Metrics

To operationalize this feature set, practitioners should measure a constrained list of high-impact variables and incorporate them as explicit modifiers. Key candidates include:

- Course geometry: effective playing length, forced-carry hazards, green undulation index.

- Surface characteristics: green speed (Stimp), fairway firmness, rough height.

- Atmospheric factors: sustained wind speed/direction, ambient temperature, precipitation intensity.

- Event factors: pin placement aggressiveness, tee-box rotation, pace of play constraints.

This targeted list balances parsimony with explanatory power and facilitates robust estimation with modest data samples.

Analytical adjustment of scores can be formalized via regression or hierarchical models that estimate multiplicative or additive effects on expected strokes. A compact example of estimated adjustment weights (illustrative) is shown below; these coefficients are intended to be applied to baseline expected scores to derive adjusted expectations.

| Factor | Adjustment (strokes) |
|---|---|
| Course Slope (per 10 pts) | +0.08 |
| Wind (per 10 km/h) | +0.12 |
| Rain / Soft Conditions | +0.20 |
| High Green Speed (+1 Stimp) | +0.05 |

These short-form coefficients should be validated through cross-validation and recalibrated seasonally.

Implementation within a handicap framework requires transparent rules for when and how adjustments apply; for example, compute an Adjusted Expected Score = Baseline + Σ(Weight_i × Factor_i) and then derive net differentials for handicap calculation. Important governance features include auditability of input sensors, minimum-data thresholds before applying environment-based corrections, and sensitivity analyses to ensure that adjustments preserve equitable competition across skill bands. Periodic model retraining and stakeholder-reviewed thresholds will maintain fairness while improving the predictive fidelity of handicaps under diverse playing conditions.
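The adjustment rule can be sketched directly. The weights are the illustrative coefficients from the adjustment table, not calibrated values; measuring slope in units of 10 points above the standard 113 and green speed relative to an unspecified reference Stimp reading are assumptions made for this example.

```python
# Illustrative weights from the adjustment table; not calibrated coefficients.
WEIGHTS = {
    "slope_per_10": 0.08,    # strokes per 10 slope points above 113 (assumed baseline)
    "wind_per_10kmh": 0.12,  # strokes per 10 km/h of sustained wind
    "rain_soft": 0.20,       # indicator: 1 for rain / soft conditions, else 0
    "stimp_plus_1": 0.05,    # strokes per +1 Stimp above a reference green speed
}


def adjusted_expected_score(baseline, factors, weights=WEIGHTS):
    """Adjusted Expected Score = Baseline + sum(Weight_i * Factor_i)."""
    return baseline + sum(weights[name] * value for name, value in factors.items())


def net_differential(gross, baseline, factors, weights=WEIGHTS):
    """Strokes above (+) or below (-) the condition-adjusted expectation."""
    return gross - adjusted_expected_score(baseline, factors, weights)
```

For a baseline of 85.0 strokes at slope 133 in 20 km/h wind on a soft course with greens 1.5 Stimp faster than reference, the adjustments sum to 0.675 strokes, so a gross 88 yields a net differential of about 2.3 strokes.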

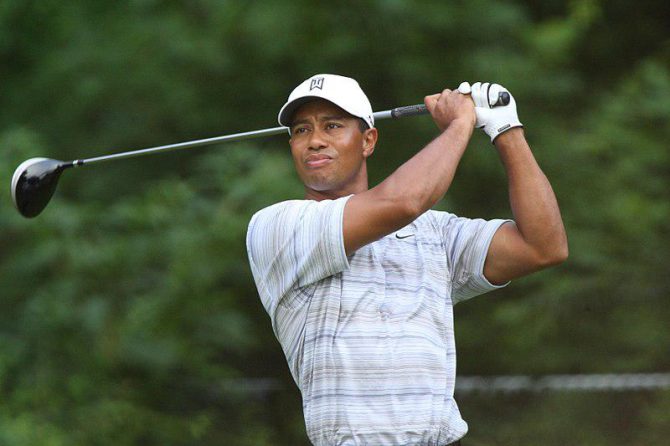

Player-Level Skill Decomposition and Temporal Dynamics for Personalized Assessment

Decomposing a golfer’s performance into constituent skill vectors clarifies which abilities drive handicap variability and which are noise. Using hierarchical factor models and supervised dimensionality reduction, one can isolate latent components such as **driving distance**, **approach accuracy**, **short-game efficiency**, **putting proficiency**, and **course-management coherence**. Each component is estimated with its own reliability metric and cross-validated contribution to round-level scores, enabling statistically principled separation of persistent skill from situational variance. This decomposition supports targeted intervention by translating abstract handicap shifts into actionable skill deficits.

Temporal structure is fundamental: player ability is not static but evolves with practice, fatigue, coaching interventions, and seasonal conditions. Time-weighted moving averages, exponentially weighted updates, and state-space (latent trend) models provide complementary lenses for capturing both short-term form and long-term learning curves. Bayesian updating allows incorporation of new rounds with prior distributions informed by past decomposed skill vectors, while Kalman-filter approaches can smooth noisy shot-level observations into coherent trajectories of ability. The result is a temporally aware assessment that reflects both recent performance and durable capability.
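A scalar Kalman filter for a local-level (random-walk) ability model illustrates how noisy results can be smoothed into an ability trajectory. It is applied here to round differentials rather than shot-level data, and the noise variances `q` and `r` and the prior `m0`, `p0` are assumptions that would normally be estimated from data. Once the gain settles to its steady state, this update is equivalent to an exponentially weighted moving average, which links the two approaches named above.

```python
def local_level_filter(observations, q, r, m0, p0):
    """Scalar Kalman filter for a random-walk ability model.

    State:       a_t = a_{t-1} + w_t,  w_t ~ N(0, q)  (drift in true ability)
    Observation: y_t = a_t + v_t,      v_t ~ N(0, r)  (round-to-round noise)
    Returns the filtered mean and variance of ability after each round.
    """
    m, p = m0, p0
    means, variances = [], []
    for y in observations:
        p = p + q            # predict: ability may have drifted since last round
        k = p / (p + r)      # Kalman gain: weight given to the new round
        m = m + k * (y - m)  # update: move the estimate toward the observation
        p = (1 - k) * p      # posterior variance shrinks after observing
        means.append(m)
        variances.append(p)
    return means, variances
```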

Personalized assessments arise by integrating decomposition and dynamics into mixed-effects predictive frameworks that account for individual idiosyncrasies and contextual covariates (course slope, weather, tee selection). Model outputs typically include:

- Estimated current ability per skill component (posterior mean and credible interval)

- Trend slope indicating improvement or decline

- Unexplained variance quantifying volatility and risk in expected scores

- Shot-level probability maps for decision support on strategic play

These outputs permit personalized recommendations (for example, whether to prioritize short-game drills or adopt conservative strategies on long holes) grounded in probabilistic forecasts rather than heuristic judgment.

| Metric | Interpretation |
|---|---|
| Ability Index | Aggregate latent score → handicap implication |
| Form Decay | Half-life of recent performance influence |
| Shot Variance | Likelihood of high-risk outcomes |

Translating these metrics into practice enables dynamic handicap refinement, informed course selection (matching tee boxes to expected scoring distributions), and shot-level strategy such as when to play aggressively versus conservatively. By quantifying both the components and their temporal dynamics, this analytical framework yields personalized, reproducible guidance to optimize play and development pathways for individual golfers.

Validation, Predictive Performance Metrics, and Sensitivity Analysis of Handicap Models

Model validation begins with a rigorous partitioning strategy that preserves the temporal and hierarchical structure of golf scoring data (e.g., rounds nested within players, with players and courses crossed). Use of **k‑fold cross‑validation** with player‑wise splits or **nested cross‑validation** for hyperparameter tuning reduces optimistic bias in performance estimates. Primary predictive metrics should include **RMSE** and **MAE** for continuous shot/score prediction, alongside **R²** and **explained variance** for relative fit; however, emphasis must be placed on out‑of‑sample stability rather than in‑sample goodness‑of‑fit. Reporting of prediction interval coverage (e.g., 90% interval coverage) is essential to communicate uncertainty in handicap forecasts and expected score ranges.
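The sketch below shows player-wise fold assignment and the core error metrics. Round-robin assignment of sorted player ids is an illustrative choice; a temporally faithful variant would additionally train only on rounds dated before each test round.

```python
import math
from statistics import mean


def player_folds(player_ids, k=5):
    """Fold index for each round, keeping all of a player's rounds in one fold."""
    unique = sorted(set(player_ids))
    fold_of = {p: i % k for i, p in enumerate(unique)}
    return [fold_of[p] for p in player_ids]


def rmse(y, yhat):
    """Root mean squared error."""
    return math.sqrt(mean((a - b) ** 2 for a, b in zip(y, yhat)))


def mae(y, yhat):
    """Mean absolute error."""
    return mean(abs(a - b) for a, b in zip(y, yhat))


def interval_coverage(y, lower, upper):
    """Fraction of observed scores that fall inside their prediction intervals."""
    return mean(1.0 if lo <= a <= hi else 0.0 for a, lo, hi in zip(y, lower, upper))
```

For a 90% prediction interval, coverage well below 0.90 on held-out players signals overconfident uncertainty estimates.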

Calibration and ranking fidelity are complementary validation perspectives. Calibration evaluates whether predicted handicaps map to observed scoring distributions, while ranking fidelity assesses the model’s ability to order players by skill. Recommended diagnostics include:

- Calibration plots and reliability diagrams to check bias across handicap strata;

- Rank correlation (Spearman’s rho) to measure ordinal consistency;

- Interval coverage and sharpness to assess uncertainty quantification.

These diagnostics allow practitioners to detect systematic under‑ or over‑prediction for specific cohorts (e.g., high‑handicap vs. low‑handicap players) and to prioritize remediation strategies such as recalibration or stratified modeling.
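Ranking fidelity and stratified calibration can be checked with a few lines of code. The sketch computes Spearman's rho as the Pearson correlation of average ranks (so tied values are handled) and reports the mean residual within bands of predicted handicap; the band edges are an illustrative assumption.

```python
from statistics import mean


def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1  # extend the run of tied values
        for idx in order[i:j + 1]:
            ranks[idx] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation of the average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


def bias_by_stratum(predicted, observed, edges):
    """Mean (observed - predicted) within predicted-handicap bands [lo, hi)."""
    out = {}
    for lo, hi in zip(edges, edges[1:]):
        res = [o - p for p, o in zip(predicted, observed) if lo <= p < hi]
        if res:
            out[(lo, hi)] = mean(res)
    return out
```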

Robust sensitivity analysis elucidates which inputs and assumptions drive model outputs. Conduct parameter perturbation, bootstrap resampling, and global sensitivity methods (e.g., **Sobol indices**) to quantify the influence of variables such as course slope, tee selection, weather covariates, and recent-form weighting. The table below summarizes practical tests and their diagnostic intent.

| Test | Purpose | Example Outcome |
|---|---|---|
| Bootstrap resampling | Estimate variance of performance metrics | RMSE ± SD |
| Parameter perturbation | Assess robustness to smoothing/decay rates | Handicap shift magnitude |
| Stratified scenario tests | Reveal subgroup biases | Different calibration slopes |
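Parameter perturbation can be illustrated on a recency-weighted index: recompute it with the decay half-life moved by a fixed relative amount and report the largest resulting shift. The exponential weighting and the ±25% perturbation range are assumptions chosen for illustration.

```python
def ewma_index(diffs, half_life):
    """Recency-weighted mean differential; diffs are ordered oldest to newest."""
    n = len(diffs)
    weights = [0.5 ** ((n - 1 - i) / half_life) for i in range(n)]
    return sum(w * d for w, d in zip(weights, diffs)) / sum(weights)


def perturbation_shift(diffs, half_life, rel_changes=(-0.25, 0.25)):
    """Largest absolute index shift when the half-life is scaled by (1 + c)
    for each c in rel_changes (the +/-25% default is illustrative)."""
    base = ewma_index(diffs, half_life)
    return max(abs(ewma_index(diffs, half_life * (1 + c)) - base) for c in rel_changes)
```

A player with a steady history shows almost no shift, while a strongly trending player shows a larger one, which is exactly where the choice of decay rate matters most.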

Final evaluation should prioritize reproducibility and transparency: publish cross‑validated metrics, confidence intervals, and diagnostic plots, and provide a concise set of **recommended thresholds** for acceptable predictive performance (e.g., target MAE relative to standard deviation of scores). Emphasize sensitivity results when recommending model deployment: models that sacrifice a small amount of mean error for substantially improved robustness across courses and player cohorts are often preferable. Documenting both point estimates and their uncertainty enables stakeholders to make informed decisions about handicap interaction, course assignment, and strategy optimization.

Practical Recommendations for Implementation, Coaching Interventions, and Policy Considerations

Implementation, as commonly defined, “refers to the carrying out of a plan” (Vocabulary.com), and this operational lens is essential when translating an analytical handicap framework into practice. For golf clubs and coaching organizations, the immediate imperative is to convert theoretical constructs into **standardized data capture protocols**, robust coach curricula, and aligned governance processes. Practical implementation should therefore foreground three linked aims: **reliability of measurement**, **coaching fidelity**, and **stakeholder alignment** (players, coaches, club administrators). Embedding these aims in a clear logic model will ensure that interventions are not ad hoc but are accountable to predefined outcomes and metrics.

Coaching interventions should be pragmatic, evidence-informed, and scalable. Recommended measures include the following actions and supporting tools:

- Baseline assessment using standardized rounds and validated shot-tracking to establish handicap-relevant performance vectors.

- Coach accreditation modules focused on measurement theory, bias mitigation, and feedback techniques.

- Technology-enabled monitoring (shot-tracking, mobile scoring) to reduce data entry error and increase temporal resolution.

- Structured practice regimens that map drills to handicap domains (consistency, short game, course management).

- Feedback loops that pair quantitative handicap trends with qualitative coach observations to guide adjustments.

| Intervention | Primary Objective | Key Metric |
|---|---|---|
| Baseline assessment | Establish measurement validity | Score variance |
| Coach accreditation | Improve coaching fidelity | Inter-rater agreement |
| Tech monitoring | Enhance data completeness | Missing data rate |

Policy considerations must address governance, equity, and data stewardship. Key policy actions include:

- Establishing a governance board with representation from coaches, players, statisticians, and club management to adjudicate rules and standardization.

- Adopting privacy standards for player data that comply with regional legislation and clarify data ownership and deletion rights.

- Embedding equity safeguards to ensure that handicap recalibrations and access to technology do not systematically disadvantage lower-resource players or clubs.

These policy levers help prevent drift from the analytical framework and protect the integrity and fairness of handicap evaluation.

Monitoring, evaluation, and scaling are the final pillars of successful implementation. Implement a phased pilot (6-12 months) with predefined stop/go criteria, followed by a staged rollout that includes continuous monitoring dashboards. Recommended evaluation metrics include:

- Measurement reliability (test-retest correlation)

- Intervention uptake (percentage of coaches completing accreditation)

- Player equity indicators (differential impacts by club/resource level)

- Cost-efficiency (administrative cost per participant)

Sustained improvement will require adaptive management: use pilot findings to refine protocols, codify best practices in policy documents, and institutionalize periodic audits to maintain **both technical rigor and practical relevance** as the handicap evaluation system scales.

Q&A


Q&A – Analytical Framework for Golf Handicap Evaluation

Q1: What is the objective of an analytical framework for golf handicap evaluation?

A1: The goal is to produce an objective, reproducible, and interpretable measure of a golfer’s playing ability (and the uncertainty around it) that accounts for course difficulty, playing conditions, and player-specific factors. The framework should support fair competition, monitor performance trends, quantify improvement opportunities, and provide diagnostic outputs that guide coaching and practice.

Q2: How does the proposed framework relate to existing handicap systems (e.g., World Handicap System)?

A2: The framework builds on the principles of systems such as the World Handicap System (WHS), which normalizes scores across courses using Course Rating, Slope Rating, and standardized differentials. It extends these principles by (a) explicitly modeling player and course heterogeneity using statistical models, (b) quantifying uncertainty via inferential methods (frequentist or Bayesian), and (c) incorporating advanced performance metrics (shot-level, strokes gained) when available.

Q3: What are the core components of the framework?

A3: Core components include:

– Data ingestion: round scores, hole/shot-level data, tee information, course rating and slope, weather/conditions, and tournament vs casual play flags.

– Normalization: baseline score differentials (e.g., (AdjustedGrossScore − CourseRating) * 113 / Slope) or equivalent adjustments.

– Statistical modeling: mixed-effects or hierarchical models to separate player ability, course effects, and residual variability; time-series components for trends.

– Uncertainty quantification: confidence/credible intervals for handicap estimates.

– Validation and calibration: backtesting, cross-validation, and calibration diagnostics.

– Outputs and diagnostics: handicap index, change-alerts, strokes-gained analytics, and prescriptive practice recommendations.

Q4: What statistical models are most appropriate?

A4: Recommended models include:

– Linear mixed-effects models (LMM) with random intercepts for players and courses to model average ability and course difficulty.

– Bayesian hierarchical models to incorporate prior information and produce full posterior uncertainty.

– State-space or hidden Markov models to capture time-varying ability (form) and regime shifts.

– Generalized additive models (GAMs) or GAMLSS when non-linear covariate effects and heteroskedasticity are present.

– Mixture models when players exhibit multimodal performance distributions (e.g., “in-form” vs “out-of-form” states).

Choice depends on data richness, computational resources, and the need for interpretability.

Q5: How should course difficulty and conditions be handled?

A5: Course difficulty is accounted for by Course Rating and Slope Rating or equivalent. The framework should:

– Use official course metrics for normalization.

– Include random effects for course and tee to capture idiosyncratic difficulty.

– Incorporate covariates for conditions (wind, precipitation, green speed) if available; include interaction terms if weather effects vary by course.

– Apply adjustment factors for temporary course setup (forward tees, preferred lies).

– Model measurement error in course ratings when course metrics are uncertain.

Q6: How is a handicap index computed within this framework?

A6: A basic approach:

1. Normalize each round: differential = (AdjustedGrossScore − CourseRating) * 113 / Slope (WHS formula) or a model-based equivalent.

2. Use a statistical estimator (e.g., trimmed mean, Bayesian posterior mean/median) over recent differentials or model-derived latent ability.

3. Provide an uncertainty estimate (standard error or credible interval).

Model-based variants replace step (1) with predicted expected score given player and course effects, and compute index as the latent player ability parameter (with scaling to match established handicap scale).
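Step 2 with the WHS estimator can be sketched as follows: take the 20 most recent differentials, average the lowest 8, round to one decimal, and cap the result at the WHS maximum Handicap Index of 54.0. The sketch assumes differentials are already computed and ordered oldest to newest, and it deliberately refuses shorter histories rather than approximating the WHS lookup table used when fewer than 20 scores exist.

```python
from statistics import mean

MAX_INDEX = 54.0  # WHS upper limit for a Handicap Index


def handicap_index(differentials):
    """WHS-style index from score differentials ordered oldest to newest."""
    recent = differentials[-20:]  # only the 20 most recent scores count
    if len(recent) < 20:
        raise ValueError("this sketch requires at least 20 differentials")
    value = mean(sorted(recent)[:8])  # average of the lowest 8
    # round() uses round-half-to-even on binary floats, which may differ from
    # a published rounding convention in rare tie cases.
    return min(round(value, 1), MAX_INDEX)
```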

Q7: How should temporal dynamics and sample size be addressed?

A7: Temporal dynamics:

– Implement time-weighting (e.g., exponential decay) or explicitly model ability as a stochastic process (random walk, autoregressive).

– Use state-space models for explicit smoothing and form detection.

Sample size:

– Require a minimum number of rounds for stable estimates; use hierarchical pooling to borrow strength across players when data are sparse.

– Report larger uncertainty for indices based on few rounds and defer significant index changes until confidence is adequate.

Q8: How can shot-level metrics be incorporated?

A8: When shot-level data exist, integrate strokes-gained metrics as covariates or intermediate outcomes to:

– Decompose total performance into driving, approach, short game, and putting.

– Improve predictive power and explainability of the handicap metric.

– Use multilevel models where strokes-gained components are predictors of score differentials and latent ability.
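Strokes gained for a single shot is the drop in expected strokes-to-hole-out minus the stroke taken: SG = E[start] − E[end] − 1. The sketch below uses a tiny hypothetical baseline table; real baselines are derived from large shot-level datasets, so these numbers are placeholders only.

```python
# Hypothetical baseline: expected strokes to hole out from (lie, distance) states.
# Placeholder values for illustration, not a real strokes-gained baseline.
BASELINE = {
    ("tee", 400): 4.0,
    ("fairway", 150): 2.9,
    ("green", 20): 1.9,
    ("green", 3): 1.1,
    ("holed", 0): 0.0,
}


def strokes_gained(start, end, penalty=0):
    """SG for one shot: E[start] - E[end] - 1, minus any penalty strokes."""
    return BASELINE[start] - BASELINE[end] - 1 - penalty


def by_category(shots):
    """Sum strokes gained per category for (category, start, end) shot records."""
    totals = {}
    for category, start, end in shots:
        totals[category] = totals.get(category, 0.0) + strokes_gained(start, end)
    return totals
```

Summed over a hole, the per-shot values equal the baseline from the tee minus strokes taken, which is why category totals add up to the player's overall gain or loss on that hole.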

Q9: How is uncertainty quantified and communicated?

A9: Quantify via standard errors, prediction intervals (frequentist) or credible intervals (Bayesian). Communicate:

– Point estimate of handicap and a 95% interval.

– Confidence in changes (e.g., “index likely improved by ≥1.0 with 90% probability”).

– Decision thresholds for official updates (e.g., require a high probability that ability changed before altering tournament eligibility).
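A statement such as “index likely improved by ≥1.0 with 90% probability” can be computed from two index estimates and their standard errors. This sketch assumes independent normal approximations; when the two estimation windows share rounds, the estimates are positively correlated and the true uncertainty of the difference is smaller than computed here.

```python
from math import sqrt
from statistics import NormalDist


def prob_improved(old_index, old_se, new_index, new_se, threshold=1.0):
    """P(old - new >= threshold) under independent normal approximations.

    Lower indices mean better play, so improvement is a drop in the index.
    """
    diff = NormalDist(mu=old_index - new_index, sigma=sqrt(old_se ** 2 + new_se ** 2))
    return 1.0 - diff.cdf(threshold)
```

With a 1.5-stroke drop and standard errors of 0.5 on each estimate, the probability of at least a 1.0 improvement is about 0.76, which would not clear a 90% decision threshold.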

Q10: How is fairness and robustness ensured across populations?

A10: Ensure fairness by:

– Using standardized course metrics and consistent normalization.

– Applying models that adjust for different volumes of play and variance heterogeneity.

– Regularly auditing for systematic biases (gender, age, access to better courses).

– Implementing simulation or policy-testing to see how proposed rules affect various subgroups.

Q11: How should the framework be validated?

A11: Validate with:

– Backtesting: predict future scores and compare errors (RMSE, MAE).

– Calibration checks: observed vs predicted percentiles.

– Discrimination metrics: ability to rank players (Spearman rank correlation between index and future performance).

– Skill-based tournament simulation to compare qualifications under current and proposed indices.

– Cross-validation and out-of-sample testing to guard against overfitting.

Q12: What diagnostics should be reported to users and administrators?

A12: Diagnostics include:

– Recent differentials and their distribution.

– Trend plots with uncertainty bands.

– Decomposition into strokes-gained (if available) or hole-level performance.

– Number of rounds used, effective sample size, and recency weights.

– Alerts for anomalous scores or suspected data errors.

Q13: What are the principal limitations of analytical approaches?

A13: Limitations include:

– Data quality issues (inaccurate course ratings, missing shot data).

– Selection bias (players self-select which rounds to report).

– Model misspecification (unmodeled interactions, non-stationary behavior).

– Potential overfitting with too many covariates for sparse players.

– Ethical and privacy concerns with granular tracking.

Q14: How do you handle exceptional scores or outliers?

A14: Options:

– Apply robust estimation (trimmed means, Winsorization).

– Use probabilistic models with heavy-tailed error distributions to down-weight extreme outcomes.

– Maintain rules for discarding or adjusting scores that violate validity checks (e.g., unauthorized assistance, inaccurate scorecards).
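Trimmed and Winsorized means, two of the robust estimators listed above, can be sketched in a few lines; the symmetric 10% default is an illustrative choice.

```python
from statistics import mean


def trimmed_mean(values, prop=0.1):
    """Drop the lowest and highest `prop` fraction of values, then average."""
    xs = sorted(values)
    k = int(prop * len(xs))
    return mean(xs[k:len(xs) - k])


def winsorized_mean(values, prop=0.1):
    """Clamp the lowest/highest `prop` fraction to the nearest retained value, then average."""
    xs = sorted(values)
    k = int(prop * len(xs))
    if k == 0:
        return mean(xs)
    lo, hi = xs[k], xs[-k - 1]
    return mean(min(max(x, lo), hi) for x in xs)
```

On a history with one blow-up round, both estimators stay close to the player's typical level while the plain mean is pulled upward.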

Q15: How can the framework inform targeted improvement strategies?

A15: By decomposing performance into component metrics (strokes gained by domain, dispersion, hole handicaps), the framework can:

– Identify high-value weaknesses (e.g., lost strokes on approaches).

– Quantify expected stroke improvement from skill improvements using predictive modeling (e.g., a 0.5 stroke gain on approach yields X reduction in index).

– Prioritize practice activities and track intervention efficacy via pre/post comparisons with causal inference techniques (difference-in-differences, matched comparisons).
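The difference-in-differences comparison reduces to a single expression on group means, sketched below. The function name is illustrative, and the estimate only has a causal reading under the parallel-trends assumption stated in the docstring.

```python
from statistics import mean


def diff_in_diff(treated_pre, treated_post, control_pre, control_post):
    """Difference-in-differences estimate of an intervention's effect on mean
    score differential (negative = improvement relative to controls).

    Assumes parallel trends: absent the intervention, both groups' mean
    differentials would have changed by the same amount.
    """
    return (mean(treated_post) - mean(treated_pre)) - (mean(control_post) - mean(control_pre))
```

If coached players drop 3 strokes while comparable uncoached players drop 1, the estimated intervention effect is 2 strokes of improvement.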

Q16: What are recommended implementation steps?

A16: Recommended steps:

1. Define goals and governance (competition vs coaching).

2. Gather and validate data sources; standardize inputs.

3. Implement baseline normalization (WHS differentials) for comparability.

4. Build incremental statistical models, starting simple (LMM) and advancing to hierarchical/Bayesian/time-series as needed.

5. Validate extensively and pilot with a subset of users.

6. Deploy with transparent documentation and user-facing diagnostics.

7. Monitor performance and recalibrate regularly.
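The progression in step 4 from simple to hierarchical models can be illustrated with the simplest partial-pooling estimator. Each player's mean differential is shrunk toward the population mean, and the fewer rounds that support it, the stronger the shrinkage. This is a minimal sketch of a random-intercept (normal-normal) model. It uses crude method-of-moments variance estimates rather than the REML or Bayesian fitting a production LMM would use, and the function name and data layout are hypothetical.

```python
from statistics import mean, variance


def shrink_player_means(rounds_by_player: dict[str, list[float]]) -> dict[str, float]:
    """Partial-pooling estimates of each player's mean score differential.

    Model: differential = population mean + player effect + round noise.
    Needs at least two players and at least two rounds per player.
    """
    player_means = {p: mean(d) for p, d in rounds_by_player.items()}
    mu = mean(list(player_means.values()))

    # Pooled within-player (round-to-round) variance.
    ss_within = sum((x - player_means[p]) ** 2
                    for p, d in rounds_by_player.items() for x in d)
    df_within = sum(len(d) - 1 for d in rounds_by_player.values())
    sigma2 = ss_within / df_within

    # Between-player variance: spread of player means minus the part explained
    # by round noise, floored so it stays positive.
    noise = mean([sigma2 / len(d) for d in rounds_by_player.values()])
    tau2 = max(variance(list(player_means.values())) - noise, 1e-6)

    estimates = {}
    for p, d in rounds_by_player.items():
        weight = tau2 / (tau2 + sigma2 / len(d))  # near 1: trust own data; near 0: pool
        estimates[p] = mu + weight * (player_means[p] - mu)
    return estimates


# Illustrative data: player B has the fewest rounds, so B is pulled in the most.
rounds = {"A": [10.0, 12.0, 11.0, 13.0], "B": [20.0, 22.0], "C": [15.0, 17.0, 16.0]}
print(shrink_player_means(rounds))
```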

Q17: What are future research directions?

A17: Opportunities include:

– Integrating wearable and sensor data for higher-fidelity performance modeling.

– Developing causal models to estimate the effect of training interventions on handicap.

– Adaptive models that automatically detect and respond to structural breaks in ability.

– Fairness-aware algorithms to mitigate disparities related to access and demographics.

– Real-time prediction and personalized practice prescription using reinforcement learning.

Q18: What privacy and ethical considerations must be observed?

A18: Ensure:

– Data minimization: collect only necessary data.

– Informed consent and transparent use policies.

– Secure storage and controlled access.

– Protections against misuse of granular tracking (e.g., for employment or discriminatory purposes).

– Fairness audits for model-driven decisions affecting competitive eligibility.

Q19: How should policy and governance interact with the analytical model?

A19: Administrators should define rules for official recognition (e.g., minimum rounds, acceptable uncertainty), oversight procedures for model changes, and processes for appeals. The model should be open to independent review and include change-management protocols.

Q20: Summary: What are the takeaways for academics and practitioners?

A20: An analytical framework for golf handicap evaluation should combine rigorous statistical modeling, transparent normalization for course and conditions, robust uncertainty quantification, and actionable diagnostics that support fair play and player development. Iterative validation, transparency, and attention to data quality and ethics are essential for credible, adoptable systems.

Conclusion

This article has presented a structured analytical framework for evaluating golf handicaps, synthesizing measurement theory, statistical modeling, and practical considerations of course and weather variability. By decomposing handicap determinants into observable performance components, course-rating factors, and stochastic noise, the framework clarifies how raw scores translate into equitable comparative metrics. It also identifies the levers (data quality, model specification, and rating precision) that most strongly influence reliability and fairness. Practitioners and researchers can apply these insights to improve handicap estimation, optimize tournament pairings, and design targeted training interventions.

The findings carry implications beyond golf: the methodological principles-rigorous validation, sensitivity to measurement error, and continual recalibration-mirror best practices in other analytical domains. Just as advanced analytical methodologies enhance detectability and accuracy in laboratory sciences, robust statistical design and instrumented data capture (e.g., shot-level telemetry) will strengthen handicap systems and expand their utility for performance assessment and talent development.

Limitations of the present treatment include dependence on available score histories and simplified assumptions about player behavior; future work should incorporate longitudinal learning models, causal inference on intervention effects, and cross-validation with high-resolution tracking data. Empirical testing across diverse courses and competitive levels will be essential to quantify external validity and to refine adjustment protocols for atypical conditions.

In sum, adopting an explicit, analytically grounded approach to handicap evaluation promotes transparency, equity, and actionable insight. Continued collaboration between statisticians, course raters, and coaching practitioners will be pivotal to translating this framework into operational improvements that advance both competitive integrity and player development.

Analytical Framework for Golf Handicap Evaluation

Why an analytical framework matters for your golf handicap

Understanding the mechanics behind a golf handicap (how it’s calculated, what drives changes, and how to translate it into on-course strategy) gives you control over improvement. A rigorous analytical framework helps players, coaches, and clubs make evidence-based decisions: choose the right tees, select practice focuses, and adapt tactics during competition.

Core concepts and definitions

- Handicap Index - a standardized measure of a golfer’s potential ability, used to compare players of varying skill. Most associations using the World Handicap System (WHS) derive it from recent score differentials.

- Score Differential – the normalized score for one round, accounting for course difficulty (see formula below).

- Course Rating – an estimate of the expected score for a scratch (zero-handicap) golfer on a particular set of tees.

- Slope Rating – a measure of how much harder the course plays for a bogey golfer than for a scratch golfer; 113 is the standard value, and slope allows scores from courses of different difficulty to be compared.

- Course Handicap - the number of handicap strokes a player receives for a specific course and tees (converted from Handicap Index using the Slope Rating and, under WHS, the difference between Course Rating and par).

- Playing Handicap – the course handicap adjusted for the competition format (team play allowances, match play, etc.).

Essential formulas (clear, accurate, and actionable)

Use these formulas when analyzing or verifying your handicap calculations.

- Score Differential = (Adjusted Gross Score − Course Rating) × 113 ÷ Slope Rating

- Course Handicap = Handicap Index × (Slope Rating ÷ 113) + (Course Rating − Par), rounded per your local association rules

- Adjusted Gross Score - your gross score after applying maximum hole scores for handicap purposes (under WHS, the maximum per hole is Net Double Bogey: par + 2 + any handicap strokes received on that hole)
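The formulas above translate directly into code. This sketch assumes that hole scores, pars, and handicap strokes received are supplied as equal-length lists. It includes an optional Playing Conditions Calculation (PCC) term in the differential and treats the WHS (Course Rating − Par) term in the Course Handicap as optional. Rounding halves upward is an assumption to check against local rules.

```python
import math
from typing import Optional


def net_double_bogey(par: int, strokes_received: int) -> int:
    """WHS maximum hole score for handicap purposes: par + 2 + strokes received."""
    return par + 2 + strokes_received


def adjusted_gross_score(scores: list[int], pars: list[int], strokes: list[int]) -> int:
    """Cap each hole at Net Double Bogey, then total the round."""
    if not len(scores) == len(pars) == len(strokes):
        raise ValueError("scores, pars and strokes need one entry per hole")
    return sum(min(s, net_double_bogey(p, r)) for s, p, r in zip(scores, pars, strokes))


def score_differential(ags: float, course_rating: float, slope_rating: float,
                       pcc: float = 0.0) -> float:
    """(AGS - Course Rating - PCC) x 113 / Slope Rating."""
    return (ags - course_rating - pcc) * 113 / slope_rating


def course_handicap(handicap_index: float, slope_rating: float,
                    course_rating: Optional[float] = None,
                    par: Optional[int] = None) -> int:
    """Index x Slope / 113, plus (Course Rating - Par) when both are given; .5 rounds up."""
    value = handicap_index * slope_rating / 113
    if course_rating is not None and par is not None:
        value += course_rating - par
    return math.floor(value + 0.5)


# Values from the example calculation table.
print(round(score_differential(91, 72.3, 130), 2))  # 16.25
print(course_handicap(15.2, 130))                   # 17
```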

How Handicap Index is commonly derived

Under modern handicap systems like the World Handicap System, Handicap Index is typically generated from the most recent series of differentials. A widely used approach:

- Compute score differentials for recent rounds (using the Score Differential formula)

- Apply any local Playing Conditions Calculation (PCC) adjustments if conditions were unusually easy or hard

- Average the best differentials from the recent sample (for WHS this is commonly the lowest 8 of the last 20 differentials; check your local governing body for exact details)

- Apply caps or limits defined by the association (e.g., upward/downward movement limits)
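The averaging and capping steps can be sketched as below. This assumes a full record of at least 20 differentials ordered oldest-first; WHS uses a separate lookup table for shorter records. The caps are modeled on the WHS soft cap, under which increases of more than 3.0 above the Low Handicap Index are halved, and the hard cap, which allows at most 5.0 above it. The parameter names are hypothetical, so verify the values with your governing body.

```python
def handicap_index(differentials: list[float]) -> float:
    """Average of the lowest 8 of the most recent 20 differentials, to one decimal."""
    if len(differentials) < 20:
        raise ValueError("this sketch only covers records with 20 or more differentials")
    best_eight = sorted(differentials[-20:])[:8]
    return round(sum(best_eight) / 8, 1)


def apply_caps(new_index: float, low_index: float,
               soft_cap: float = 3.0, hard_cap: float = 5.0) -> float:
    """Limit upward movement relative to the player's Low Handicap Index."""
    increase = new_index - low_index
    if increase <= soft_cap:
        return new_index
    limited = soft_cap + (increase - soft_cap) / 2        # soft cap: halve the excess
    return round(low_index + min(limited, hard_cap), 1)   # hard cap: absolute ceiling


record = [float(d) for d in range(1, 21)]  # illustrative differentials 1.0 ... 20.0
print(handicap_index(record))              # 4.5 (mean of 1.0 ... 8.0)
print(apply_caps(16.0, low_index=12.0))    # 15.5: an increase of 4.0 becomes 3.5
print(apply_caps(20.0, low_index=12.0))    # 17.0: the hard cap of 5.0 applies
```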

Example calculations – quick reference

| Item | Value | Notes |
|---|---|---|
| Adjusted Gross Score | 91 | Net Double Bogey applied where needed |
| Course Rating | 72.3 | Set by course rating authority |
| Slope Rating | 130 | Typical slope for a moderately tough course |
| Score Differential | (91 − 72.3) × 113 ÷ 130 ≈ 16.25 | Normalized one-round performance (16.3 when rounded to one decimal) |
| Handicap Index → Course Handicap | 15.2 × 130 ÷ 113 ≈ 17.49 → 17 | Round to nearest whole number per local rules; under WHS, add (Course Rating − Par) before rounding |

Data-driven KPIs to track

Lowering a handicap requires targeted improvements. Track these key performance indicators to identify where strokes are lost and where the highest ROI for practice lies:

- Greens in Regulation (GIR) - percentage of holes on which you reach the green in regulation (in par minus two strokes or fewer)

- Proximity to Hole – average distance from the hole after approach shots (measured in yards)

- Scrambling% – percentage of holes on which you make par or better after missing the green in regulation

- Putts per Round & Putts per GIR - reveal short-game and putting efficiency

- Strokes gained metrics (if available) – breakdown into off-the-tee, approach, around the green, and putting
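Most of these KPIs can be derived from a simple hole-by-hole log. This is a minimal sketch that assumes each hole records par, total strokes, and putts taken on the green; the `Hole` type is hypothetical. A hole counts as a GIR when the strokes taken before putting are at most par − 2. Proximity and strokes gained require shot-level data and are not covered.

```python
from dataclasses import dataclass


@dataclass
class Hole:
    par: int
    strokes: int
    putts: int  # strokes taken on the putting green, as logged by the player


def is_gir(h: Hole) -> bool:
    # Green in regulation: on the green in (par - 2) strokes or fewer.
    return h.strokes - h.putts <= h.par - 2


def round_kpis(holes: list[Hole]) -> dict[str, float]:
    gir_holes = [h for h in holes if is_gir(h)]
    missed = [h for h in holes if not is_gir(h)]
    return {
        "gir_pct": 100 * len(gir_holes) / len(holes),
        "putts": sum(h.putts for h in holes),
        "putts_per_gir": (sum(h.putts for h in gir_holes) / len(gir_holes)
                          if gir_holes else float("nan")),
        # Scrambling: par or better on holes where the green was missed.
        "scrambling_pct": (100 * sum(h.strokes <= h.par for h in missed) / len(missed)
                           if missed else float("nan")),
    }


# Illustrative four-hole log.
log = [Hole(4, 4, 2), Hole(4, 5, 2), Hole(3, 3, 1), Hole(5, 5, 2)]
print(round_kpis(log))
```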

Practical tips to optimize handicap using the framework

Turn analytics into action with these practical, evidence-based steps:

- Collect consistent data: log full rounds (hole-by-hole scores, tee used, weather, course conditions). Use a golf app or spreadsheet.

- Normalize scores: apply Net Double Bogey for handicapping, then compute differentials for each round so your data set is comparable across courses and conditions.

- Segment weaknesses: analyze KPIs to isolate problems (e.g., high putts per GIR means focus on putting drills; low GIR suggests working on approach shots).

- Prioritize drills by ROI: pick practice activities that reduce the most strokes (e.g., 3-6 yard proximity drills often produce big gains).

- Match tees to ability: play tees that produce a Course Handicap appropriate to your skill to enjoy competitive rounds and realistic scoring experiences.

- Account for format: apply playing handicap allowances for the competition format so your net strokes reflect the event rules.

- Monitor trends, not single rounds: the Handicap Index is designed to reflect potential, so use rolling averages to gauge progress.

Competition formats and handicap allowance examples

Different formats apply an allowance to your Course Handicap to produce a Playing Handicap. Here are common examples; always verify official allowances with the tournament committee:

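| Format | Typical Handicap Allowance | Notes |
|---|---|---|
| Singles Stroke Play | 95% (WHS recommendation; 100% in individual match play) | Applied to each player's Course Handicap |
| Four-Ball (Better Ball) | ~85% per player (stroke play) | Reduced to reflect the benefit of counting the better of two scores |
| Foursomes (Alternate Shot) | ~50% of the partners' combined Course Handicaps | Partners alternate shots with one ball, so the side plays off a single handicap |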
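Applying an allowance is a multiplication followed by rounding. This sketch assumes that allowances are given as fractions (0.85 for 85%) and that halves round upward. The function names are hypothetical, and official allowances and rounding rules come from the committee or governing body.

```python
import math


def round_half_up(x: float) -> int:
    """Round to the nearest whole number, with .5 rounding up (assumed convention)."""
    return math.floor(x + 0.5)


def playing_handicap(course_handicap: int, allowance: float) -> int:
    """Individual Playing Handicap: Course Handicap x allowance, rounded."""
    return round_half_up(course_handicap * allowance)


def foursomes_playing_handicap(ch_a: int, ch_b: int, allowance: float = 0.5) -> int:
    """Foursomes: allowance applied to the partners' combined Course Handicaps."""
    return round_half_up((ch_a + ch_b) * allowance)


print(playing_handicap(17, 0.85))          # four-ball: 14.45 -> 14
print(foursomes_playing_handicap(17, 10))  # foursomes: 13.5 -> 14
```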

Case study: Turning analytics into a 3-stroke improvement

Scenario: Alex, Handicap Index 15.2, plays 20 recorded rounds across multiple courses. After computing differentials and KPIs, Alex finds:

- GIR at 28% (below peer average)

- Putts per round = 33, putts per GIR = 2.6

- Scrambling = 38%

Action plan derived from the framework:

- Targeted approach-shot practice: two sessions per week aimed at reducing approach dispersion and raising GIR by 10 percentage points.

- Short game + bunker sessions to raise scrambling to 50%.

- Putting routine improvement: 10 minutes daily focused on lag putting and 3-footers to reduce putts by 1 per round.

After three months, Alex tracks the changes: GIR +12%, putts −1.2 per round, scrambling +9%. The resulting differentials trend down, and the Handicap Index drops by about 2-3 strokes, matching the program’s expectation.

Hole-level analysis: where to apply handicap strokes

Use the course handicap stroke allocation (the hole stroke index) combined with your statistical weaknesses to make smarter hole-by-hole decisions:

- On holes where you receive strokes, play more aggressively when a birdie chance exists, but avoid high-risk strategies that can lead to triple bogeys.

- On holes where you give strokes, focus on avoiding big numbers: play smart and keep the ball in play.

- Use yardage books and GPS to identify bailout zones and set conservative targets when the hole demands precision.
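Which holes receive strokes follows from the Course Handicap and each hole's stroke index (1 is the hole where the first stroke is allocated). This is a minimal sketch that assumes an 18-hole round and a non-negative Course Handicap; plus handicaps, which give strokes back, are not handled.

```python
def strokes_received(course_handicap: int, stroke_index: int, holes: int = 18) -> int:
    """Handicap strokes received on one hole, allocated in stroke-index order."""
    if course_handicap < 0:
        raise ValueError("plus handicaps are not covered by this sketch")
    if not 1 <= stroke_index <= holes:
        raise ValueError("stroke_index must be between 1 and the number of holes")
    full_laps, remainder = divmod(course_handicap, holes)
    # Every hole gets full_laps strokes; the `remainder` lowest indexes get one more.
    return full_laps + (1 if stroke_index <= remainder else 0)


# A Course Handicap of 20: two strokes on stroke index 1-2, one on every other hole.
allocation = {si: strokes_received(20, si) for si in range(1, 19)}
print(allocation)
```

Combined with per-hole KPIs, this shows on which holes a gross bogey still counts as a net par, which is the basis for the hole-by-hole tactics described here.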

Implementation checklist for clubs and coaches

If you’re a coach or club administrator, the following checklist helps implement this analytical handicap framework locally:

- Ensure members log hole-by-hole scores and course/tee identifiers

- Provide simple score cards or a mobile app integration for data collection

- Offer periodic education on Course Rating/Slope and how handicaps convert between tees

- Run monthly analytics reports showing trends by player, tee, and hole

- Design clinics targeted to the most common weaknesses revealed by club data

First-hand experience: how tracking changed my decision-making on course

After logging 30 rounds and calculating differentials, I started choosing tees that matched my Course Handicap. The immediate benefits were:

- More realistic expectations on par and scoring

- Clear focus during practice (e.g., aiming to reduce approach dispersion vs. random range time)

- Better club selection decisions based on proximity trends and carry requirements

By pairing objective metrics (GIR, proximity) with the handicap framework, I converted practice time into measurable handicap gains.

Common pitfalls and how to avoid them

- Relying on single-round results: one great or poor round can mislead; use rolling data.

- Ignoring course setup: playing conditions (firm greens, wind) affect differentials; apply the Playing Conditions Calculation where available.

- Practicing aimlessly: without KPI alignment, practice may not reduce your handicap; use the framework to prioritize.

- Not verifying local rules: handicap formulas, rounding, and allowances may differ by association; confirm local regulations.

Tools and technology to support the framework

Leverage technology to scale this framework:

- Golf stat-tracking apps (hole-by-hole logging, GPS, tee selection)

- Shot-tracking systems (for proximity & strokes gained analysis)

- Spreadsheet templates for computing differentials and rolling Handicap Index summaries

- Club management systems to publish course ratings, slope, and enable automatic index updates

Next steps: apply the framework and test improvements

Start by logging 5-10 rounds, computing the differentials, and deriving your current course handicaps. Use the KPIs in this article to prioritize 2-3 practice objectives and re-evaluate after 8-12 weeks. The analytic approach turns the abstract “handicap” number into actionable performance targets.

For official rules, rounding conventions, caps, and local allowances, always consult your national golf association or the World Handicap System documentation.