Introduction

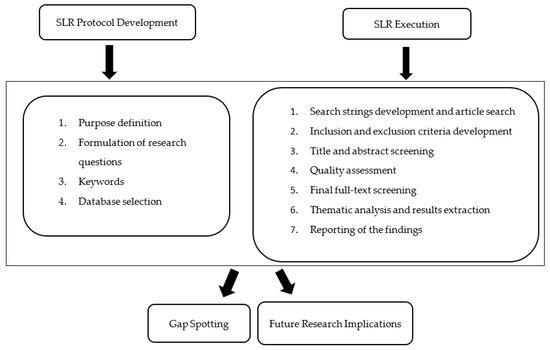

Precision in equipment design and selection is an essential determinant of performance in golf, yet systematic, quantitatively rigorous evaluations of golf clubs, shafts, and grips remain uneven across the literature and industry practice. Quantitative analysis, grounded in repeatable measurement, statistical inference, and robust experimental design, offers a means to move beyond subjective assessment and anecdote and to characterize how specific equipment attributes influence ball flight, shot dispersion, and player biomechanics. By framing equipment performance in measurable terms, researchers and practitioners can identify effect sizes, quantify trade-offs, and establish evidence-based recommendations for players of varying skill levels.

This article develops an integrated quantitative framework for assessing golf equipment performance. We synthesize measurement approaches commonly used in the physical and social sciences (precision instrumentation such as high-speed kinematics, launch monitors, and force sensors; controlled experimental protocols; and inferential statistics such as regression modeling, analysis of variance, and reliability testing) to produce standardized metrics of distance, dispersion, launch conditions, and biomechanical interaction. Particular attention is given to validity and reliability: calibration procedures, repeatability trials, and the treatment of measurement error are embedded in the methodology so that observed differences reflect true equipment effects rather than procedural artefacts.

Our objectives are threefold: (1) to operationalize key performance constructs for clubhead design, shaft dynamics, and grip ergonomics; (2) to demonstrate statistical approaches that isolate equipment contributions from player variability; and (3) to discuss practical implications for equipment development, fitting, and regulatory assessment. By offering a rigorous quantitative pathway from data collection to inference, this work aims to bridge gaps between engineering, biomechanics, and applied coaching, supporting decisions that optimize on-course performance while maintaining scientific clarity.

Precision Characterization of Clubhead Geometry and Surface Properties with Recommendations to Optimize Launch Conditions

High-fidelity quantification of clubhead geometry now relies on a combination of non-contact optical metrology and volumetric imaging to produce repeatable, traceable metrics. Techniques such as structured-light 3D scanning, confocal laser profilometry, and micro-CT yield precise measures of face curvature radius, loft distribution, and cavity volume at sub-0.1 mm resolution. These metrics enable calculation of derived parameters, namely the center-of-gravity (CG) coordinates, the polar and transverse moments of inertia (MOI), and the face's effective loft map, which directly inform launch-angle and spin predictions in physics-based ball-club impact models.
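As a rough illustration of how such derived parameters follow from scan data, the sketch below assumes the scan has already been segmented into a voxel grid of densities in g/mm³ (for example, micro-CT with assumed material densities). The function name `mass_properties` is hypothetical; it returns the mass, the CG, and the inertia tensor about the CG, including each voxel's own contribution.

```python
import numpy as np


def mass_properties(density, voxel_mm):
    """Mass (g), CG (mm) and inertia tensor about the CG (g·mm²) of a voxel model.

    density: 3D array of voxel densities in g/mm³ (assumed already segmented);
    voxel_mm: voxel edge length in mm.
    """
    density = np.asarray(density, dtype=float)
    m = (density * voxel_mm ** 3).ravel()             # mass of each voxel
    idx = np.indices(density.shape).reshape(3, -1).T  # (i, j, k) index of each voxel
    pos = (idx + 0.5) * voxel_mm                      # voxel centres in mm
    total = m.sum()
    cg = (pos * m[:, None]).sum(axis=0) / total
    r = pos - cg                                      # positions relative to the CG
    r2 = (r ** 2).sum(axis=1)
    # Point-mass part: sum over voxels of m * (|r|^2 * I - r r^T)
    inertia = np.eye(3) * (m * r2).sum() - (r.T * m) @ r
    # Each cubic voxel's own inertia about its centre adds m * a^2 / 6 per axis
    inertia += np.eye(3) * total * voxel_mm ** 2 / 6.0
    return total, cg, inertia
```

For a uniform 10 mm cube this reproduces the textbook result I = M·a²/6 about each axis; a real head would simply supply a non-uniform density grid, and the principal MOI values follow from the eigenvalues of the returned tensor.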

Surface characterization must extend beyond nominal groove geometry to include microtexture and roughness spectra, which influence friction at impact under wet and dry conditions and hence spin generation. Standardized outputs include arithmetic mean roughness (Ra), root-mean-square roughness (Rq), and autocorrelation length; groove cross-section is reported as width × depth × edge radius. Empirical correlations indicate that incremental increases in Ra and smaller (sharper) groove edge radii increase peak spin for wedges at moderate impact angles, but can yield diminishing returns at driver-like speeds because of reduced contact time and deformation of surface asperities.
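A minimal sketch of these profile outputs, assuming a single line profile that has already been form-removed and filtered and is sampled at uniform spacing. The 1/e threshold used for the autocorrelation length is one common convention among several, and `roughness_params` is a hypothetical name.

```python
import numpy as np


def roughness_params(z, dx_um):
    """Ra and Rq (units of z) and autocorrelation length (µm) of a 1D profile.

    Assumes z is already form-removed and filtered, with uniform spacing dx_um.
    """
    z = np.asarray(z, dtype=float)
    z = z - z.mean()                          # heights relative to the mean line
    ra = np.mean(np.abs(z))                   # arithmetic mean roughness
    rq = np.sqrt(np.mean(z ** 2))             # root-mean-square roughness
    n = z.size
    # Normalized (biased) autocorrelation for non-negative lags; acf[0] == 1
    acf = np.correlate(z, z, mode="full")[n - 1:] / (rq ** 2 * n)
    # Assumed convention: first lag at which the ACF falls below 1/e
    below = np.nonzero(acf < np.exp(-1))[0]
    corr_len = below[0] * dx_um if below.size else np.nan
    return ra, rq, corr_len
```

For a pure sinusoid of amplitude A this gives Ra ≈ 2A/π and Rq = A/√2, a quick sanity check before applying it to measured face profiles.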

To translate measured geometry and surface properties into optimized launch conditions, implement targeted hardware adjustments and fitting strategies. Key recommendations include:

- Loft modulation: Adjust loft ±1-2° to trade launch angle against spin while monitoring carry and descent angle.

- CG manipulation: Move CG forward to lower spin for drivers; rearward and low to increase launch and forgiveness for long irons.

- Face and groove tuning: For approach and short-game clubs, increase microtexture and maintain groove sharpness within governing tolerances to maximize controllable spin.

Below is a concise decision matrix summarizing typical geometric metrics and the recommended short-term adjustment to improve launch windows for representative club categories. Use these as first-order guidance prior to personalized launch monitor validation.

| Metric | Driver | Wedge |
|---|---|---|
| CG (mm from face) | Forward → reduce spin | Rearward → increase launch/spin |
| Face curvature | Neutral/low bulge for consistent launch | Higher curvature for shot-shaping |
| Surface roughness (Ra) | Lower Ra at high speeds | Higher Ra to maximize spin |

Robust measurement protocols and statistical quality control are essential to ensure that geometric and surface optimizations deliver the predicted launch improvements. Calibrate instruments against gauge blocks and roughness standards before each campaign, control ambient humidity and temperature, and collect replicate impacts across a matrix of launch-angle and speed conditions. Integrate high-speed impact imaging, CFD-informed aerodynamic estimates, and TrackMan/GCQuad outputs to close the loop between laboratory characterization and on-course performance. When measurement uncertainty is constrained and iterative fitting is applied, a 1-3% improvement in carry and a 5-10% reduction in spin variance are plausible targets.

Quantitative Assessment of Shaft Dynamic Response and Guidance for Frequency Tuning and Damping Selection

Quantitative characterization of shaft dynamics centers on identifying modal parameters (**natural frequencies**, **modal damping ratios (ζ)**, and **mode shapes**) and mapping them to performance observables such as vibration amplitude at the grip, effective tip inertia, and transient energy transfer at impact. The primary bending and coupled bending-torsion modes dominate player sensation and clubhead kinematics; these can be represented compactly by modal models in which the fundamental frequency is f1 ≈ (1/2π)·√(k/m), with k the effective modal stiffness and m the effective modal mass, and the damping ratio ζ controls decay time and resonance peak amplitude. For rigorous assessment, these modal parameters should be reported with uncertainty bounds (±σ) derived from repeated modal extractions.

Measurement protocols combine controlled excitation (impulse hammer or electrodynamic shaker) with high-bandwidth sensing (tri-axial accelerometers or laser Doppler vibrometry) and spectral analysis (windowed FFT, coherence, and curve-fit modal identification). Typical fundamental bending frequencies observed under standard swing boundary conditions fall approximately in the following ranges: drivers 40-60 Hz, woods/hybrids 50-80 Hz, and irons 70-110 Hz, although manufacturer geometries and shaft lengths produce systematic shifts. Because such values depend strongly on how the shaft is supported during the test, the fixture and boundary conditions should always be reported alongside them. Reported damping ratios for composite shafts commonly range from ~0.02 to 0.08; documenting both global and local (tip, mid, butt) damping improves the fidelity of player-centric predictions.
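The identification step can be sketched for the simplest case: a clean free-decay record dominated by a single mode. Here the FFT peak gives the damped frequency, and the logarithmic decrement over successive positive peaks gives ζ. This assumes a noise-free record long enough for the response to decay; practical hammer or shaker data would instead be processed by curve-fitting frequency response functions. The function name is illustrative.

```python
import numpy as np


def identify_mode(signal, fs):
    """Damped natural frequency (Hz) and damping ratio from a single-mode free decay.

    Assumes a clean record that has decayed by the end (no window applied).
    """
    x = np.asarray(signal, dtype=float)
    spectrum = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    f_d = freqs[np.argmax(spectrum[1:]) + 1]            # dominant peak, skipping DC
    # Indices of positive local maxima (one per oscillation cycle)
    mid = x[1:-1]
    peaks = np.nonzero((mid > x[:-2]) & (mid >= x[2:]) & (mid > 0))[0] + 1
    if len(peaks) < 2:
        raise ValueError("need at least two decay peaks")
    cycles = min(len(peaks) - 1, 10)                    # average over up to 10 cycles
    delta = np.log(x[peaks[0]] / x[peaks[cycles]]) / cycles  # logarithmic decrement
    zeta = delta / np.sqrt(4 * np.pi ** 2 + delta ** 2)
    return f_d, zeta
```

Frequency resolution is fs/N, so a 1 s record resolves f1 to about 1 Hz; repeating the extraction over several taps gives the ±σ bounds recommended above.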

- Define objective: prioritize ball-speed maximization, vibration minimization, or launch-angle control.

- Target frequency: select fundamental frequency band to match swing tempo (slower tempos trend toward lower target f1).

- Adjust stiffness: alter tip/lower‑mid stiffness to tune f1 without excessive mass redistribution.

- Control damping: introduce viscoelastic layers, resin treatments, or internal tapers to raise ζ within 0.03-0.08 for reduced harshness.

From a quantitative trade‑off viewpoint, multi‑physics models and experimental validation reveal monotonic but nonlinear relationships: raising f1 (stiffer shaft) reduces deflection and can improve launch stability for high‑tempo players but increases transmitted high‑frequency content at the grip; increasing ζ reduces resonant amplification and perceived vibration at the cost of modestly increased energy dissipation. Sensitivity analysis using finite element modal reductions and rigid‑body impact coupling indicates that small changes in effective tip compliance produce measurable changes in clubhead rotational acceleration and thus influence launch conditions; practitioners should therefore evaluate performance in normalized coefficients (e.g., percent change per 1% stiffness change) rather than absolute single‑point metrics.
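The normalized coefficient suggested above is an elasticity: the percent change in an output per 1% change in an input. The sketch below uses the single-degree-of-freedom relation f1 = (1/2π)·√(k/m) as a stand-in model; in practice `func` would wrap a finite-element or impact-coupling simulation. Both helper names and the example stiffness and mass values are hypothetical.

```python
import math


def f1_hz(k, m=1.0):
    """Stand-in model: single-degree-of-freedom frequency f1 = sqrt(k/m) / (2*pi)."""
    return math.sqrt(k / m) / (2 * math.pi)


def normalized_sensitivity(func, x0, rel_step=0.01):
    """Percent change in func per 1% change in its input (central difference)."""
    up = func(x0 * (1 + rel_step))
    down = func(x0 * (1 - rel_step))
    return ((up - down) / func(x0)) / (2 * rel_step)


# Hypothetical effective stiffness (N/m) with the default 1 kg effective mass
s = normalized_sensitivity(f1_hz, 1.0e5)
```

For this stand-in model the coefficient is about 0.5: a 1% stiffness increase raises f1 by roughly 0.5%, independent of the chosen k and m, which is what makes the normalized form comparable across shafts.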

Implementation guidance for testing and selection calls for an evidence-based workflow: conduct baseline modal testing, simulate impact coupling with the measured modal model, and iterate tuning interventions while tracking objective metrics (ball speed, launch angle, vibration RMS at the grip). Recommended empirical target bands for initial tuning are: driver f1 ≈ 42-58 Hz, fairway/utility 48-72 Hz, and irons 72-105 Hz, with damping ratios aimed at ζ = 0.03-0.07 depending on player sensitivity. Final selection should balance these quantified predictions with subject-matched psychophysical validation (player feel and consistency tests) so that engineered improvements translate into on-course performance gains.

Grip Ergonomics Evaluation and Recommendations to Enhance Stroke Consistency and Minimize Injury Risk

Contemporary evaluation of hand-grip interaction emphasizes measurable attributes of grip ergonomics: **pressure distribution**, **circumferential fit**, and **material compliance**. Quantifying these variables enables correlation of equipment parameters with kinematic consistency and musculoskeletal loading. Studies that adapt pressure-mapping technology (analogous to instrumented insoles) to grips suggest that uneven radial pressure and excessive palmar loading correlate with increased shot dispersion and elevated peak tendon stress during eccentric deceleration. An evidence-based assessment should therefore treat grip ergonomics as multivariate, integrating scalar metrics (peak pressure, mean contact area) with angular metrics (wrist deviation, supination angle) to characterize the ergonomic envelope of a given club-grip configuration.

Objective measurement protocols should combine sensor-based and observational modalities to produce reproducible datasets suitable for statistical analysis. Recommended instrumentation includes high-resolution grip pressure mats, 3D motion capture for wrist and forearm kinematics, and surface electromyography (sEMG) to quantify muscular activation patterns. The following compact reference table summarizes pragmatic target ranges derived from normative performance cohorts and injury-avoidance criteria:

| Metric | Target Range | Rationale |
|---|---|---|
| Peak grip pressure | 20-35 N/cm² | Maintains control without overloading flexor tendons |
| Wrist deviation (radial/ulnar) | ±10° from neutral | Optimizes face control and reduces shear stress |
| sEMG co-contraction index | Low-moderate (relative) | Supports efficient energy transfer and reduces fatigue |
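The pressure and co-contraction metrics in the table can be computed from raw recordings roughly as follows. This sketch assumes a pressure-mat frame reported in kPa with a known cell area, a hypothetical 5 kPa contact threshold, and rectified, amplitude-normalized sEMG envelopes for an agonist/antagonist pair. The co-contraction index uses one common overlap-based formulation (twice the shared area divided by the summed area), which is not the only definition in use; all function names are hypothetical.

```python
import numpy as np


def grip_pressure_metrics(frame_kpa, cell_area_cm2, contact_threshold_kpa=5.0):
    """Peak pressure (N/cm²) and contact area (cm²) for one pressure-mat frame.

    contact_threshold_kpa is an assumed noise floor, not a standard value.
    """
    p = np.asarray(frame_kpa, dtype=float) / 10.0        # 10 kPa = 1 N/cm²
    in_contact = p > contact_threshold_kpa / 10.0
    return float(p.max()), float(in_contact.sum() * cell_area_cm2)


def co_contraction_index(agonist, antagonist):
    """Overlap-based co-contraction index (%): 200 * sum(min(a, b)) / sum(a + b)."""
    a = np.abs(np.asarray(agonist, dtype=float))
    b = np.abs(np.asarray(antagonist, dtype=float))
    return float(200.0 * np.minimum(a, b).sum() / (a + b).sum())


def within(value, low, high):
    """Check a metric against a target range such as 20-35 N/cm²."""
    return low <= value <= high
```

Identical agonist and antagonist envelopes give 100%, and a silent antagonist gives 0%, which brackets the "low-moderate" target qualitatively.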

To enhance stroke consistency, implement targeted equipment and technique adjustments grounded in the quantitative profile. Key interventions include:

- Grip diameter optimization – select a circumference that reduces excessive finger flexion while preserving tactile feedback.

- Pressure banding – train players to maintain a pressure window (e.g., ±15% of baseline) through biofeedback drills to stabilize clubface orientation at impact.

- Material damping selection – employ grips with graded viscoelastic properties to attenuate high-frequency vibrations that disrupt fine motor control.
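Pressure banding can be scored as the fraction of samples that stay inside the player's window. The sketch below assumes a grip-pressure time series and a baseline measured earlier in the same units. The ±15% default mirrors the example in the list, and `band_adherence` is a hypothetical name.

```python
import numpy as np


def band_adherence(pressure_trace, baseline, tolerance=0.15):
    """Fraction of samples within ±tolerance of a player's baseline grip pressure."""
    p = np.asarray(pressure_trace, dtype=float)
    low, high = baseline * (1 - tolerance), baseline * (1 + tolerance)
    return float(np.mean((p >= low) & (p <= high)))
```

A biofeedback drill could display this score after each swing, for example `band_adherence(trace, baseline=100.0)`, so that players see directly how often they stay inside the window.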

Minimizing injury risk requires both equipment-centric and physiological strategies. Reducing peak repetitive loads through incremental modifications (softer grip compounds, slightly larger diameters for players with hyperextension tendencies, and neutral wrist alignment cues) decreases cumulative tendon strain. Concurrently, conditioning programs that emphasize eccentric strengthening of the wrist flexors and extensors, together with proprioceptive training, can shift loading away from vulnerable tendinous insertions. Clinical monitoring should flag persistent deviations in pressure or sEMG patterns for early intervention.

An operational protocol for practitioners integrates baseline ergonomics testing, individualized equipment prescription, and longitudinal monitoring. Baseline: record grip-pressure maps, kinematics, and sEMG during standardized swings. Prescription: adjust grip size, texture, and shaft torque to align measured metrics with target ranges. Follow-up: reassess quarterly or after any change in performance complaints, using the same instrumentation to quantify progress. This closed-loop, data-driven approach balances stroke consistency gains with proactive injury prevention, enabling rational trade-offs between maximal performance and long-term musculoskeletal health.

Integrated Ball Flight Modeling Combining Multibody Dynamics and Aerodynamic Coefficients for Predictive Performance Analysis

Contemporary simulation frameworks synthesize rigid-body multibody dynamics of the club-ball interaction with post-impact trajectory integrators to produce physics-based predictions of shot outcome. By explicitly resolving contact mechanics during the impact interval, the model captures transient impulse transfer, face deformation effects approximated via contact stiffness parameters, and the resultant launch conditions (velocity vector, spin vector, and angular rates). This coupling yields initial conditions that are physically consistent with conservation laws and enables downstream sensitivity analysis of how subtle changes in club geometry or swing kinematics alter performance metrics. Contact dynamics and energy partitioning are thus treated as first-order drivers of predictive fidelity.
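The paragraph above describes a contact-stiffness model. As a much simpler stand-in, a rigid-body collinear impact with a coefficient of restitution (COR) already shows how momentum conservation fixes launch speed. The sketch assumes a centre-face, head-on strike with no loft, spin, or shaft coupling; the function name is hypothetical, and the masses and speeds in the usage note are illustrative values, not measurements.

```python
def head_on_impact(head_mass, ball_mass, head_speed, cor):
    """Post-impact ball and head speeds (m/s) for a collinear, centre-face impact.

    Conserves momentum, with COR = (v_ball - v_head_after) / head_speed.
    Simplified stand-in: no loft, spin, face flexibility, or shaft coupling.
    """
    ball_speed = (1 + cor) * head_mass * head_speed / (head_mass + ball_mass)
    head_after = head_speed - ball_mass * ball_speed / head_mass
    return ball_speed, head_after
```

With a 0.200 kg head, a 0.0459 kg ball, 45 m/s head speed, and COR 0.83, `head_on_impact(0.200, 0.0459, 45.0, 0.83)` gives a ball speed of about 67 m/s (smash factor ≈ 1.49). Loft, gear effect, and spin require the fuller contact model described above.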

Post‑impact flight is governed by aerodynamic force and moment coefficients which parameterize complex fluid phenomena into tractable inputs for trajectory propagation. The most influential coefficients are Cd (drag), Cl (lift due to the Magnus effect), and Cm (aerodynamic moment), each of which depends on spin rate, Reynolds number, and surface roughness. Empirical calibration of these coefficients-using wind‑tunnel data or high‑fidelity CFD-anchors the model in observed physics while preserving computational efficiency.

| Coefficient | Role | Representative Range |
|---|---|---|
| Cd | Resists forward motion | 0.2-0.5 |
| Cl | Generates lift (spin-dependent) | 0.05-0.25 |
| Cm | Induces aerodynamic pitching | -0.02 to 0.02 |

To render the integrated model practical for predictive performance analysis, efficient numerical strategies are employed: implicit time‑stepping for stiff contact phases, adaptive Runge-Kutta propagation for free flight, and surrogate modeling (e.g., Gaussian processes or polynomial chaos) to accelerate parametric sweeps. Model outputs typically include carry distance, lateral deviation, apex height, and impact spin components. Practical benefits include:

- Rapid design iteration through surrogate‑aided optimization;

- Robust uncertainty quantification enabling confidence intervals on predicted carry under sensor noise;

- Model‑based fitting that maps equipment geometry and swing inputs to player‑centric performance metrics.
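A compact version of the free-flight stage might look like the sketch below. It is a vertical-plane model with constant, assumed Cd and Cl values chosen from inside the tabulated ranges, sea-level air density, and backspin-only lift. For brevity it uses fixed-step classical RK4 rather than the adaptive Runge-Kutta scheme described above. Real coefficients vary with spin ratio and Reynolds number, so the numbers it produces are illustrative only.

```python
import math

RHO = 1.225        # air density, kg/m³ (assumed sea-level value)
RADIUS = 0.02135   # ball radius, m
MASS = 0.04593     # ball mass, kg
G = 9.81           # gravitational acceleration, m/s²


def acceleration(vx, vy, cd, cl):
    """Drag opposes velocity; backspin lift acts perpendicular to it."""
    k = RHO * math.pi * RADIUS ** 2 / (2 * MASS)
    speed = math.hypot(vx, vy)
    ax = -k * speed * (cd * vx + cl * vy)
    ay = -k * speed * (cd * vy - cl * vx) - G
    return ax, ay


def carry_distance(speed, launch_deg, cd=0.25, cl=0.18, dt=1e-3, t_max=30.0):
    """Carry (m) from launch speed (m/s) and angle (deg) via fixed-step RK4."""
    theta = math.radians(launch_deg)
    state = [0.0, 0.0, speed * math.cos(theta), speed * math.sin(theta)]  # x, y, vx, vy

    def deriv(s):
        ax, ay = acceleration(s[2], s[3], cd, cl)
        return [s[2], s[3], ax, ay]

    for _ in range(int(t_max / dt)):
        k1 = deriv(state)
        k2 = deriv([s + 0.5 * dt * d for s, d in zip(state, k1)])
        k3 = deriv([s + 0.5 * dt * d for s, d in zip(state, k2)])
        k4 = deriv([s + dt * d for s, d in zip(state, k3)])
        new = [s + dt / 6.0 * (a + 2 * b + 2 * c + d)
               for s, a, b, c, d in zip(state, k1, k2, k3, k4)]
        if new[1] < 0.0:  # crossed ground level: interpolate linearly to y = 0
            frac = state[1] / (state[1] - new[1])
            return state[0] + frac * (new[0] - state[0])
        state = new
    raise RuntimeError("ball did not land within t_max")
```

Setting `cd=0.0, cl=0.0` recovers the vacuum range v²·sin(2θ)/g, a useful check before swapping in calibrated, spin-dependent coefficient tables.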

Validation against launch-monitor ensembles and controlled wind-tunnel campaigns is essential to close the loop between simulation and reality. Comparative studies should report not only the mean absolute error in trajectory metrics but also the bias in spin and launch-angle predictions. When applied to equipment development, the integrated approach supports multi-objective optimization (trading off forgiveness, distance, and spin stability) while providing quantified sensitivity to manufacturing tolerances. In operational deployments, practitioners should prioritize calibrated aerodynamic coefficients and measured contact parameters to maintain predictive reliability across diverse playing conditions.
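These error summaries are straightforward to compute once predictions and launch-monitor measurements are paired shot by shot. A minimal sketch (the helper name is hypothetical): reporting bias separately from MAE shows whether a model is systematically high or low rather than merely noisy.

```python
import numpy as np


def validation_summary(predicted, measured):
    """MAE, mean bias (predicted minus measured) and RMSE for paired shots."""
    p = np.asarray(predicted, dtype=float)
    m = np.asarray(measured, dtype=float)
    err = p - m
    return {
        "mae": float(np.mean(np.abs(err))),
        "bias": float(np.mean(err)),
        "rmse": float(np.sqrt(np.mean(err ** 2))),
    }
```

Applied separately to carry, spin, and launch angle, this yields the per-metric error table that comparative studies should report.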

Experimental Protocols and Instrumentation Standards for Reproducible Laboratory and On-Course Measurements

Experimental designs must begin with rigorous control of environmental and specimen variables to ensure comparability between laboratory and on-course assessments. Critical conditions (ambient temperature ±1 °C, relative humidity ±5%, and surface firmness) should be documented and, where possible, controlled via climate chambers or standardized turf beds. Ball and club specimens require preconditioning protocols (minimum 24-hour equilibration in the test environment) and batch sampling to mitigate production variance. Establishing and reporting acceptance criteria for input materials (e.g., ball compression, club loft ±0.2°) is essential to distinguish true performance differentials from specimen heterogeneity.

Instrumentation must adhere to traceable calibration schedules and documented uncertainty budgets. Primary measurement systems typically include:

- High‑speed photogrammetry (≥2,000 fps) for kinematic reconstruction,

- Radar/laser launch monitors with verified velocity accuracy (±0.2 m·s⁻¹),

- Force and pressure platforms for impact dynamics, and

- Spin measurement systems validated against spin rig standards.

All sensors must be cross-validated against reference standards annually, and calibration certificates should be retained with each dataset to support reproducibility claims.

Standard operating procedures for test execution must define the number of replicates, randomization, and operator training. A minimum of 20 valid impacts per club-ball combination is recommended for population-level inference, with outlier-removal criteria pre-specified (e.g., >3σ from mean clubhead speed). The following table summarizes exemplary acceptance tolerances used to flag suspect runs during analysis:

| Parameter | Tolerance | Action |
|---|---|---|
| Ball speed | ±0.5 m·s⁻¹ | Repeat impact |
| Launch angle | ±1.0° | Inspect alignment |
| Spin rate | ±50 rpm | Calibrate spin sensor |
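The screening rules above could be applied in a short pre-processing pass. This sketch uses the >3σ clubhead-speed rule from the text and the tabulated tolerances. The table does not state what each tolerance is measured against, so checking deviations from the median of the retained impacts in the same session is an assumption, as are the field names.

```python
import numpy as np

# Tolerances from the acceptance table (ball speed m/s, launch angle deg, spin rpm)
TOLERANCES = {"ball_speed": 0.5, "launch_angle": 1.0, "spin_rate": 50.0}


def screen_impacts(club_speed, metrics, z_max=3.0):
    """Apply the >3σ clubhead-speed outlier rule, then flag retained impacts whose
    metrics deviate from the session median by more than the tabulated tolerance.

    The median reference is an assumption; metrics maps field name -> values.
    """
    cs = np.asarray(club_speed, dtype=float)
    z = np.abs(cs - cs.mean()) / cs.std(ddof=1)
    keep = z <= z_max
    flags = {}
    for name, tol in TOLERANCES.items():
        values = np.asarray(metrics[name], dtype=float)
        reference = np.median(values[keep])          # assumed reference value
        flags[name] = keep & (np.abs(values - reference) > tol)
    return keep, flags
```

Note that with n impacts the largest attainable sample z-score is (n − 1)/√n, so a 3σ rule cannot flag anything with fewer than 11 impacts; the recommended minimum of 20 leaves room for it to act.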

Data acquisition strategies must prioritize temporal resolution and synchronization to reduce measurement artifacts. Use a common timebase (a GPS-disciplined clock or Network Time Protocol) to align multi-sensor streams, and sample at rates that preserve the relevant dynamics (a minimum of 10× the Nyquist rate for the highest-frequency content of interest). Report all preprocessing explicitly: filters (type, cutoff), coordinate transforms, and interpolation methods. Quantify propagated uncertainty by Monte Carlo or analytical error propagation, and accompany point estimates with confidence intervals to enable meaningful comparison across studies.
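As an example of Monte Carlo propagation, the sketch below pushes independent Gaussian errors in ball speed and clubhead speed through the smash-factor ratio and compares the result with first-order analytical propagation. Treating the ±0.2 m·s⁻¹ launch-monitor accuracy as a standard deviation is an assumption, as is error independence; the function name is hypothetical.

```python
import numpy as np


def smash_factor_uncertainty(ball_speed, sd_ball, club_speed, sd_club,
                             n=200_000, seed=1):
    """Monte Carlo SD, first-order analytical SD, and 95% interval of
    smash factor = ball speed / club speed, assuming independent Gaussian errors."""
    rng = np.random.default_rng(seed)
    b = rng.normal(ball_speed, sd_ball, n)
    c = rng.normal(club_speed, sd_club, n)
    sf = b / c
    lo, hi = np.percentile(sf, [2.5, 97.5])
    nominal = ball_speed / club_speed
    # First-order propagation for a ratio: relative variances add
    analytic_sd = nominal * np.sqrt((sd_ball / ball_speed) ** 2 + (sd_club / club_speed) ** 2)
    return float(sf.std(ddof=1)), float(analytic_sd), (float(lo), float(hi))


mc_sd, an_sd, interval = smash_factor_uncertainty(67.0, 0.2, 45.0, 0.2)
```

The two estimates agree closely here because the relative errors are small; the Monte Carlo route becomes preferable when the propagation passes through a nonlinear flight model.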

Quality assurance extends beyond single‑lab rigor to include inter‑laboratory comparisons and open reporting standards. Implement periodic round‑robin exercises to compute reproducibility metrics such as coefficient of variation (CV) and intraclass correlation coefficient (ICC), with predefined thresholds for acceptable inter‑lab agreement (e.g., CV <3% for ball speed). Maintain comprehensive metadata and raw data archives, and provide a minimal reporting checklist to ensure reuse:

- Instrument models, calibration dates, and uncertainty estimates

- Specimen preparation and conditioning steps

- Data processing algorithms and filter parameters

- Statistical methods for outlier treatment and uncertainty quantification
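The reproducibility metrics named above can be computed from a round-robin table in which the same specimens (rows) are measured by every laboratory (columns). This sketch implements ICC(2,1), in Shrout and Fleiss notation (two-way random effects, absolute agreement, single measurement), together with a sample CV. It assumes a complete table with no missing cells; the function names are hypothetical.

```python
import numpy as np


def cv_percent(x):
    """Sample coefficient of variation in percent."""
    x = np.asarray(x, dtype=float)
    return float(100.0 * x.std(ddof=1) / x.mean())


def icc_2_1(data):
    """ICC(2,1) for an n-specimens (rows) x k-labs (columns) table."""
    y = np.asarray(data, dtype=float)
    n, k = y.shape
    grand = y.mean()
    ss_rows = k * ((y.mean(axis=1) - grand) ** 2).sum()   # between specimens
    ss_cols = n * ((y.mean(axis=0) - grand) ** 2).sum()   # between labs
    ss_err = ((y - grand) ** 2).sum() - ss_rows - ss_cols  # residual
    msr = ss_rows / (n - 1)
    msc = ss_cols / (k - 1)
    mse = ss_err / ((n - 1) * (k - 1))
    return float((msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n))
```

Because ICC(2,1) measures absolute agreement, a constant offset between laboratories lowers it even when their rankings agree perfectly, which is the behaviour wanted for inter-lab comparability.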

Adherence to these procedural and instrumentation standards will improve reproducibility and facilitate cumulative advances in quantitative golf equipment assessment.

Statistical Methods and Uncertainty Quantification for Robust Performance Comparison and Evidence-Based Selection

Quantitative evaluation of golf equipment demands a rigorous statistical framework: statistics is an interdisciplinary science concerned with designing studies, estimating parameters, and quantifying uncertainty so that inferences drawn about clubheads, shafts, and grips are reproducible and defensible. Experimental designs that incorporate randomization, blocking (e.g., player or session), and repeated measures reduce bias and improve the precision of estimated effects. Attention to sampling distributions and the assumptions underlying inferential procedures ensures that reported differences reflect true performance variation rather than measurement noise or confounding factors.

Analytical tools should be chosen to match the structure of the data and the decision problem. Commonly employed methods include:

- Analysis of Variance (ANOVA) and mixed-effects models to partition variance between players, sessions, and equipment.

- Linear and generalized linear models for covariate adjustment (e.g., ball speed, launch angle, weather).

- Bootstrap and resampling techniques for nonparametric interval estimation when assumptions are questionable.

- Bayesian hierarchical models to pool information across clubs or players and produce direct probability statements about parameters.

- Equivalence and non-inferiority tests to support evidence-based selection when differences are small but practically meaningful.
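For the bootstrap item, a percentile interval for the difference in mean carry between two clubs can be sketched as follows. It assumes independent, unpaired shots; when shots are nested within players, resampling should be done at the player level or a mixed-effects model used instead. The helper name and the simulated data in the usage lines are hypothetical.

```python
import numpy as np


def bootstrap_diff_ci(a, b, n_boot=10_000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for mean(a) - mean(b), resampling each group
    independently (assumes unpaired, independent shots)."""
    rng = np.random.default_rng(seed)
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    ia = rng.integers(0, a.size, size=(n_boot, a.size))  # resample indices for a
    ib = rng.integers(0, b.size, size=(n_boot, b.size))  # resample indices for b
    diffs = a[ia].mean(axis=1) - b[ib].mean(axis=1)
    lo, hi = np.quantile(diffs, [alpha / 2, 1 - alpha / 2])
    return float(a.mean() - b.mean()), (float(lo), float(hi))


# Hypothetical carry data (m) for two clubs, 30 shots each
sim = np.random.default_rng(42)
club_a = sim.normal(230.0, 3.0, 30)
club_b = sim.normal(225.0, 3.0, 30)
estimate, (ci_lo, ci_hi) = bootstrap_diff_ci(club_a, club_b)
```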

Uncertainty quantification is central to robust comparisons: measurement error from launch monitors, manufacturing tolerances, environmental variability, and intra-player inconsistency all contribute to total uncertainty. Propagation of these uncertainties via Monte Carlo simulation or Bayesian posterior predictive checks yields credible/confidence intervals for key performance metrics (carry, dispersion, spin). Model calibration against ground-truth measurements and cross-validation for predictive performance are essential steps to avoid overconfident conclusions and to assess generalizability across playing conditions.

Robust selection requires blending statistical significance with practical relevance. Effect-size estimation, minimum detectable differences determined by power analysis, and multiplicity control (e.g., false discovery rate) prevent overinterpretation of spurious findings. Use mixed-effects frameworks to isolate equipment effects from player random effects, and apply decision-theoretic criteria (utility functions) when trade-offs exist between distance, accuracy, and feel. Where applicable, present both population-level estimates and individualized predictions to inform manufacturer targeting and consumer choice.
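Minimum detectable differences can be tied to shot counts with the usual normal-approximation formula for a two-sided, two-sample comparison of means, n = 2(z₁₋α/₂ + z₁₋β)²σ²/δ². This is a planning sketch that assumes equal group sizes, a known common SD, and independent shots; repeated-measures or mixed-effects designs need adjusted calculations. Only the Python standard library is used, and the function names are hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist


def shots_per_club(delta, sigma, alpha=0.05, power=0.8):
    """Shots per group to detect a mean difference delta with shot-to-shot SD sigma."""
    z = NormalDist().inv_cdf
    n = 2 * (z(1 - alpha / 2) + z(power)) ** 2 * sigma ** 2 / delta ** 2
    return ceil(n)


def minimum_detectable_difference(n, sigma, alpha=0.05, power=0.8):
    """Smallest mean difference detectable with n shots per group."""
    z = NormalDist().inv_cdf
    return (z(1 - alpha / 2) + z(power)) * sigma * sqrt(2 / n)
```

For example, detecting a 3 m carry difference with a shot-to-shot SD of 6 m at α = 0.05 and 80% power gives `shots_per_club(3, 6)` = 63 shots per club.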

| Uncertainty Source | Typical Method | Key Output |
|---|---|---|
| Measurement error | Bootstrap / Calibration | CI for launch parameters |
| Player variability | Mixed-effects model | Between/within-player variance |
| Manufacturing spread | Monte Carlo tolerance analysis | Predictive range of outcomes |

Reporting standards should be explicit and reproducible; at minimum, publish:

- Sample sizes, randomization/blocking scheme, and raw summary statistics

- Model specifications, uncertainty intervals (confidence or credible intervals), and power calculations

- Data and code availability statements to enable independent verification

Multiobjective Optimization Strategies for Balancing Distance, Accuracy, and Forgiveness in Equipment Design

Contemporary equipment development requires reconciling three intrinsically competing objectives: maximizing carry and total distance, minimizing shot dispersion to improve accuracy, and increasing the tolerance to off-center impacts to enhance forgiveness. Multiobjective formulations treat these outcomes as simultaneous objectives rather than collapsing them into a single scalar metric; this preserves trade-off information and yields a set of Pareto-efficient designs from which stakeholders can choose. Quantitative cost functions are constructed from ball-launch kinematics, impact physics, and human-in-the-loop variability models, enabling rigorous comparison across heterogeneous design candidates.

Algorithmic strategies to explore the design space include classical techniques and modern metaheuristics. Common approaches are:

- Weighted-sum optimization for rapid prototyping where priorities are known;

- Multiobjective evolutionary algorithms (MOEAs) such as NSGA-II for capturing diverse Pareto fronts;

- Surrogate-assisted optimization that couples high-fidelity finite-element or computational fluid-dynamics models with Gaussian-process or neural-network emulators to reduce evaluation cost;

- Robust optimization to ensure performance under player variability and manufacturing tolerances.

These strategies are chosen based on available computational budget, problem dimensionality, and the need to quantify uncertainty.
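Whichever search strategy is used, the final step is extracting the non-dominated set. A brute-force O(n²) filter suffices for a few thousand candidates. The sketch assumes each objective is flagged as maximized or minimized; the function name is hypothetical, and the usage rows reuse the illustrative archetypes from this section's table plus one hypothetical dominated design.

```python
import numpy as np


def pareto_mask(objectives, maximize):
    """Boolean mask of non-dominated rows.

    objectives: (n_designs, n_objectives) array; maximize: one bool per objective.
    """
    # Flip maximized objectives so that every objective is minimized
    f = np.asarray(objectives, dtype=float) * np.where(maximize, -1.0, 1.0)
    mask = np.ones(f.shape[0], dtype=bool)
    for i in range(f.shape[0]):
        # Row j dominates row i if it is no worse everywhere and strictly better somewhere
        dominates_i = np.all(f <= f[i], axis=1) & np.any(f < f[i], axis=1)
        mask[i] = not dominates_i.any()
    return mask


# Columns: relative distance (%), dispersion (deg), forgiveness index
designs = [[7.0, 2.8, 0.65], [3.0, 2.0, 0.85], [-1.0, 1.9, 1.10], [2.0, 2.5, 0.80]]
front = pareto_mask(designs, maximize=[True, False, True])
```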

A practical parametrization for optimization commonly includes clubhead geometry (face curvature, moment of inertia), center-of-gravity location, face stiffness map, loft and lie angles, and shaft dynamic properties. Constraints encode regulatory limits (e.g., COR), mass budget, and manufacturability. The following illustrative table summarizes three hypothetical Pareto-optimal design archetypes that emerged from a multiobjective run; values are normalized to a baseline driver and are intended only to demonstrate typical trade-offs.

| Design Variant | Relative Distance | Accuracy (dispersion, deg) | Forgiveness Index |
|---|---|---|---|
| Max-Distance | +7% | ±2.8 | 0.65 |
| Balanced | +3% | ±2.0 | 0.85 |
| High-Forgiveness | -1% | ±1.9 | 1.10 |

Model validation is essential: combine high-fidelity physics simulation (impact FEA, aerodynamic flight models) with instrumented launch-monitor testing and player trials to close the loop between in-silico predictions and on-course performance. Employ cross-validation across distinct player archetypes (e.g., high-speed, moderate-accuracy, high-variability) and use statistical hypothesis testing to ensure that apparent gains on the Pareto front are robust. Emphasize out-of-sample validation to detect overfitting of surrogate models to a narrow subset of swing dynamics.

For implementation, present designers and product managers with the Pareto set augmented by metadata: expected player archetype, sensitivity to manufacturing variance, and estimated cost of implementation. Recommended decision heuristics include selecting compromise designs near the knee of the Pareto curve or offering multiple SKUs targeted to distinct segments. Practical deployment should also consider regulatory compliance, marketing differentiation, and a feedback loop where post-launch telemetry informs subsequent optimization cycles-thereby converting the Pareto front from a static artifact into a living design roadmap.
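The knee of a Pareto curve has several operational definitions. One simple heuristic for two objectives picks the point farthest from the straight line joining the two extreme designs after min-max normalization. The sketch below assumes both objectives are to be maximized and that the input is already a non-dominated front; the function name is hypothetical.

```python
import numpy as np


def knee_index(front):
    """Index of the knee of a 2-objective front (both maximized): the point
    farthest from the line joining the two extreme designs, after min-max scaling."""
    p = np.asarray(front, dtype=float)
    p = (p - p.min(axis=0)) / (p.max(axis=0) - p.min(axis=0))
    a = p[np.argmax(p[:, 0])]   # extreme design: best in objective 0
    b = p[np.argmax(p[:, 1])]   # extreme design: best in objective 1
    d = b - a
    # Perpendicular distance to line a-b via the 2D cross product
    dist = np.abs(d[0] * (p[:, 1] - a[1]) - d[1] * (p[:, 0] - a[0])) / np.hypot(*d)
    return int(np.argmax(dist))
```

On a strongly bulging front such as `[[1.0, 0.0], [0.9, 0.9], [0.5, 0.95], [0.0, 1.0]]` this selects index 1, the design that gives up little in either objective.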

Validation Framework and Field Testing Recommendations for Player-Specific Calibration and Longitudinal Performance Monitoring

Establishing a rigorous framework begins with a clear operationalization of validation, understood as the systematic confirmation that measurement systems and protocols produce correct, reliable outputs under their intended use. For golf equipment performance, this means demonstrating that sensors, launch monitors, and analytics pipelines meet predefined accuracy and precision criteria across relevant shot types and environmental conditions. Validation must therefore be framed not as a one-time certification but as an ongoing process that underpins the credibility of player-specific calibration and longitudinal monitoring programs.

Core components of the proposed framework include the following integrated elements:

- Instrument validation: laboratory and field checks against metrological standards;

- Player-specific calibration: individualized parameter adjustment using controlled, repeatable shot sets;

- Environmental control and covariate logging: wind, temperature, humidity, turf conditions;

- Data integrity and preprocessing: automated filtering, outlier detection, and synchronization across devices;

- Statistical acceptance criteria: predefined limits for bias, precision, and repeatability.

Each element should be codified in standard operating procedures to ensure reproducibility and regulatory-grade documentation.

Field testing protocols should prioritize representativeness and statistical power. Recommended parameters include stratified sampling of shot types (drives, irons, chips, putts), randomized block designs to mitigate fatigue and learning effects, and minimum repeat counts per condition to achieve target precision. The following table summarizes suggested metrics and sampling cadence for routine field validation:

| Metric | Target Precision | Sampling Frequency |
|---|---|---|
| Carry Distance | ±0.5% SD | Weekly (practice blocks) |
| Ball Speed | ±0.3% SD | Per session (selective) |
| Club Path/Face Angle | ±0.5° | Monthly (equipment change) |

For longitudinal performance monitoring, adopt a hybrid analytics approach combining descriptive control charts with inferential mixed-effects models to separate within-player variability from equipment-induced shifts. Establish a baseline period (e.g., 4-8 weeks of stabilized play) to compute individualized norms and confidence bands. Use rolling averages and exponentially weighted moving averages for short-term trend detection, and set conservative trigger thresholds (e.g., shifts exceeding 2 standard deviations or persistent trends over 3-5 sessions) to prompt recalibration or forensic review. Emphasize the use of metadata to annotate interventions (new grip, loft change, injury) so that causal attribution is statistically robust.
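A minimal EWMA chart of the kind described above might look like the sketch below. It assumes an individualized baseline mean and SD estimated during the stabilization period. The values λ = 0.2 and L = 3 are conventional starting points rather than recommendations from this framework, and the time-varying control limits use the exact EWMA variance. The function name is hypothetical.

```python
import numpy as np


def ewma_alerts(values, baseline_mean, baseline_sd, lam=0.2, L=3.0):
    """EWMA statistic and boolean alerts where it leaves mean ± L·sigma_z.

    lam (smoothing) and L (limit width) are assumed defaults, to be tuned per player.
    """
    x = np.asarray(values, dtype=float)
    z = np.empty_like(x)
    prev = baseline_mean
    for t, xt in enumerate(x):
        prev = lam * xt + (1 - lam) * prev
        z[t] = prev
    t_idx = np.arange(1, x.size + 1)
    # Exact variance of the EWMA after t observations
    sigma_z = baseline_sd * np.sqrt(lam / (2 - lam) * (1 - (1 - lam) ** (2 * t_idx)))
    alerts = np.abs(z - baseline_mean) > L * sigma_z
    return z, alerts
```

A sustained 6 m drop in session-mean carry against a baseline SD of 4 m, for instance, trips the chart within about five sessions, consistent with the 3-5 session persistence window suggested above.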

Implementation should produce standardized deliverables that facilitate decision-making and external review. Recommended outputs include:

- Validation report: instrumentation specs, test matrices, bias/precision estimates;

- Calibration ledger: timestamped player parameter adjustments and rationales;

- Longitudinal dashboard: visual control charts, trend statistics, and automated alerts;

- Data packet for external audit: raw and processed files, preprocessing code, and metadata schema.

Adopt open data formats and version-controlled analytics pipelines to ensure reproducibility, enable external validation, and support continuous improvement of both equipment and player-specific calibration strategies.

Q&A

1. What does “quantitative analysis” mean in the context of golf equipment performance?

Answer: Quantitative analysis refers to the systematic measurement and numerical characterization of equipment attributes and their effects on ball flight and player outcomes. It emphasizes measurable variables (e.g., clubhead speed, ball speed, launch angle, spin rate, moment of inertia) and statistical or physics-based models to test hypotheses, quantify relationships, and support evidence-based decisions. (See general definitions of quantitative versus qualitative approaches [1-3].)

2. Why is a quantitative approach critically important for evaluating golf equipment?

Answer: A quantitative approach provides objective, reproducible evidence about how design variables influence performance, enables comparison across designs and conditions, supports optimization and trade-off analysis (distance vs. dispersion, durability vs. COR), and informs regulations and consumer selection. It reduces reliance on subjective impressions and isolates signal from noise through controlled testing and statistical inference.

3. What primary performance metrics should be measured?

Answer: Core measured metrics include clubhead speed, ball speed, smash factor (ball speed/clubhead speed), launch angle, launch direction, backspin and sidespin rates, spin axis, carry distance, total distance, lateral dispersion, and the post-impact ball velocity vector. Secondary metrics include impact location (face coordinates), face angle, effective loft, center of gravity (CG) position, moment of inertia (MOI), coefficient of restitution (COR), and vibration/acceleration signatures of shafts and grips.

4. What instruments and test setups are commonly used?

Answer: Common instruments include Doppler radar launch monitors (e.g., TrackMan, FlightScope), photometric high-speed camera systems, indoor and outdoor launch tunnels, instrumented impact rigs/swing robots, strain gauges and accelerometers on shafts and heads, 3D laser scanners for geometry, coordinate measuring machines (CMM), and material testing devices (tensile testers, DMA). Controlled environments (temperature, humidity, wind) and standardized balls are essential for reproducibility.

5. How do lab impact rigs and swing robots complement player testing?

Answer: Impact rigs provide precisely repeatable strikes (impact location, speed, angle, and face orientation) to isolate equipment effects from human variability, enabling controlled studies of loft, face design, or shaft coupling. Swing robots and instrumented human testing together offer a balance: rigs quantify pure equipment response; players evaluate real-world integration, feel, and variability.

6. Which physical and computational models are used to relate equipment geometry to ball flight?

Answer: Ball flight models use Newtonian mechanics with aerodynamic forces (drag and lift) parameterized by spin and speed; many use empirically derived lift/drag coefficients as functions of Reynolds number and spin factor. Clubhead-ball collision models use conservation of momentum and COR to estimate post-impact velocities and spin, accounting for effective loft and gear-effect torque. Finite element analysis (FEA) is used for stress, deformation, and modal analyses of heads and shafts; multibody dynamics models simulate club-shaft-hands coupling. Monte Carlo simulations propagate measured variability to predict distributions of carry and dispersion.

7. What statistical methods are essential for rigorous analysis?

Answer: Key methods include descriptive statistics (means, standard deviations), hypothesis testing (t-tests, ANOVA), regression modeling (linear, nonlinear, and mixed-effects for repeated measures), principal component analysis for dimensionality reduction, Bayesian inference for parameter estimation with uncertainty, and Monte Carlo simulation for uncertainty propagation. Power analysis and sample-size calculations are necessary to ensure sufficient sensitivity.
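Here is a minimal sketch of the sample-size step. It assumes a paired (within-player) comparison and a two-sided test. It uses the normal approximation n = ((z(1-α/2) + z(power)) / d)², where d is the standardized effect size: the mean difference divided by the SD of the paired differences. An exact t-based calculation gives a slightly larger n, so treat this result as a lower bound. The effect size in the example is hypothetical.

```python
import math
from statistics import NormalDist


def paired_sample_size(effect_size, alpha=0.05, power=0.80):
    """Approximate number of paired observations for a two-sided test.

    Normal approximation: n = ((z_{1-alpha/2} + z_{power}) / d) ** 2, where d is
    the mean difference divided by the SD of the paired differences.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z = NormalDist()  # standard normal distribution
    n = ((z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)) / effect_size) ** 2
    return math.ceil(n)


# Hypothetical target: detect a 3-yd mean carry difference when per-player
# differences have an SD of 6 yd (d = 0.5).
print(paired_sample_size(3 / 6))  # → 32
```

Halving the effect size roughly quadruples the required sample. This is why small equipment differences call for many shots.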

8. How is repeatability and reproducibility assessed?

Answer: Repeatability (same operator, equipment, conditions) is measured by within-session variance and intraclass correlation coefficients. Reproducibility (different operators, labs, or equipment) is assessed via inter-lab comparisons, Bland-Altman plots, and measurement system analysis (MSA). Reporting standard deviations, confidence intervals, and coefficients of variation for key metrics is standard practice.
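The sketch below illustrates two of the reporting measures above. It computes a within-session coefficient of variation (CV) and a Bland-Altman bias with 95% limits of agreement (bias ± 1.96 SD of the paired differences). The readings are made-up ball speeds for the same five shots, recorded by two hypothetical monitors. ICC and a full MSA are not covered here.

```python
from statistics import mean, stdev


def coefficient_of_variation(values):
    """Within-session CV in percent: sample SD divided by the mean."""
    return stdev(values) / mean(values) * 100


def bland_altman(device_a, device_b):
    """Bias and 95% limits of agreement for paired readings of the same shots.

    bias = mean(a - b); limits = bias +/- 1.96 * SD of the differences.
    """
    if len(device_a) != len(device_b):
        raise ValueError("readings must be paired shot by shot")
    diffs = [a - b for a, b in zip(device_a, device_b)]
    bias, sd = mean(diffs), stdev(diffs)
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


# Hypothetical ball speeds (mph) for the same five shots on two monitors.
monitor_a = [150.2, 148.9, 151.5, 149.8, 150.6]
monitor_b = [149.7, 148.6, 150.8, 149.5, 150.1]
print(f"CV (monitor A): {coefficient_of_variation(monitor_a):.2f} %")
bias, lo, hi = bland_altman(monitor_a, monitor_b)
print(f"bias {bias:.2f} mph, limits of agreement {lo:.2f} to {hi:.2f} mph")
```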

9. How are measurement uncertainties handled and reported?

Answer: Uncertainties are quantified via statistical estimators (standard error, 95% confidence intervals) and propagated through models (e.g., via error propagation or Monte Carlo sampling). Systematic biases (e.g., instrument calibration drift) are estimated using calibration standards and corrected or reported. Transparency requires reporting instrument resolutions, calibration methods, and environmental controls.
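This sketch combines the collision model from question 6 with Monte Carlo propagation. It assumes an idealized head-on, centered impact between a free clubhead of mass M and a stationary ball of mass m. For that case, momentum conservation and the COR definition give v_ball = v_head (1 + e) M / (M + m). Loft, shaft coupling, and gear effect are ignored. The input means and SDs (clubhead speed, COR, a 200 g head, a 45.9 g ball) are hypothetical values chosen for illustration.

```python
import random
from statistics import mean, quantiles


def ball_speed(head_speed, cor, head_mass=0.200, ball_mass=0.0459):
    """Idealized 1-D impact of a free clubhead on a stationary ball (SI units).

    Conservation of momentum plus the COR definition give
        v_ball = v_head * (1 + e) * M / (M + m).
    Loft, shaft coupling and gear-effect torque are ignored.
    """
    return head_speed * (1 + cor) * head_mass / (head_mass + ball_mass)


def propagate(n=20_000, seed=1):
    """Monte Carlo: sample the uncertain inputs and return simulated ball speeds."""
    rng = random.Random(seed)
    speeds = []
    for _ in range(n):
        v = rng.gauss(45.0, 0.8)     # clubhead speed, m/s (hypothetical mean and SD)
        e = rng.gauss(0.820, 0.005)  # COR (hypothetical mean and SD)
        speeds.append(ball_speed(v, e))
    return speeds


speeds = propagate()
cuts = quantiles(speeds, n=40)  # 39 cut points in 2.5 % steps
print(f"mean {mean(speeds):.2f} m/s, 95 % interval {cuts[0]:.2f} to {cuts[-1]:.2f} m/s")
```

The same sampling loop works unchanged with a more realistic impact or flight model substituted for `ball_speed`.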

10. What are common design trade-offs quantified by this analysis?

Answer: Trade-offs include distance versus accuracy (maximizing ball speed and launch for distance can increase dispersion), forgiveness versus workability (higher MOI improves off-center forgiveness but can reduce ability to shape shots), feel versus energy transfer (damping and shaft bend profiles affect feedback and ball speed), and COR versus durability and regulation compliance. Quantitative testing helps quantify marginal gains and where diminishing returns occur.

11. How is shaft dynamic behavior characterized and why does it matter?

Answer: Shaft behavior is characterized by static flex profiles, modal frequencies (natural frequency/frequency spectrum), torque, tip stiffness, and damping. Measured via accelerometers, modal analysis, and frequency-response testing, these properties influence timing of clubhead release, effective loft at impact, and feel. Quantitative characterization links shaft dynamics to launch conditions and shot dispersion.
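Here is a rough sketch of the frequency-response idea. It assumes an accelerometer trace recorded after the clamped shaft's tip is displaced and released. The code evaluates a direct discrete Fourier transform on a fine frequency grid and takes the peak. The search band, the grid step, and the synthetic damped 4.5 Hz test signal are all assumptions. Shaft frequency is often quoted in cycles per minute (cpm), so the result is also converted to cpm.

```python
import math


def dominant_frequency(signal, sample_rate, f_min=1.0, f_max=20.0, step=0.05):
    """Return the frequency (Hz) with the largest DFT magnitude in [f_min, f_max].

    A direct DFT evaluated on a fine frequency grid: fine for short records,
    though an FFT library would be much faster for long ones.
    """
    best_f, best_mag = f_min, -1.0
    n_steps = int(round((f_max - f_min) / step))
    for k in range(n_steps + 1):
        f = f_min + k * step
        w = 2 * math.pi * f / sample_rate  # radians per sample
        re = sum(x * math.cos(w * i) for i, x in enumerate(signal))
        im = sum(x * math.sin(w * i) for i, x in enumerate(signal))
        mag = math.hypot(re, im)
        if mag > best_mag:
            best_f, best_mag = f, mag
    return best_f


# Synthetic test trace (assumption): lightly damped 4.5 Hz oscillation,
# sampled at 500 Hz for 2 s.
fs = 500
trace = [math.exp(-0.5 * i / fs) * math.sin(2 * math.pi * 4.5 * i / fs) for i in range(2 * fs)]
f_peak = dominant_frequency(trace, fs)
print(f"{f_peak:.2f} Hz = {f_peak * 60:.0f} cpm")
```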

12. How is grip ergonomics evaluated quantitatively?

Answer: Grip ergonomics are assessed by measuring hand pressure distributions (pressure-mapping sensors), grip circumference and taper, slip resistance (coefficient of friction), and muscle activation patterns via EMG during swings. These metrics can be correlated with consistency of release, shaft loading, and shot dispersion.

13. How are off-center impacts and forgiveness quantified?

Answer: Forgiveness is often quantified by measuring performance metrics (ball speed, launch/spin, carry) as a function of impact offset from the clubface center. Sensitivity metrics include percentage loss in ball speed per mm of offset, change in spin rate, and dispersion increase. MOI and CG location are correlated with these sensitivity curves to define forgiveness indices.
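One simple way to turn an offset sweep into a single sensitivity number is to fit the percentage ball-speed loss against absolute offset, using a least-squares line through the origin. This assumes that loss grows roughly linearly with distance from center and that heel and toe behave alike. Real curves are usually nonlinear and asymmetric. The impact-rig readings below are hypothetical.

```python
def speed_loss_per_mm(center_speed, offsets_mm, ball_speeds):
    """Least-squares slope (through the origin) of % ball-speed loss vs |offset|.

    center_speed: ball speed for a centered strike (any speed unit).
    offsets_mm:   impact offsets from face center in mm (sign ignored).
    ball_speeds:  ball speeds measured at those offsets (same unit).
    """
    xs = [abs(o) for o in offsets_mm]
    ys = [(center_speed - v) / center_speed * 100 for v in ball_speeds]
    sxx = sum(x * x for x in xs)
    if sxx == 0:
        raise ValueError("need at least one off-center impact")
    return sum(x * y for x, y in zip(xs, ys)) / sxx


# Hypothetical impact-rig sweep: heel (negative) to toe (positive), 150 mph at center.
slope = speed_loss_per_mm(150.0, [-20, -10, 10, 20], [144.0, 147.0, 147.3, 144.3])
print(f"{slope:.3f} % ball-speed loss per mm")  # → 0.194 % ball-speed loss per mm
```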

14. How do environmental factors affect measurements and how are they controlled?

Answer: Temperature affects ball properties and material compliance; altitude affects air density and aerodynamic forces; wind introduces bias and variance. Controlled indoor facilities mitigate wind and allow temperature control; outdoor testing requires atmospheric measurements and corrections (air density) or statistical modeling to remove environmental effects.
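For the air-density correction, a common approach treats moist air as an ideal mixture of dry air and water vapor. The sketch below uses the Magnus/Tetens approximation for saturation vapor pressure. Drag and lift scale with density (F = ½ρv²CA), so the ratio of measured to reference density shows how much aerodynamic forces differ between sessions. The example session conditions are hypothetical.

```python
def air_density(temp_c, pressure_pa, rel_humidity=0.0):
    """Moist-air density in kg/m^3.

    temp_c: air temperature (deg C); pressure_pa: station pressure (Pa);
    rel_humidity: relative humidity as a fraction (0-1).
    """
    t_k = temp_c + 273.15
    # Magnus/Tetens approximation for saturation vapor pressure (Pa).
    p_sat = 610.78 * 10 ** (7.5 * temp_c / (temp_c + 237.3))
    p_v = rel_humidity * p_sat   # partial pressure of water vapor
    p_d = pressure_pa - p_v      # partial pressure of dry air
    r_dry, r_vapor = 287.058, 461.495  # specific gas constants, J/(kg K)
    return p_d / (r_dry * t_k) + p_v / (r_vapor * t_k)


reference = air_density(15.0, 101325.0)      # standard sea-level, dry air
session = air_density(30.0, 84000.0, 0.5)    # hypothetical warm, high-altitude day
print(round(reference, 3))  # → 1.225
print(f"force scale factor vs reference: {session / reference:.3f}")
```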

15. How do regulatory constraints (USGA/R&A) enter the analysis?

Answer: Regulations constrain allowable COR, size, shape, and spring-like properties of clubs and balls. Quantitative analysis must therefore include COR measurement and velocity tests that confirm compliance with governing-body limits. Design optimization is bounded by these regulatory ceilings, so trade-off analyses must be carried out within legal limits.

16. How are results translated into practical recommendations for players and designers?

Answer: For players, quantitative outputs are translated into fitting guidance (optimal loft, shaft flex, length, grip size) and predicted distributions of distance and dispersion under their swing profile. For designers, sensitivity analyses identify which geometric or material changes yield the greatest performance gains, and multi-objective optimization frameworks balance competing goals.

17. What role do simulation and optimization tools play?

Answer: Simulation (FEA, CFD, multibody, and flight trajectory models) allows exploration of the design space without expensive prototyping. Optimization algorithms (gradient-based, genetic algorithms, multi-objective Pareto optimization) search for designs that maximize desired metrics subject to constraints (regulation, manufacturing limits, cost). Simulations must be validated against empirical tests.
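The final filtering step of a multi-objective search can be sketched as follows. It keeps only Pareto-optimal (non-dominated) candidates when the goals are to maximize ball speed and minimize dispersion. In practice an optimizer, such as a genetic algorithm, would generate the candidates from validated simulations. The design names and values here are hypothetical.

```python
def pareto_front(designs):
    """Keep designs not dominated on (higher ball speed, lower dispersion).

    designs: list of (name, ball_speed, dispersion) tuples. A design is
    dominated if another is at least as good on both objectives and strictly
    better on at least one.
    """
    front = []
    for name, speed, disp in designs:
        dominated = any(
            s2 >= speed and d2 <= disp and (s2 > speed or d2 < disp)
            for _, s2, d2 in designs
        )
        if not dominated:
            front.append((name, speed, disp))
    return front


# Hypothetical simulated candidates: (name, ball speed mph, dispersion SD yd).
candidates = [("A", 150.0, 18.0), ("B", 151.5, 22.0), ("C", 149.0, 19.0),
              ("D", 148.0, 15.0), ("E", 151.0, 23.0)]
print([name for name, _, _ in pareto_front(candidates)])  # → ['A', 'B', 'D']
```

Choosing among the remaining designs is the trade-off decision itself. That choice depends on how a given player or product line weights distance against accuracy.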

18. What are limitations and common sources of error in quantitative equipment studies?

Answer: Limitations include model error (simplified aerodynamics), measurement error (instrument resolution and calibration), unmodeled human variability, sample-size constraints, and manufacturing tolerances. Overfitting to lab conditions can produce designs that perform suboptimally in varied real-world contexts. Transparent reporting of limitations and uncertainty is essential.

19. How should researchers design experiments to be scientifically rigorous?

Answer: Use randomized and repeated measures where appropriate, pre-register hypotheses if possible, perform power analyses to set sample sizes, include control conditions and calibration standards, blind analysts to reduce bias, and report raw data, processing steps, and uncertainty measures. Use mixed-effects models to account for within-player and between-player variability when testing with humans.
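Here is a sketch of the randomization step. It assumes a randomized complete-block layout, where each player hits every club once per block in a freshly shuffled order. The player labels, club labels, and seed are placeholders.

```python
import random


def randomized_schedule(players, clubs, blocks, seed=2024):
    """Randomized complete blocks: each player hits every club once per block.

    The club order is reshuffled in every block, so warm-up or fatigue trends
    are spread across conditions instead of favoring one club.
    """
    rng = random.Random(seed)  # fixed seed keeps the schedule reproducible
    schedule = {}
    for player in players:
        order = []
        for _ in range(blocks):
            block = list(clubs)
            rng.shuffle(block)
            order.extend(block)
        schedule[player] = order
    return schedule


plan = randomized_schedule(["P1", "P2", "P3"], ["Regular", "Stiff", "Extra Stiff"], blocks=5)
print(plan["P1"][:6])
```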

20. What are promising directions for future quantitative research in golf equipment?

Answer: Promising directions include coupling high-fidelity CFD and FEA with machine-learning surrogate models to accelerate optimization, in-situ sensing of clubs and balls for large-scale field data collection, personalized optimization using player-specific biomechanical models, improved multi-scale material models for novel composites, and probabilistic frameworks that explicitly quantify performance distributions and injury risks.

References and foundational concepts:

– General definitions of quantitative analysis and contrast with qualitative methods can be found in standard references on research methodology [1-4].

To sum up

In sum, the quantitative analysis of golf equipment performance offers a rigorous, empirically grounded pathway for understanding and optimizing the interaction between club technology and player biomechanics. By privileging numerical measurement, controlled experimental design, and statistical inference, such analyses move equipment evaluation beyond anecdote and marketing claims toward replicable, theory-driven insight. Metrics drawn from ball-flight data, launch monitors, material testing, and player kinematics, when analyzed with appropriate models and error quantification, enable nuanced comparisons across head designs, shaft dynamics, and grip configurations.

Despite its strengths, a quantitative framework also demands careful attention to validity, reliability, and generalizability: studies must control confounds, report uncertainty, and situate findings within the diversity of player skill levels and swing styles. Future work should continue to integrate high-fidelity measurement technologies, multivariate statistical methods, and predictive modeling (including machine learning where appropriate) to refine performance estimates and to translate laboratory findings into on-course outcomes. Collaboration among researchers, manufacturers, coaches, and athletes will be essential to ensure that analytic advances produce practical, ethically sound improvements in equipment design and selection.

Ultimately, adopting a quantitative, evidence-based approach provides stakeholders with a robust foundation for decision-making: informing manufacturers’ design choices, guiding coaches’ recommendations, and empowering golfers to select equipment that measurably enhances performance. Continued commitment to methodological transparency and interdisciplinary inquiry will accelerate progress toward more precise, personalized, and performance-optimized golf equipment.

Quantitative Analysis of Golf Equipment Performance

Why quantitative analysis matters for golf equipment, clubs and golf balls

Objective, data-driven testing separates marketing claims from real-world performance. Quantitative analysis helps golfers, club fitters, and engineers measure how a driver, shaft, iron, or golf ball affects key outcomes: carry distance, accuracy, spin, and repeatability. Using numerical data and statistical methods, which are core to quantitative research frameworks, makes findings reproducible and actionable (see quantitative research principles from academic sources).

Key performance metrics every fitter and golfer should know

- Clubhead speed (mph or m/s): primary driver of potential distance.

- Ball speed: energy transfer from club to ball; drives carry and total yards.

- Smash factor: ball speed ÷ clubhead speed; efficiency metric.

- Launch angle (°): the ball's initial vertical angle just after impact; interacts with spin to determine carry.

- Backspin / sidespin (rpm): affect height and curvature.

- Total spin (rpm): magnitude of the combined backspin and sidespin; affects stopping power and roll.

- Carry & total distance: practical outcomes on the course.

- Attack angle and face angle: influence spin and shot shape.

- MOI (Moment of Inertia) & forgiveness: resistance to twisting on off-center hits.

- Coefficient of Restitution (COR): ratio of separation speed to approach speed at impact; directly affects ball speed and is capped by the equipment rules.
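Backspin, sidespin, total spin, and spin axis are linked geometrically. The sketch below assumes the common decomposition backspin = total spin × cos(axis) and sidespin = total spin × sin(axis), and recovers total spin and spin-axis tilt from it. Sign conventions vary by launch monitor, and the input values are hypothetical.

```python
import math


def spin_components(backspin_rpm, sidespin_rpm):
    """Return (total spin in rpm, spin-axis tilt in degrees).

    Assumes backspin = total * cos(axis) and sidespin = total * sin(axis).
    Here positive sidespin gives a positive axis; which tilt means a fade or
    draw depends on the launch monitor's convention.
    """
    total = math.hypot(backspin_rpm, sidespin_rpm)
    axis = math.degrees(math.atan2(sidespin_rpm, backspin_rpm))
    return total, axis


total, axis = spin_components(2500, 500)  # hypothetical driver shot
print(f"{total:.0f} rpm total, spin axis {axis:.1f}°")  # → 2550 rpm total, spin axis 11.3°
```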

Data sources and measurement tools

Accurate quantitative analysis depends on reliable sensors and consistent methodology:

- Launch monitors: Doppler radar (TrackMan, FlightScope) and photometric camera systems (GCQuad) provide ball speed, launch, spin, carry, spin axis, and more.

- High-speed cameras: verify impact location and face deformation.

- Impact testing rigs: repeatable robot strikes for material and clubhead testing.

- Biomechanics sensors: wearable IMUs and motion capture for swing kinematics.

- Laboratory tests: materials testing (tensile, fatigue), CG mapping, and finite-element analysis (FEA) for face dynamics.

- Environmental sensors: temperature, humidity, and wind to normalize results.

Quantitative research methods & statistical modeling

Quantitative research principles (numerical data collection, controlled experimentation, and statistical inference) underpin rigorous equipment evaluation. Resources on quantitative methodology provide useful frameworks for hypothesis testing and data analysis.

Common statistical approaches used in golf-equipment testing:

- Descriptive statistics: means, standard deviations, confidence intervals for ball speed, carry.

- Regression models: predict carry distance from clubhead speed, launch, and spin.

- Mixed-effects models: separate player-to-player variability from equipment effects.

- ANOVA / ANCOVA: compare multiple clubs/shafts while controlling covariates (e.g., clubhead speed).

- PCA & clustering: identify patterns among shafts or balls (grouping by behavior).

- Monte Carlo simulations: model on-course distributions given measured shot dispersion and wind.

Tools commonly used: Python (pandas, scikit-learn, statsmodels), R (lme4, ggplot2) and specialized fitting suites in launch monitor software.
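To show what the regression step does without any of the libraries above, here is a small ordinary least-squares fit of carry on clubhead speed, launch angle, and spin. Spin is in thousands of rpm to keep the normal equations well conditioned. The data are synthetic, generated from known coefficients so the fit can be checked. A purely linear model also ignores the launch/spin optimum. For real analyses, statsmodels or scikit-learn also provide standard errors and diagnostics.

```python
def ols(rows, y):
    """Ordinary least squares via the normal equations (X'X) b = X'y.

    rows: predictor tuples; an intercept column is added automatically.
    Returns [intercept, b1, b2, ...]. Solved by Gauss-Jordan elimination
    with partial pivoting.
    """
    X = [[1.0] + list(r) for r in rows]
    p = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(xi[a] * xi[b] for xi in X) for b in range(p)]
         + [sum(xi[a] * yi for xi, yi in zip(X, y))] for a in range(p)]
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(p):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [v - factor * c for v, c in zip(A[r], A[col])]
    return [A[i][p] / A[i][i] for i in range(p)]


# Synthetic data from known coefficients (not measurements):
# carry = 10 + 2.5*speed(mph) + 3.0*launch(deg) - 8.0*spin(krpm)
true = (10.0, 2.5, 3.0, -8.0)
rows = [(92, 11.0, 2.9), (95, 12.5, 2.6), (98, 10.5, 2.4), (101, 13.0, 2.7),
        (104, 11.5, 2.2), (107, 14.0, 2.5), (99, 9.5, 3.0), (96, 13.5, 2.1)]
carry = [true[0] + true[1] * s + true[2] * l + true[3] * k for s, l, k in rows]
coef = ols(rows, carry)
print([round(c, 3) for c in coef])  # → [10.0, 2.5, 3.0, -8.0]
```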

How to design a controlled equipment test (step-by-step)

- Define the research question (e.g., “Does a stiffer shaft increase carry distance for a 95-105 mph player?”).

- Select metrics to measure (ball speed, launch, spin, carry, dispersion).

- Choose equipment and randomize order to avoid bias (rotate clubs between testers).

- Standardize the environment: indoor bay, same tee height and ball model, and temperature control if possible.

- Collect a sufficient sample size per condition; typical bench tests use 20-50 shots per club, and mixed-model field tests may need more depending on variability.

- Use impact tape or high-speed imaging to verify contact location and exclude mis-hits.

- Clean and normalize the data (remove outliers based on objective criteria, such as >3 SD from mean).

- Perform statistical testing and report effect sizes with confidence intervals, not just p-values.
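The cleaning and reporting steps can be sketched with the standard library.

- The >3 SD rule uses the sample SD. With 10 or fewer shots, no value can lie more than 3 sample SDs from the mean, so the rule only removes values in larger samples.
- The confidence interval uses a normal approximation with an unpooled (Welch-style) standard error, which is slightly too narrow for small samples.
- Cohen's d uses the pooled SD.

The carry values are made up.

```python
from statistics import NormalDist, mean, stdev


def drop_outliers(values, k=3.0):
    """Drop values more than k sample SDs from the mean."""
    m, s = mean(values), stdev(values)
    return [v for v in values if abs(v - m) <= k * s]


def mean_difference_ci(a, b, level=0.95):
    """Return (a - b mean difference, (low, high) CI, Cohen's d).

    CI: normal approximation with an unpooled (Welch-style) standard error.
    Cohen's d: difference divided by the pooled SD.
    """
    na, nb = len(a), len(b)
    va, vb = stdev(a) ** 2, stdev(b) ** 2
    diff = mean(a) - mean(b)
    se = (va / na + vb / nb) ** 0.5
    z = NormalDist().inv_cdf(0.5 + level / 2)
    pooled_sd = (((na - 1) * va + (nb - 1) * vb) / (na + nb - 2)) ** 0.5
    return diff, (diff - z * se, diff + z * se), diff / pooled_sd


# Made-up carries (yd); the 200-yd value stands in for a mis-hit.
stiff = [250 + (i % 5) for i in range(19)] + [200]
regular = [246 + 2 * (i % 5) for i in range(20)]
stiff_clean = drop_outliers(stiff)
diff, ci, d = mean_difference_ci(stiff_clean, regular)
print(f"kept {len(stiff_clean)} of {len(stiff)} shots; diff {diff:.2f} yd, "
      f"95% CI {ci[0]:.2f} to {ci[1]:.2f}, d = {d:.2f}")
```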

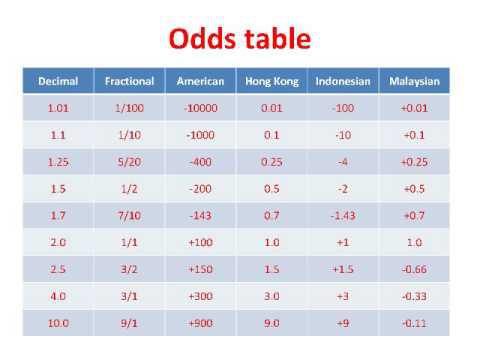

Case study: Driver shaft stiffness vs. carry distance (hypothetical)

Small controlled test: 10 golfers, 5 shots each, with three shaft flexes (Regular, Stiff, Extra Stiff). Average values are shown below:

| Metric | Regular | Stiff | Extra Stiff |
|---|---|---|---|
| Avg. clubhead speed (mph) | 96 | 98 | 99 |
| Avg. ball speed (mph) | 139 | 142 | 142 |
| Avg. launch angle (°) | 11.5 | 11.0 | 10.4 |
| Avg. carry (yds) | 245 | 251 | 249 |

Analysis summary: In this player group, the Stiff shaft produced a small increase in ball speed and carry. Much of that gain tracks the higher clubhead speed (98 vs. 96 mph), because smash factor is nearly unchanged (about 1.45 for both). Reduced energy loss in shaft deflection is a possible but unconfirmed contributor. Extra Stiff showed diminishing returns: ball speed did not rise further, and the lower launch reduced carry for some players. A mixed-effects model would be needed to confirm whether these differences are statistically meaningful after controlling for player variability.
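Computing smash factor from the table's averages helps separate speed gains from efficiency gains. The result is only indicative. The ratio of averages is not the same as the average of per-shot smash factors, and the inputs are the hypothetical values above.

```python
# Hypothetical case-study averages from the table above (mph).
averages = {
    "Regular": (96, 139),       # (clubhead speed, ball speed)
    "Stiff": (98, 142),
    "Extra Stiff": (99, 142),
}

# Smash factor = ball speed / clubhead speed, computed from the averages.
smash = {name: ball / club for name, (club, ball) in averages.items()}
for name, value in smash.items():
    print(f"{name:<12} {value:.3f}")
# prints 1.448, 1.449 and 1.434 for Regular, Stiff and Extra Stiff
```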

Benefits and practical tips for golfers, fitters and engineers

- Use objective metrics to match equipment to swing profile; don’t rely solely on feel.

- When testing, keep the golf ball model consistent: ball construction affects spin and launch.

- Document environmental conditions to compare sessions across days.

- Combine sensor data and video to validate unusual results (e.g., unexpected spin spikes).

- For amateurs, a small increase in smash factor or 2-3% increase in ball speed can translate into meaningful yardage gains.

- Understand trade-offs: more forgiveness (higher MOI) may reduce peak ball speed slightly but increase average performance across off-center hits.

Common pitfalls & how to avoid them

- Small sample sizes: these lead to overfitting and misleading results, so collect adequate shots per condition.

- Ignoring player variability: use mixed models or paired designs to isolate equipment effects.

- Confounding variables: change only one variable at a time (e.g., shaft only, not head and ball simultaneously).

- Over-reliance on single-shot best distance: use averages and dispersion metrics to quantify consistency.

- Not validating sensors: periodically calibrate launch monitors and verify against a trusted reference.
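The sketch below summarizes each condition by averages and spreads rather than by the single best shot. Lateral values are assumed to be signed offsets from the target line in yards, and the sample shots are hypothetical.

```python
from statistics import mean, stdev


def dispersion_summary(carries, laterals):
    """Averages and spreads for one condition, alongside the best single carry."""
    return {
        "mean_carry": mean(carries),
        "best_carry": max(carries),
        "sd_carry": stdev(carries),
        "sd_lateral": stdev(laterals),
        "mean_abs_lateral": mean([abs(x) for x in laterals]),
    }


# Hypothetical five-shot session: carry (yd) and signed lateral offset (yd).
summary = dispersion_summary([240, 250, 245, 255, 260], [-10, 5, 0, 15, -5])
for key, value in summary.items():
    print(f"{key}: {value:.1f}")
```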

First-hand experience: what happens at a professional fitting session

During a quality club-fitting session you’ll typically see a sequence:

- Baseline swings and measurements with the player’s current driver/iron/ball.

- Series of controlled swings with different shafts, lofts and head designs while tracking launch monitor data.

- Subjective feedback combined with objective metrics-look for the best balance of ball speed, launch, spin and dispersion.

- On-course validation for real-world feel; small indoor gains don’t always translate fully to outdoor play because of wind and lie conditions.

Recommended tools, software and datasets

- Launch monitors: TrackMan, FlightScope, SkyTrak+, GCQuad.

- Software: Python (pandas, scikit-learn), R (lme4), Excel for quick analysis.

- Video and high-speed cameras: 1,000+ fps to inspect impact.

- Data logging: store raw sensor streams and annotated notes on each session for reproducibility.

Quick reference: typical ranges for recreational to low-handicap players

| Player type | Clubhead speed (mph) | Carry with driver (yds) |
|---|---|---|
| Recreational | 80-95 | 180-230 |
| Club-level / good amateur | 96-105 | 230-260 |
| Low handicap / elite amateur | 106-115+ | 260-300+ |

Bringing it together: reproducible, data-driven advancement

Quantitative analysis of golf equipment performance bridges engineering, biomechanics and statistics. By following controlled testing methods rooted in quantitative research principles and using robust statistical techniques, fitters and players can make confident equipment choices that improve distance, accuracy and consistency on the course.

References & further reading: Introductory materials on quantitative research methods can be found at academic library guides and encyclopedic resources that describe numerical data collection and analysis frameworks.