Contemporary competitive and recreational golf increasingly relies on systematic analysis to translate heterogeneous player skills and diverse course architectures into predictable scoring outcomes. Variability in shot-making, course setup, and environmental conditions creates a complex decision space in which isolated metrics, such as driving distance or putting average, fail to capture the interactions that determine round-to-round performance. Establishing an analytical framework that integrates hole-by-hole scorecard analysis, shot-level telemetry, and course-feature quantification is therefore essential for both diagnosing limiting factors and prescribing effective strategic adjustments.

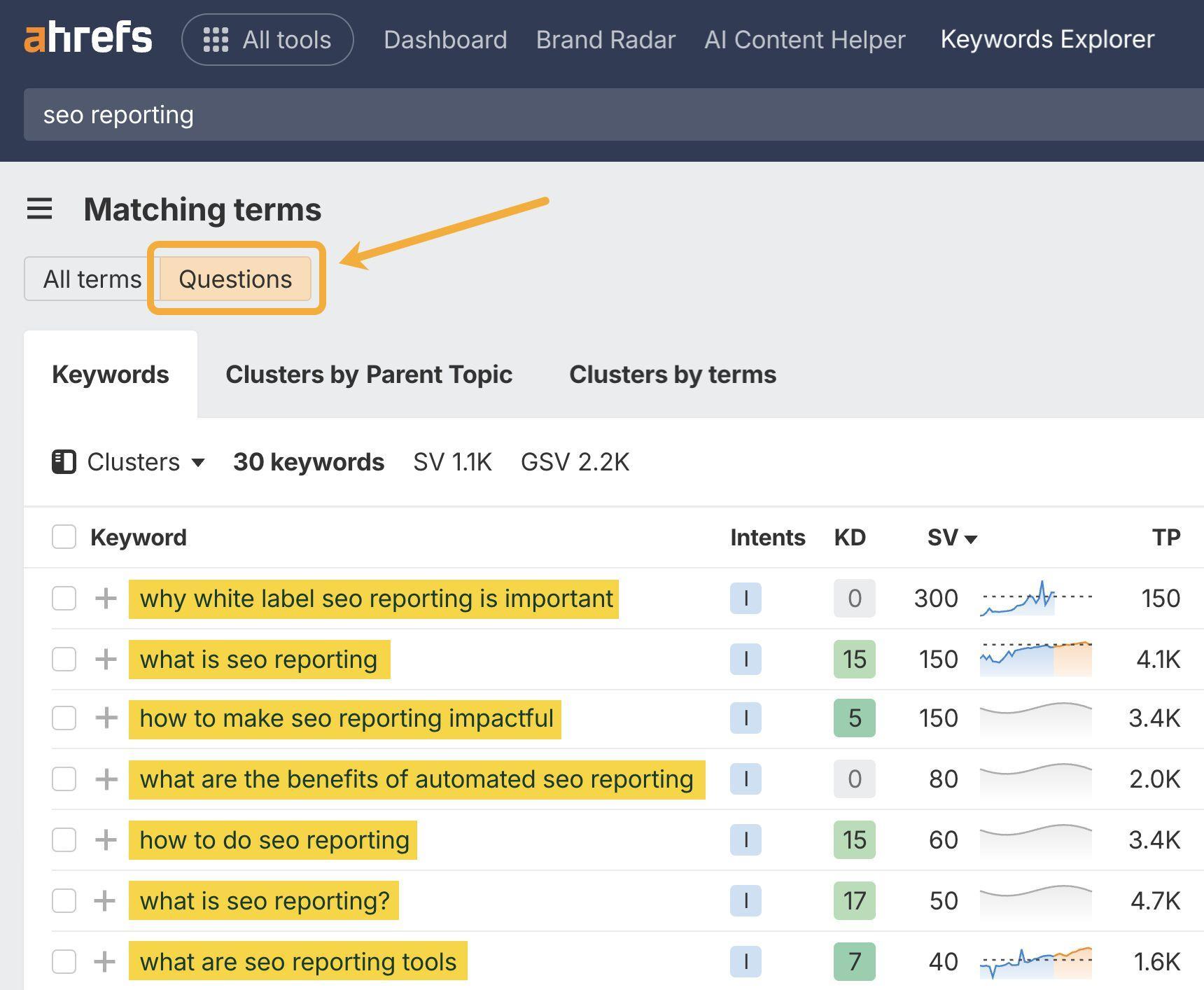

This article synthesizes methodological advances from scorecard analytics, performance-monitoring technologies, and statistical modeling to construct a coherent decision-support structure for golfers and coaches. Techniques range from conventional scorecard breakdowns and target-centered priority-setting to more granular data sources provided by launch monitors and camera systems (e.g., GC2) and by platform-based analytic tools (e.g., Golfmetrics). Statistical packages with visualization capabilities (e.g., Statgraphics) enable rigorous assessment of variance, correlation, and situational risk, permitting translation of raw measurements into actionable insights.

The proposed framework emphasizes three linked components: (1) precise measurement and classification of course and shot contexts; (2) quantification of individual player strengths, weaknesses, and variance profiles; and (3) prescriptive strategy generation that aligns practice emphases and on-course decision rules with measurable scoring objectives. By connecting diagnostic analytics to explicit management strategies, this approach aims to convert descriptive metrics into targeted interventions that yield measurable performance gains across practice and competitive settings.

Theoretical Foundations of Golf Scoring Models and Representation of Player Ability

Contemporary scoring models treat a round of golf as a sequence of stochastic events whose aggregate produces the observed score; central to this view is the concept of expected score as an objective to be minimized. Analytical treatments increasingly combine course-feature representations (yardage, hazard geometry, green size) with probabilistic shot-outcome distributions to predict outcomes at the hole and round levels. Seminal formulations emphasize that optimal strategy is endogenous to a player’s shot pattern: the same course layout yields different optimal choices for players with different dispersion and success probabilities. This probabilistic framing permits formal comparisons of conservative versus aggressive play through expected-value calculations and variance-aware criteria.
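The expected-value comparison described above can be made concrete with two score distributions for the same hole. The sketch below is a minimal illustration, assuming hypothetical probabilities for a conservative and an aggressive plan on a par 5; the distributions and function names are illustrative, not estimates from data.

```python
# Hypothetical hole-score distributions for one par 5 (score -> probability).
# The probabilities are illustrative assumptions, not measured data.
CONSERVATIVE = {4: 0.15, 5: 0.70, 6: 0.15}
AGGRESSIVE = {4: 0.40, 5: 0.35, 6: 0.15, 7: 0.10}


def expected_score(dist):
    """E[S] = sum over s of s * P(S = s)."""
    return sum(score * p for score, p in dist.items())


def score_variance(dist):
    """Var(S) = sum over s of P(S = s) * (s - E[S])^2."""
    mean = expected_score(dist)
    return sum(p * (score - mean) ** 2 for score, p in dist.items())


for name, dist in [("conservative", CONSERVATIVE), ("aggressive", AGGRESSIVE)]:
    print(f"{name}: E[S] = {expected_score(dist):.2f}, Var(S) = {score_variance(dist):.2f}")
```

With these numbers the aggressive plan lowers the mean by 0.05 strokes but roughly triples the variance, which is exactly the kind of trade-off that variance-aware criteria are meant to adjudicate.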

Representation of a player’s proficiency is most coherently posed as estimation of latent ability parameters that govern shot distributions across contexts (tee, approach, short game, putting). Practical estimators, most familiarly the handicap, provide an empirical aggregate but conflate multiple skill dimensions. A more granular representation decomposes ability into distinct components such as:

- Distance and dispersion (mean carry/roll and lateral/longitudinal variance);

- Greens-in-regulation propensity (contextualized by approach length and lie);

- Short-game conversion (up-and-down success rates from various radii);

- Putting efficiency (make percentages by distance bands).

Estimating these components enables model-based simulation of rounds and robust comparisons across courses and conditions.
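As a minimal sketch of model-based simulation, the code below plays a par 4 repeatedly from three of the component estimates listed above. The `SkillProfile` fields, the probabilities, and the simplified hole model (no three-putts, penalties, or holed chips) are assumptions made for illustration; a closed-form mean of the same model is included as a check on the simulation.

```python
import random
from dataclasses import dataclass


@dataclass
class SkillProfile:
    """Hypothetical component-skill parameters, each a probability in [0, 1]."""
    gir_prob: float           # greens-in-regulation propensity on this hole
    up_and_down_prob: float   # short-game conversion after a missed green
    one_putt_prob_gir: float  # chance of holing the first putt after a GIR


def simulate_par4(skill, rng):
    """Simulate one par-4 score under a deliberately simple model.

    Assumptions: two strokes reach the green (GIR) or its surrounds (missed
    GIR); three-putts, penalties, and holed chips are ignored.
    """
    strokes = 2
    if rng.random() < skill.gir_prob:
        strokes += 1 if rng.random() < skill.one_putt_prob_gir else 2
    else:
        strokes += 1  # chip or pitch onto the green
        strokes += 1 if rng.random() < skill.up_and_down_prob else 2
    return strokes


def expected_par4(skill):
    """Closed-form mean of the same model, used to check the simulation."""
    after_gir = 2 + (2 - skill.one_putt_prob_gir)
    after_miss = 3 + (2 - skill.up_and_down_prob)
    return skill.gir_prob * after_gir + (1 - skill.gir_prob) * after_miss


def simulated_mean(skill, n=100_000, seed=1):
    """Average score over n simulated plays with a seeded generator."""
    rng = random.Random(seed)
    return sum(simulate_par4(skill, rng) for _ in range(n)) / n


player = SkillProfile(gir_prob=0.45, up_and_down_prob=0.35, one_putt_prob_gir=0.15)
print(expected_par4(player), simulated_mean(player))
```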

Analytically useful model classes include deterministic heuristics, parametric statistical models, and dynamic decision models that embed conditional probabilities of success. Representative distinctions can be summarized concisely in a small reference table below:

| Model class | Key assumption | Primary use |
|---|---|---|
| Parametric stochastic | Shot outcomes drawn from fitted distributions | Predictive scoring, variance estimates |
| Markov / sequential | State transitions depend only on current lie/position | Hole-level strategy and decision analysis |
| Decision-theoretic (risk-aware) | Players optimize expected utility (E[S] ± risk) | Risk-reward shot selection |
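The Markov / sequential row can be illustrated with a small absorbing chain in which each stroke moves the ball between coarse states. This sketch assumes hypothetical transition probabilities and ignores distance within a state; it computes the expected strokes to hole out from each state by fixed-point iteration on E(s) = 1 + Σ P(s → s′)·E(s′), with E(holed) = 0.

```python
# Hypothetical per-stroke transition probabilities for one par 4.
# Each stroke moves the ball from its current state to a new one;
# "holed" is absorbing. Real models would also condition on distance.
TRANSITIONS = {
    "tee": {"fairway": 0.6, "rough": 0.4},
    "fairway": {"green": 0.7, "rough": 0.3},
    "rough": {"green": 0.5, "rough": 0.2, "fairway": 0.3},
    "green": {"holed": 0.5, "green": 0.5},
}


def expected_strokes(transitions, tol=1e-12, max_iter=10_000):
    """Solve E(s) = 1 + sum_{s'} P(s -> s') * E(s') with E("holed") = 0.

    Uses in-place (Gauss-Seidel style) fixed-point iteration, which converges
    here because every transient state eventually reaches "holed".
    """
    values = {state: 0.0 for state in transitions}
    values["holed"] = 0.0
    for _ in range(max_iter):
        largest_change = 0.0
        for state, outcomes in transitions.items():
            new_value = 1.0 + sum(p * values[nxt] for nxt, p in outcomes.items())
            largest_change = max(largest_change, abs(new_value - values[state]))
            values[state] = new_value
        if largest_change < tol:
            return values
    raise RuntimeError("fixed-point iteration did not converge")


values = expected_strokes(TRANSITIONS)
print({state: round(v, 3) for state, v in values.items()})
```

Re-running the calculation with, say, a higher tee-to-fairway probability gives the expected-strokes value of that skill change, which is how a sequential model supports hole-level strategy.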

Theoretical constructs have direct operational implications: models must be calibrated with context-rich data (shot-level outcomes, lie, wind, green speed) and validated out-of-sample to inform reliable strategy. For practitioners, model outputs translate into actionable targets and management rules; examples include preferred landing zones, club-selection thresholds, and when to prioritize variance reduction over low-mean strategies. To support this, collect core metrics such as:

- shot location and dispersion by club;

- approach-to-green proximity bands;

- up-and-down conversion rates by radius;

- putt make probabilities by distance and surface.

These disciplined measurements enable both individualized model fitting and principled goal-setting grounded in the theoretical foundations described above.

Quantifying Course Characteristics and Their Differential Effects on Scoring Outcomes

High-resolution quantification of course attributes is the prerequisite for any rigorous analysis of scoring outcomes. Key variables include **hole length**, **fairway width**, **green complexity (undulation and contour variance)**, **rough height**, **hazard frequency and proximity**, **elevation change**, and **wind exposure**; these can be measured with GPS telemetry, LiDAR-derived surface models, and on-course sensors. Data collection should pair course metrics with shot-level logs and player covariates (club used, lie, pin position, player handicap, and weather at time of play).

From an inferential viewpoint, a layered modeling strategy improves interpretability and predictive power. Primary approaches include **mixed‑effects regression** to account for repeated measures by player and hole, **principal component analysis** to reduce correlated course variables into orthogonal factors (e.g., a “length/targeting” factor vs. a “green/short-game” factor), and **quantile regression** to explore how course features impact different parts of the scoring distribution. Recommended model specifications: include player- and hole-level random intercepts, interaction terms between player skill and feature scores, and cross‑validation for out‑of‑sample assessment. The table below lists illustrative per-feature effects of the kind such a model would estimate.

| Feature | Average-Player Impact (strokes) | Low-Handicap Impact (strokes) |
|---|---|---|
| Long hole (+50 yd) | +0.35 | +0.10 |
| Narrow fairway (-10 m) | +0.28 | +0.05 |
| Complex green (high contour) | +0.22 | +0.18 |
| Deep rough (+15 cm) | +0.40 | +0.12 |

These illustrative estimates demonstrate differential sensitivity: **average players** suffer disproportionately from length and rough, whereas for **low‑handicap players** green complexity is the largest of the four effects (+0.18 strokes), plausibly reflecting its influence on birdie conversion.
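The differential-sensitivity reading can be checked arithmetically. The sketch below treats the illustrative table values as additive per-feature effects (an assumption; a fitted model may include interactions) and compares, for a hypothetical hole with a narrow fairway and a complex green, the total added strokes and the share attributable to green complexity for each player type. The feature keys are hypothetical names.

```python
# Illustrative per-feature effects from the table, in strokes:
# (average player, low-handicap player). Additivity is an assumption
# of this sketch, not a property of any fitted model.
FEATURE_EFFECTS = {
    "long_hole": (0.35, 0.10),
    "narrow_fairway": (0.28, 0.05),
    "complex_green": (0.22, 0.18),
    "deep_rough": (0.40, 0.12),
}
COLUMN = {"average": 0, "low_handicap": 1}


def added_strokes(hole_features, player_type):
    """Sum the illustrative effects present on a hole for one player type."""
    col = COLUMN[player_type]
    return sum(FEATURE_EFFECTS[feature][col] for feature in hole_features)


def feature_share(feature, hole_features, player_type):
    """Fraction of the hole's added strokes attributable to one feature."""
    effect = FEATURE_EFFECTS[feature][COLUMN[player_type]]
    return effect / added_strokes(hole_features, player_type)


hole = ["narrow_fairway", "complex_green"]
for kind in COLUMN:
    print(kind, round(added_strokes(hole, kind), 2),
          round(feature_share("complex_green", hole, kind), 2))
```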

Translating quantified effects into practice and strategy requires explicit prioritization. Use the following operational rules, calibrated to estimated feature impact:

- Practice allocation: allocate time to the component with the highest modeled marginal strokes saved (e.g., approach and short game when green complexity explains >15% of variance).

- Pre‑round strategy: when wind exposure or narrow corridors are meaningful, favor conservative targets that minimize dispersion penalties.

- Shot selection heuristics: incorporate expected-strokes maps into decision trees; choose layups when expected strokes for aggressive play exceed those for conservative play by >0.15 strokes.

By integrating quantified course effects with player-specific response functions, coaches and players can set realistic goals, prioritize interventions, and make evidence‑based on‑course decisions that systematically reduce scoring variance.

Modeling Player Skill: Shot Dispersion, Club Selection, and Risk Preferences

Quantitative characterization of a player’s shot-making begins with a parametric representation of lateral and longitudinal variability. Using high-resolution tracking datasets, a bivariate dispersion model (e.g., elliptical Gaussian or kernel density estimate) can capture the **mean carry distance**, **standard deviation in carry**, and the **covariance** between lateral and distance errors. Table-driven summaries facilitate both interpretation and downstream simulation: they provide concise priors for optimization and are easily updated as new rounds are observed.
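A minimal sketch of the bivariate summary, assuming shots are recorded as (carry, lateral miss) pairs in yards: the sample means and the covariance matrix are the parameters of an elliptical Gaussian fit. The shot values are hypothetical, and a kernel density estimate would need a different approach.

```python
import math


def dispersion_summary(shots):
    """Bivariate summary of (carry_yd, lateral_yd) shots.

    Uses sample statistics (n - 1 denominator); the mean vector and the
    covariance matrix are the parameters of an elliptical Gaussian fit.
    """
    n = len(shots)
    if n < 2:
        raise ValueError("need at least two shots")
    mean_c = sum(c for c, _ in shots) / n
    mean_lat = sum(lat for _, lat in shots) / n
    var_c = sum((c - mean_c) ** 2 for c, _ in shots) / (n - 1)
    var_lat = sum((lat - mean_lat) ** 2 for _, lat in shots) / (n - 1)
    cov = sum((c - mean_c) * (lat - mean_lat) for c, lat in shots) / (n - 1)
    return {
        "mean_carry": mean_c,
        "carry_sd": math.sqrt(var_c),
        "mean_lateral": mean_lat,
        "lateral_sd": math.sqrt(var_lat),
        "covariance": cov,
        "correlation": cov / math.sqrt(var_c * var_lat),
    }


# Hypothetical driver shots: (carry in yards, lateral miss in yards, + = right).
shots = [(228, 5), (241, -12), (219, -4), (236, 10), (226, 1)]
summary = dispersion_summary(shots)
print({key: round(value, 2) for key, value in summary.items()})
```

Refitting after each round, or pooling with a prior, keeps these summaries current as new shots arrive.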

| Metric | Definition | Example |
|---|---|---|
| Mean carry | Average ball-flight distance (yd) | 230 |
| Carry SD | Standard deviation of carry (yd) | 18 |
| Lateral SD | Standard deviation perpendicular to the target line (yd) | 14 |

Translating dispersion into club choice requires an expectation-driven framework that accounts for both distance and outcome variability. Decision rules should optimize a target metric, commonly by maximizing **expected strokes gained** or, equivalently, minimizing expected score, conditional on lie, wind, and hazard geometry. Practical decision-support models incorporate:

- expected distance and dispersion per club,

- probability of finding preferred landing zone,

- penalty severity and recovery-cost distributions,

- player-specific execution probabilities under pressure.

These components permit dynamic selection strategies that change by hole segment and tournament context.
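The first three components can be combined into a simple expected-strokes comparison between clubs. This sketch assumes lateral misses are zero-mean Gaussian, ignores carry-distance error and wind, and uses placeholder expected-strokes values for landing inside or outside a target window; `ClubOption` and its fields are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class ClubOption:
    """Hypothetical per-club inputs; the expected-strokes values are placeholders."""
    name: str
    lateral_sd: float          # yards, lateral dispersion around the aim line
    strokes_if_in_zone: float  # expected strokes to hole out from the landing zone
    strokes_if_missed: float   # expected strokes after a miss (recovery cost included)


def prob_in_zone(lateral_sd, half_width):
    """P(|X| <= half_width) for X ~ Normal(0, lateral_sd^2)."""
    return math.erf(half_width / (lateral_sd * math.sqrt(2.0)))


def club_expected_strokes(club, half_width):
    """Blend the in-zone and missed outcomes by the probability of finding the zone."""
    p = prob_in_zone(club.lateral_sd, half_width)
    return p * club.strokes_if_in_zone + (1.0 - p) * club.strokes_if_missed


def best_club(clubs, half_width):
    """Choose the club with the lowest expected strokes for this landing zone."""
    return min(clubs, key=lambda club: club_expected_strokes(club, half_width))


clubs = [
    ClubOption("driver", lateral_sd=20.0, strokes_if_in_zone=2.90, strokes_if_missed=3.60),
    ClubOption("3-wood", lateral_sd=13.0, strokes_if_in_zone=3.05, strokes_if_missed=3.45),
]
for half_width in (15.0, 25.0):
    print(half_width, best_club(clubs, half_width).name)
```

With these placeholder numbers the 3-wood is preferred for a 30-yard-wide window and the driver for a 50-yard window, illustrating how the same dispersion data can support different choices by hole segment.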

Preference for risk is formalized through utility constructs that modulate raw expectation in decision making. A risk-neutral policy maximizes mean outcome, whereas risk-averse players may optimize a concave utility or adopt mean-variance trade-offs to reduce downside exposure (e.g., avoiding forced carries or penal edges). Empirically estimating a player’s risk parameter, via observed deviations from EV-maximizing choices or via stated-preference experiments, enables personalized recommendations such as when to adopt an aggressive line into a reachable green or when to prioritize positional safety.
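One way to estimate a risk parameter from observed choices (revealed preference) is sketched below. It assumes a mean-variance utility, −E[strokes] − λ·Var(strokes), and hypothetical decisions in which each option is summarized by its expected strokes and variance; a grid search returns the λ values consistent with the most observed choices. With real data, a probabilistic choice model (for example, a logit) would usually be preferable to this exact-agreement count.

```python
def utility(mean_strokes, var_strokes, lam):
    """Mean-variance utility on strokes (higher is better): -E[S] - lam * Var(S)."""
    return -mean_strokes - lam * var_strokes


def predicts_aggressive(decision, lam):
    """True if the aggressive option has the higher utility at this lambda."""
    return utility(*decision["aggressive"], lam) > utility(*decision["conservative"], lam)


def consistent_lambdas(decisions, grid):
    """Return the grid values that explain the most observed choices, and that count."""
    hits = [
        sum(predicts_aggressive(d, lam) == d["chose_aggressive"] for d in decisions)
        for lam in grid
    ]
    best = max(hits)
    return [lam for lam, h in zip(grid, hits) if h == best], best


# Hypothetical decisions: each option is (expected strokes, variance of strokes).
decisions = [
    {"conservative": (5.00, 0.30), "aggressive": (4.95, 0.95), "chose_aggressive": False},
    {"conservative": (4.40, 0.40), "aggressive": (4.20, 0.95), "chose_aggressive": True},
    {"conservative": (3.10, 0.20), "aggressive": (3.05, 0.60), "chose_aggressive": False},
]
grid = [i / 20 for i in range(21)]  # lambda from 0.00 to 1.00 in steps of 0.05
lams, explained = consistent_lambdas(decisions, grid)
print(explained, min(lams), max(lams))
```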

Integrating dispersion, club-level expectations, and risk preference yields a full probabilistic simulator for both pre-round planning and long-term skill allocation. Monte Carlo simulation and dynamic programming can identify the marginal value of reducing specific error components (e.g., decreasing lateral SD vs. increasing mean carry) and thereby prioritize practice interventions. For model robustness, employ Bayesian updating to refine parameters after each round, and report key diagnostics (calibration, Brier score, decision regret) so coaches and players can track improvement against **objective, risk-adjusted criteria**.
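Bayesian updating can be as simple as a conjugate Beta-binomial model for a rate such as up-and-down conversion. The sketch below assumes a hypothetical prior, Beta(8, 12), which is about 20 attempts' worth of belief centered on 40%, and three hypothetical rounds; each round's successes and failures are added to the Beta parameters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaBelief:
    """Beta(alpha, beta) belief about a success rate such as up-and-down %."""
    alpha: float
    beta: float

    def update(self, successes, attempts):
        """Conjugate Beta-binomial update after one round's results."""
        if not 0 <= successes <= attempts:
            raise ValueError("successes must lie between 0 and attempts")
        return BetaBelief(self.alpha + successes, self.beta + attempts - successes)

    @property
    def mean(self):
        """Posterior mean of the success rate."""
        return self.alpha / (self.alpha + self.beta)


# Hypothetical prior worth 20 attempts, centered on 40% conversion.
belief = BetaBelief(alpha=8, beta=12)
for made, tried in [(3, 9), (5, 8), (2, 7)]:  # three hypothetical rounds
    belief = belief.update(made, tried)
    print(round(belief.mean, 3))
```

The prior's weight controls how quickly a few rounds move the estimate; a weaker prior reacts faster but is noisier.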

Strategic Decision Framework for Risk-Reward Tradeoffs and Optimal Shot Selection

The decision architecture treats each shot as a probabilistic action that maps a chosen strategy to a distribution of terminal states on and around the hole. By framing shot selection in terms of expected strokes and the higher moments of the outcome distribution (notably variance and skew), practitioners can quantify when a lower-expectation but higher-variance play is justified. The core analytical device is an action-value function E[V(a | s)] that conditions on state s (lie, wind, angle, hole location) and returns both the mean score impact and a calibrated risk penalty reflecting the player’s risk preference and match context.

Operationalizing that function requires a concise set of inputs and transformation rules. Critical state variables include distance-to-target, surface firmness, prevailing wind vector, obstacle geometry, and the player’s empirical dispersion model for the chosen club. The decision engine translates these into:

- Predicted proximity distribution (meters/feet to hole)

- Probability of penalizing outcome (water/bunker/out-of-bounds)

- Recovery cost expectation (strokes-to-regain parity)

Tradeoff assessment proceeds by combining the value and risk outputs with an objective function U = E[−strokes] − λ·Var(strokes), where λ denotes the player’s risk-aversion coefficient. This yields clear policy contours: a low λ (aggressive preference) expands the set of states where high-variance plays (e.g., driver off the tee to carry a hazard) maximize U, while a high λ (conservative preference) contracts it toward safe-play corridors. The following compact decision taxonomy summarizes typical strategic classes and their operational signatures.
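The objective U can be evaluated directly for two candidate plays. The sketch below assumes hypothetical strokes-to-hole-out distributions for an iron and a driver off a par-4 tee and computes the break-even λ at which the plays tie: below it the higher-variance driver maximizes U, above it the iron does. This is the same condition as playing aggressively only when the EV gain exceeds λ·ΔVar.

```python
def mean_and_variance(dist):
    """Mean and variance of a strokes distribution given as {strokes: probability}."""
    mean = sum(s * p for s, p in dist.items())
    var = sum(p * (s - mean) ** 2 for s, p in dist.items())
    return mean, var


def risk_adjusted_utility(dist, lam):
    """U = E[-strokes] - lam * Var(strokes)."""
    mean, var = mean_and_variance(dist)
    return -mean - lam * var


def choose(options, lam):
    """Name of the option with the highest risk-adjusted utility."""
    return max(options, key=lambda name: risk_adjusted_utility(options[name], lam))


def break_even_lambda(safe, risky):
    """Lambda at which both options tie; assumes the risky option has more variance."""
    m_safe, v_safe = mean_and_variance(safe)
    m_risky, v_risky = mean_and_variance(risky)
    return (m_safe - m_risky) / (v_risky - v_safe)


# Hypothetical strokes-to-hole-out distributions from a par-4 tee.
OPTIONS = {
    "iron off tee": {4: 0.65, 5: 0.30, 6: 0.05},
    "driver over hazard": {3: 0.15, 4: 0.50, 5: 0.20, 6: 0.15},
}
lam_star = break_even_lambda(OPTIONS["iron off tee"], OPTIONS["driver over hazard"])
print(round(lam_star, 3), choose(OPTIONS, 0.0), choose(OPTIONS, 0.5))
```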

| Strategy | Expected Value (EV) | Variance | Typical Use Case |
|---|---|---|---|
| Conservative | Moderate | Low | Protecting a lead; penal holes |
| Balanced | High | Moderate | Normal play, mixed risk |
| Aggressive | Highest | High | Birdie needed; short par 5s |

Implementation requires iterative calibration: collect shot-level data, estimate the player-specific dispersion and recovery-cost functions, and update λ based on situational psychology (tournament vs. recreational, match play vs. stroke play). Practical heuristics derived from the model include: prefer conservative lines when the expected penalty cost (penalty probability times recovery cost) outweighs the expected gain from the aggressive line, and default to aggressive lines only when the marginal EV gain exceeds λ·ΔVar. Embedding these rules in pre-round checklists and on-course decision aids helps convert the theoretical framework into measurable performance improvements.

Metrics for Course-Management Performance and In-Play Decision Making

Contemporary analysis treats metrics not as isolated statistics but as an integrated information architecture that links measurable outcomes to in-play choices. In this framework, metrics serve four functional roles: establishing a performance baseline, describing situational state, estimating environmental effect, and quantifying decision quality.

- Baseline metrics – season or round averages used as priors;

- Situational metrics – shot- and hole-level measures that vary by context;

- Environmental metrics – wind, lie, and green-speed adjustments;

- Decision-quality metrics – expected-value and variance measures that evaluate choices.

Treating metrics as components of decision models makes them actionable in real time rather than merely descriptive after the fact.

At the player level, certain metrics are particularly useful for course-management optimization. Examples include Strokes Gained subcategories (off‑tee, approach, around‑green, putting), proximity to hole from varying distances, GIR%, and penalty rate. These inputs feed predictive models that convert shot outcomes into expected scores and risk profiles. The table below summarizes representative metrics, their predictive focus, and a direct in-play use case.

| Metric | Predictive Focus | In-Play Use |
|---|---|---|
| Strokes Gained: Approach | Proximity → score | Club selection to minimize expected strokes |
| Penalty Rate | Downside variance | Avoid aggressive lines on tight holes |
| Wind-Adjusted Expectancy | Shot carry & dispersion | Alter target zone and landing area |

Course-level and situational indicators complement player metrics by structuring the decision space around hole architecture and external conditions. Relevant constructs include a Risk‑Reward index for each hole (comparing upside in strokes saved vs. downside in penalty probability), a Decision‑Quality Index (DQI) that scores choices relative to modelled optimal play, and exposure maps that identify where small errors produce large score swings. Practically, these are implemented by computing risk‑adjusted expected value and the second moment (variance) for candidate shots; decisions then follow simple rules such as selecting the option with the highest risk‑adjusted EV or the lowest downside variance when protection is prioritized.
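The text leaves the DQI formula open; the sketch below assumes one simple, risk-neutral definition: the average expected strokes that a player's chosen options give up relative to the modelled best option. The decision log and expected-strokes values are hypothetical, and a protected variant could substitute risk-adjusted values for expected strokes.

```python
def strokes_lost(decision):
    """Expected strokes given up by the chosen option relative to the modelled best."""
    options = decision["options"]  # option name -> modelled expected strokes
    return options[decision["chosen"]] - min(options.values())


def decision_quality_index(decisions):
    """Mean expected strokes lost per decision; 0.0 means always modelled-optimal."""
    return sum(strokes_lost(d) for d in decisions) / len(decisions)


# Hypothetical decisions from one round, with modelled expected strokes.
round_log = [
    {"options": {"driver": 4.12, "3-wood": 4.05}, "chosen": "driver"},
    {"options": {"go for green": 4.70, "lay up": 4.82}, "chosen": "go for green"},
    {"options": {"flag": 3.10, "center of green": 3.02}, "chosen": "flag"},
]
print(round(decision_quality_index(round_log), 3))
```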

Translating metrics into on-course behavior requires operational protocols and rapid analytic feedback loops. Teams should adopt a short pre-shot checklist tied to metric thresholds (e.g., opt for conservative play when penalty probability > X% or when wind-adjusted dispersion exceeds the safe landing window). A minimal in-play workflow:

- Query current situational metrics (lie, wind, distance, hole state);

- Compute candidate-shot EV and downside risk;

- Select shot per policy (max EV or protected EV);

- Record outcome to update priors.

Embedding this loop in practice builds calibrated judgment and makes metrics a live instrument for superior course management and decision making.
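The four-step in-play workflow can be sketched as a small selection function plus an outcome log. The `Candidate` and `ShotLog` types, the 10% threshold used here in place of the unspecified X%, and all numbers are hypothetical; the point is the policy logic, in which "max_ev" minimizes expected strokes and "protected_ev" first excludes options with excessive penalty probability.

```python
from dataclasses import dataclass, field


@dataclass
class Candidate:
    name: str
    expected_strokes: float  # modelled expected strokes to hole out
    penalty_prob: float      # modelled probability of a penalty outcome


def select_shot(candidates, policy="max_ev", penalty_threshold=0.10):
    """Apply a selection policy to candidate shots.

    "max_ev" picks the lowest expected strokes. "protected_ev" first drops
    candidates whose penalty probability exceeds penalty_threshold; if every
    candidate exceeds it, all candidates are kept.
    """
    if policy == "max_ev":
        pool = candidates
    elif policy == "protected_ev":
        pool = [c for c in candidates if c.penalty_prob <= penalty_threshold] or candidates
    else:
        raise ValueError(f"unknown policy: {policy}")
    return min(pool, key=lambda c: c.expected_strokes)


@dataclass
class ShotLog:
    """Outcome records, kept so that priors can be updated later."""
    records: list = field(default_factory=list)

    def record(self, situation, chosen, strokes_taken):
        self.records.append({"situation": situation, "shot": chosen.name, "strokes": strokes_taken})


# One pass through the loop with hypothetical numbers.
situation = {"lie": "fairway", "wind_mph": 12, "distance_yd": 205, "hole_state": "par 5, second shot"}
candidates = [Candidate("carry the water", 3.85, 0.18), Candidate("lay up", 3.95, 0.02)]
log = ShotLog()
choice = select_shot(candidates, policy="protected_ev")
log.record(situation, choice, strokes_taken=4)
print(choice.name, select_shot(candidates).name)
```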

Statistical Methods for Estimation, Validation, and Predictive Assessment of Scoring Models

Contemporary estimation of scoring models begins with explicit specification of the data-generating process and proceeds through parametric and nonparametric approaches. Maximum likelihood estimation and generalized linear models remain workhorses for shot- and hole-level score prediction, while **Bayesian hierarchical models** provide a principled framework to pool information across players, rounds, and courses and to quantify uncertainty in player-level effects. Regularization techniques (Ridge, Lasso, and Elastic Net) are applied when high-dimensional feature sets (club-by-distance interactions, lie conditions, wind vectors) introduce multicollinearity or overfitting risk. Wherever possible, practitioners should anchor models in domain knowledge (e.g., strokes-gained principles) and treat statistical analysis as an iterative process of model refinement, informed by both global fit and local residual structure.

Robust validation is essential to move from explanatory fits to reliable predictions. Standard approaches include k-fold cross-validation and bootstrap resampling, but the temporal and hierarchical nature of golf data often calls for specialized strategies such as rolling-origin (time-series) validation and nested cross-validation for hyperparameter tuning. Model comparison should combine information criteria (AIC/BIC) with out-of-sample predictive performance to avoid favoring overly complex models. Typical validation checks include:

- Calibration plots (predicted vs observed strokes) to detect systematic bias;

- Residual diagnostics (heteroskedasticity, autocorrelation by round) to assess model assumptions;

- Player- and course-level holdouts to test generalizability when transferring models across competitive contexts.
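Rolling-origin validation can be implemented with a small split generator that never trains on rounds played after the forecast round. The sketch below assumes rounds are stored in date order and, as a usage example, scores a naive forecast (the mean of all earlier rounds) on hypothetical scores; any fitted model could replace the naive forecast.

```python
def rolling_origin_splits(n_rounds, initial_train, horizon=1, step=1):
    """Yield (train, test) index lists for rounds stored in date order.

    Each fold trains on every round before the forecast origin and tests on
    the next `horizon` rounds, so no later round leaks into training.
    """
    origin = initial_train
    while origin + horizon <= n_rounds:
        yield list(range(origin)), list(range(origin, origin + horizon))
        origin += step


# Hypothetical scores in date order, evaluated with a naive mean forecast.
scores = [78, 76, 80, 75, 77, 74]
errors = []
for train, test in rolling_origin_splits(len(scores), initial_train=3):
    forecast = sum(scores[i] for i in train) / len(train)
    errors.extend(abs(scores[i] - forecast) for i in test)
mae = sum(errors) / len(errors)
print(round(mae, 2))
```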

Predictive assessment relies on an ensemble of metrics that reflect both accuracy and decision relevance. Use point-error metrics (RMSE, MAE) for continuous score prediction, probabilistic scores (log loss, Brier score) when modeling event probabilities (e.g., making the cut), and ranking metrics (Spearman’s rho, Kendall’s tau) when relative ordering of players is the objective. A concise reference table aids selection:

| Metric | Use | Direction |
|---|---|---|
| RMSE | Point prediction error (strokes) | Lower better |
| Brier score | Probability calibration for binary events | Lower better |
| Calibration slope | Bias and over/under-confidence | Closer to 1 ideal |
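The metrics in the table are short computations. This sketch implements RMSE, MAE, the Brier score, and a calibration slope; the slope here is the ordinary least-squares slope of observed on predicted values, which suits continuous score predictions (for probability models the slope is usually estimated by logistic regression on the logit of the prediction instead). The example values are hypothetical.

```python
import math


def rmse(predicted, observed):
    """Root-mean-squared error, in the units of the prediction (e.g., strokes)."""
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(observed))


def mae(predicted, observed):
    """Mean absolute error."""
    return sum(abs(p - o) for p, o in zip(predicted, observed)) / len(observed)


def brier_score(probabilities, outcomes):
    """Mean squared difference between forecast probabilities and 0/1 outcomes."""
    return sum((p - y) ** 2 for p, y in zip(probabilities, outcomes)) / len(outcomes)


def calibration_slope(predicted, observed):
    """OLS slope of observed on predicted values.

    1.0 is ideal; a slope below 1 suggests predictions are too spread out
    (overconfident), above 1 that they are too compressed.
    """
    n = len(predicted)
    mean_p = sum(predicted) / n
    mean_o = sum(observed) / n
    sxy = sum((p - mean_p) * (o - mean_o) for p, o in zip(predicted, observed))
    sxx = sum((p - mean_p) ** 2 for p in predicted)
    return sxy / sxx


# Hypothetical predicted vs observed round scores, and cut probabilities.
pred, obs = [70.0, 72.0, 74.0], [69.0, 72.0, 75.0]
print(rmse(pred, obs), mae(pred, obs), calibration_slope(pred, obs))
print(brier_score([0.8, 0.3], [1, 0]))
```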

To translate statistical outputs into strategic guidance, integrate predictive models with decision-theoretic layers: expected-value-of-shot choices, Monte Carlo course simulations, and value-of-information analyses for practice allocation. Emphasize transparent uncertainty communication (posterior intervals, predictive envelopes, scenario bands) and produce compact, actionable summaries for coaches and players. Recommended reporting items include:

- Out-of-sample performance table (metrics + sample sizes);

- Calibration and residual plots annotated with course/round strata;

- Player-specific effect estimates with credible intervals and practical implications.

Practical Recommendations for Coaches and Players: Training Interventions and Tactical Adjustments

Adopt a data-first coaching paradigm that translates analytical outputs into targeted practice plans. Prioritize measurable key performance indicators (KPIs), such as proximity to hole, greens in regulation, and scramble percentage, when designing sessions. Use session objectives that explicitly map to these KPIs and allocate practice time according to marginal gains: invest disproportionately in areas where predicted score improvement per hour is greatest. Emphasize reproducible assessment protocols (standardized lie, wind, and target conditions) so longitudinal comparisons reflect true player development rather than measurement noise.
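Allocating time by marginal gains can be sketched as a greedy loop: each practice hour goes to the area whose next hour is predicted to save the most strokes. The sketch assumes hypothetical areas whose per-hour gain decays geometrically; with diminishing returns of this kind, the greedy rule maximizes the total modelled gain.

```python
import heapq


def allocate_practice(areas, total_hours):
    """Greedily assign whole hours to the area with the largest next-hour gain.

    areas maps name -> (strokes saved by the first hour, decay factor in (0, 1]).
    The k-th extra hour in an area saves gain * decay**k strokes.
    """
    plan = {name: 0 for name in areas}
    heap = [(-gain, name) for name, (gain, _) in areas.items()]  # max-heap via negation
    heapq.heapify(heap)
    for _ in range(total_hours):
        neg_gain, name = heapq.heappop(heap)
        plan[name] += 1
        decay = areas[name][1]
        heapq.heappush(heap, (neg_gain * decay, name))
    return plan


# Hypothetical per-hour gains (strokes per round) and decay factors.
areas = {"wedges": (0.10, 0.8), "putting": (0.06, 0.9), "driving": (0.04, 0.95)}
plan = allocate_practice(areas, total_hours=5)
print(plan)
```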

Construct tactical frameworks grounded in course and player analytics to reduce variance on competition days. Implement pre-round decision rules based on hole-by-hole expected-value calculations and a player’s shot-dispersion model. Recommended tactical adjustments include:

- Target-shift rules: bias aim points toward larger miss zones when dispersion increases (e.g., wind, fatigue).

- Lay-up thresholds: define explicit distance/score thresholds where a conservative play maximizes expected score.

- Short-game prioritization: increase green-side practice weight for players whose analytics show high penalty from missed GIRs.
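The target-shift rule can be derived from a dispersion model. Assuming Gaussian lateral dispersion, a corridor from −15 to +15 yards, and hypothetical miss costs (1.0 stroke for a hazard on the left, 0.3 for rough on the right), the sketch grid-searches the aim point that minimizes expected miss cost; the optimal aim moves toward the cheaper miss as dispersion grows.

```python
import math


def std_normal_cdf(z):
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def expected_miss_cost(aim, sd, left_edge, right_edge, cost_left, cost_right):
    """Expected extra strokes when lateral position ~ Normal(aim, sd^2)."""
    p_left = std_normal_cdf((left_edge - aim) / sd)          # finishes left of corridor
    p_right = 1.0 - std_normal_cdf((right_edge - aim) / sd)  # finishes right of corridor
    return p_left * cost_left + p_right * cost_right


def best_aim(sd, left_edge=-15.0, right_edge=15.0, cost_left=1.0, cost_right=0.3, step=0.5):
    """Grid-search the aim point (yards, + = right) that minimizes expected cost."""
    n_points = int(round((right_edge - left_edge) / step)) + 1
    candidates = [left_edge + i * step for i in range(n_points)]
    return min(
        candidates,
        key=lambda aim: expected_miss_cost(aim, sd, left_edge, right_edge, cost_left, cost_right),
    )


for sd in (8.0, 16.0):
    print(sd, best_aim(sd))
```

With equal miss costs the best aim is the corridor center; the asymmetry in costs is what drives the shift.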

Prescribe training interventions that integrate motor learning and ecological validity. Combine blocked and variable practice phases, using progressive contextual interference to promote transfer to competition. Incorporate pressure simulations (time constraints, scoring incentives) to build robustness under stress, and complement skill work with targeted physical conditioning that reduces performance degradation late in rounds. The table below pairs each diagnostic metric with a recommended intervention for rapid operational use.

| Metric | Diagnostic Threshold | Recommended Intervention |
|---|---|---|
| Proximity | > 35 ft avg | Deliberate wedge rangework |
| Scrambling | < 40% | Green-side bunker & chip circuits |
| Driving Dispersion | SD > 20 yd | Video-assisted swing stabilization |
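For rapid operational use, the table can be encoded as threshold rules. The profile keys in this sketch are hypothetical names; the thresholds and intervention labels follow the table.

```python
# Thresholds and interventions follow the table; the profile keys
# ("proximity_ft", etc.) are hypothetical names.
RULES = [
    ("proximity_ft", lambda value: value > 35, "Deliberate wedge rangework"),
    ("scrambling_pct", lambda value: value < 40, "Green-side bunker & chip circuits"),
    ("driving_dispersion_sd_yd", lambda value: value > 20, "Video-assisted swing stabilization"),
]


def recommend(profile):
    """Return the interventions whose diagnostic threshold the profile crosses."""
    return [
        intervention
        for key, crosses_threshold, intervention in RULES
        if key in profile and crosses_threshold(profile[key])
    ]


player = {"proximity_ft": 38.0, "scrambling_pct": 45.0, "driving_dispersion_sd_yd": 22.0}
print(recommend(player))
```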

Embed iterative monitoring and clear communication routines to ensure fidelity of implementation. Use short-cycle evaluation (microcycles of 2–4 weeks) to update priors, and present results in concise dashboards that highlight retained gains and emerging deficits. Foster a collaborative coach-player dialogue that frames tactical adherence as a measurable experiment: agree on decision rules, implement them in low-stakes events, and generalize only when empirical evidence supports the change. Set phased SMART targets tied to the KPIs used in practice so that tactical and technical adjustments remain aligned with realistic performance trajectories.

Q&A

The following Q&A accompanies the article “Analytical Frameworks for Golf Scoring and Performance.” Each question is followed by a focused, evidence‑based answer that synthesizes established metrics, practical measurement methods, and strategic implications for players, coaches, and researchers. References to background sources are noted in brackets.

1) What do we mean by an “analytical framework” for golf scoring and performance?

Answer: An analytical framework is an explicit, replicable set of concepts, metrics, data‑collection procedures, and statistical models that link observable course features and player actions to scoring outcomes. Such a framework organizes shot‑level data (e.g., club, lie, distance to target, shot outcome) and course attributes (hole length, hazard locations, green size and undulation, rough and recovery areas, prevailing wind) into models that estimate the contribution of different elements (long game, short game, putting, strategy) to total score and identify optimal decisions under uncertainty. Frameworks range from descriptive (score decomposition) to inferential (strokes‑gained, value‑of‑a‑shot) and prescriptive (decision models, expected‑value shot choice) approaches.
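Strokes-gained, one of the inferential approaches named above, reduces to arithmetic on a baseline table of expected strokes to hole out. The baseline values below are placeholders, not published benchmarks; the sketch computes per-shot strokes gained as E[start] − E[end] − 1 and sums the values by phase for one hypothetical hole.

```python
# Hypothetical baseline: expected strokes to hole out from each position
# (yards off the green, feet on it). Real baselines are estimated from large
# shot-level datasets; these placeholders only make the arithmetic visible.
BASELINE = {
    "tee 400 yd": 4.00,
    "fairway 150 yd": 2.90,
    "green 20 ft": 1.90,
    "green 3 ft": 1.05,
    "holed": 0.0,
}


def strokes_gained(start, end, penalty_strokes=0):
    """SG for one shot = E[start] - E[end] - 1 - any penalty strokes."""
    return BASELINE[start] - BASELINE[end] - 1 - penalty_strokes


# One hypothetical par 4 played in four strokes, tagged by phase.
shots = [
    ("off_tee", "tee 400 yd", "fairway 150 yd"),
    ("approach", "fairway 150 yd", "green 20 ft"),
    ("putting", "green 20 ft", "green 3 ft"),
    ("putting", "green 3 ft", "holed"),
]
by_phase = {}
for phase, start, end in shots:
    by_phase[phase] = by_phase.get(phase, 0.0) + strokes_gained(start, end)
print({phase: round(sg, 2) for phase, sg in by_phase.items()})
```

Because each shot's value telescopes, the phase totals always sum to the baseline from the tee minus the strokes actually taken, which is a useful check on any implementation.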

2) What are the principal, widely used performance metrics in modern analysis?

Answer: Key metrics include:

– Strokes‑gained (total and by phase: off‑the‑tee, approach, around the green, putting), which quantifies performance relative to a baseline population.

– Proximity to hole (average distance on approach and around‑the‑green), which proxies shot quality.

– Scrambling and sand save percentages for short game effectiveness.

– Shot value or “total shot value” measures that translate each shot into expected impact on score (as in Golfmetrics approaches) [1].

– Scorecard decomposition by hole/par type and by shot segment (drive, approach, chip, putt).

These metrics are complemented by course metrics (effective hole length, penalization density, green complexity) that modify expected outcomes.

3) How do we decompose scoring differences between player cohorts (e.g., tour professionals vs. amateurs)?

Answer: Decomposition is achieved by attributing per‑shot value across shot types and summing by phase. Shot‑value analyses of professional and amateur rounds indicate that long‑game shots (drives and approaches) account for the larger share of the scoring gap between these cohorts, with putting contributing a smaller share, although the relative importance can vary by skill band and course set‑up [1]. A thorough decomposition requires large samples of shot‑level data and careful baseline selection (peer group or course average) to avoid attribution bias.

4) Which modeling approaches are most appropriate for linking course characteristics to scoring?

Answer: Appropriate approaches include:

– Generalized linear mixed models (GLMMs) to account for repeated measures and random effects (player, course, hole).

– Hierarchical Bayesian models to incorporate prior knowledge and quantify uncertainty in shot outcomes.

– Expected value and decision‑theoretic models for risk‑reward tradeoffs on individual shots.

– Simulation and Monte Carlo methods to estimate round‑level distributions given stochastic shot outcomes.

– Machine‑learning models (e.g., gradient boosting) for predictive tasks, though these require careful interpretation when used for causal inference.

5) How should analysts model strategic shot selection and risk‑reward tradeoffs?

Answer: Strategic modeling combines estimates of the distribution of shot outcomes for different shot options with the scoring consequences (expected strokes). For each choice (conservative vs. aggressive line or club), compute the expected value (EV) of the resulting score distribution and consider variance and downside risk. Decision rules can incorporate utility functions (player‑specific risk preferences) or tournament context (match play vs. stroke play, weather, leaderboard). Robust estimation of shot outcome distributions requires past shot data from similar lies and conditions.

6) What data are required to implement these frameworks reliably?

Answer: Minimum useful data:

– Shot‑level records with club selection, lie, start/end coordinates, distance and dispersion to target, and outcome type (fairway, green, hazard, etc.).

– Hole and round context: hole par, yardage, hazards, green size/contour, tee placement, pin location, wind and weather, hole sequence.

– Player identifiers and handicap/tour status.

Tools for data collection include shot‑tracking systems, launch monitors, smart cameras (e.g., GC2‑type systems) and manual scorecard + GPS tagging for lower‑budget implementations [3]. More comprehensive analyses use shot‑tracking APIs from commercial providers or detailed manual coding.

7) How can course‑management skills be measured and quantified?

Answer: Course management can be operationalized via metrics that capture decision quality and execution relative to a model of optimal play:

– Deviation from EV‑optimal shot choice given lie and conditions (frequency and magnitude).

– “Opportunity management” metrics: percentage of holes where a player converts expected birdie opportunities or preserves pars under pressure.

– Expected strokes saved/lost relative to baseline due to strategic choices (e.g., laying up vs. going for the green).

– Situational statistics (performance on risk‑reward holes, recovery from hazards).

These metrics require an explicit decision model or a normative benchmark to evaluate choices [2].

8) How do measurement and model choices affect interpretability and prescriptive value?

Answer: Predictive models (black‑box machine learning) may yield strong accuracy but limited causal insight; they are useful for forecasting outcomes but less for prescribing strategy. Structural and decision‑theoretic models provide interpretable parameters (e.g., marginal value of 10 yards of approach distance) that support actionable recommendations. Analysts should report uncertainty, sensitivity to baseline selection, and ensure models are validated on out‑of‑sample rounds and varied course conditions.

9) What role do short game and putting play in scoring frameworks?

Answer: Short game and putting account for a large share of the strokes played in every round, and their contribution to the gap between skill levels varies with the population and baseline studied [1]. Frameworks therefore give them separate treatment: measure proximity after approach, up‑and‑down rates, putts per green in regulation, and strokes‑gained around the green and putting. Interventions can then be targeted (practice allocation, tactical adjustments) based on which element contributes most to expected strokes.

10) How should practitioners translate analytical findings into training and practice plans?

Answer: Use a priority matrix based on impact and current variance:

– Identify phases where expected strokes gained per unit of practice time is highest.

– Convert analytical priorities into specific drills (e.g., proximity drills, short‑game simulation, pressure putting).

– Use scorecard analysis to set measurable goals and track improvement over rounds; iterate frequently and validate with new shot data [2,3].

11) What practical tools and technologies are recommended for coaches and analysts?

Answer: Recommended technologies include shot‑tracking systems, launch monitors, smart cameras, and mobile apps that tag shots and map hole geometry. These provide the shot‑level granularity necessary for strokes‑gained and value‑of‑shot calculations. Low‑cost options (manual score + GPS tagging) can still produce useful insights if data collection is consistent and sufficiently detailed [3].

12) What are common pitfalls and limitations when applying these frameworks?

Answer: Common pitfalls include:

– Small sample sizes leading to noisy estimates.

– Ignoring context (weather, pin placement, tee box) that materially affects expected outcomes.

– Overfitting models to idiosyncratic course setups or a single player’s tendencies.

– Misinterpreting correlation as causation when assessing practice impact on scoring.

– Failure to account for player risk preferences and psychological factors that influence decision execution.

13) How can future research improve analytical frameworks for golf?

Answer: Future improvements include:

– Integrating richer environmental data (wind fields, green contours) and wearable telemetry to capture biomechanics alongside outcomes.

– Developing robust causal inference methods to assess intervention effects (practice programs, equipment changes).

– Building individualized decision‑theoretic models that combine skill distributions with psychological risk profiles.

– Standardizing shot‑level datasets and benchmarks to facilitate cross‑study comparisons.

14) Where can readers find foundational and practical resources to apply these methods?

Answer: Foundational research and practical guides include academic shot‑value work and accessible coaching resources. For example, Broadie’s Golfmetrics and shot‑value analyses provide rigorous decomposition methods and evidence on where score differences arise [1]. Practical scorecard analysis and translation into practice plans are discussed in coaching resources such as Target Centered Golf’s performance analysis guides [2], and reviews of performance analysis technologies summarize tools and techniques useful for implementation [3]. Basic descriptions of scoring formats and terminology are useful background for nontechnical readers [4].

References (selected):

– Broadie, M. “Assessing Golfer Performance Using Golfmetrics” (shot‑value and decomposition methods) [1].

– Target Centered Golf, “Performance Metrics: Scorecard Analysis” (practical game‑analysis and practice translation) [2].

– Elite Golf of Colorado, “9 Golf Performance Analysis Techniques…” (tools and techniques overview) [3].

– Introductory material on scoring formats and terminology [4].


Conclusion

This paper has proposed and illustrated analytical frameworks that connect course characteristics, player abilities, and in-round decision-making to observable scoring outcomes. By decomposing performance into situational shot-level choices, risk-reward tradeoffs, and measurable course-management metrics, the frameworks make explicit the pathways through which strategic behavior and inherent skill interact to produce score variance. Practically, these models enable coaches and players to quantify the expected value of alternative strategies, prioritize training interventions (e.g., proximity to hole vs. scrambling), and design course-specific game plans that align a player’s skill profile with prevailing hole and round conditions. Methodologically, the approach emphasizes transparent model structure, defensible assumptions about shot distributions and state transitions, and the use of robust, granular data to estimate parameters – an analytical rigor akin to empirical practices in other quantitative disciplines.

Limitations of the present work include dependence on data quality and representativeness, simplifying assumptions about player rationality and stochasticity, and potential unmodeled interactions between psychological factors and purely technical variables. Addressing these limitations will require richer longitudinal datasets, experimental or quasi-experimental validation of modeled interventions, and formal incorporation of time-varying and contextual moderators (e.g., wind, pressure moments). Future research should also evaluate how aggregated course-management metrics perform as predictors of long-term scoring improvement across skill levels, and how adaptive decision-support tools based on the frameworks can be integrated into coaching workflows and on-course strategy.

In closing, adopting structured analytical frameworks for golf scoring and performance permits a more systematic, evidence-based approach to both in-round strategy and long-term player development. When combined with high-quality data and iterative validation, these frameworks have the potential to translate nuanced course and player information into actionable insights that improve scoring outcomes while preserving the strategic richness of the game.

Analytical Frameworks for Golf Scoring and Performance

Key Performance Metrics Every Golfer Should Track

To design an analytical framework that improves golf scoring and performance, start by tracking the right metrics. Below are high-value KPIs (key performance indicators) that are closely associated with lower scores and smarter course management.

- Strokes Gained (Off-the-Tee, Approach, Around-the-Green, Putting) – measures strokes gained or lost relative to a baseline (often a TOUR or club average).

- Fairways Hit / Driving Accuracy – helps quantify off-the-tee risk vs reward.

- Greens in Regulation (GIR) – measures how often you reach scoring opportunities.

- Putts per Round and Putts per GIR – separates long-game from short-game performance.

- Scrambling / Up-and-down % – indicates resilience and short-game creativity after missed greens.

- Proximity to Hole (Approach Distance) – average distance from pin on approaches by club.

- Penalty Strokes / Lost Ball Rate – high-impact metric for risk management.

- Shot Dispersion / Accuracy – useful when paired with launch monitor data (carry, spin, lateral dispersion).

- Scoring Average / Par-Bogey Distribution – shows frequency of birdies, pars, bogeys, doubles.
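A minimal sketch of how several of these KPIs can be computed from hole-by-hole records, in Python. The `HoleResult` record and its field names are hypothetical; GIR is taken to mean reaching the green in par minus two strokes or fewer, and scrambling to mean making par or better after missing the green in regulation. The sample holes are made-up values.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HoleResult:
    """One hole of a scorecard (hypothetical record layout)."""
    par: int
    strokes: int
    putts: int
    gir: bool                    # on the green in (par - 2) strokes or fewer
    fairway_hit: Optional[bool]  # None on par 3s, where no fairway is targeted
    penalties: int = 0


def round_kpis(holes: list[HoleResult]) -> dict[str, float]:
    """Compute scorecard KPIs for one round."""
    gir_holes = [h for h in holes if h.gir]
    missed_greens = [h for h in holes if not h.gir]
    driving_holes = [h for h in holes if h.fairway_hit is not None]
    # Scrambling: par or better on holes where the green was missed in regulation.
    scrambles = [h for h in missed_greens if h.strokes <= h.par]
    return {
        "score": sum(h.strokes for h in holes),
        "gir_pct": 100 * len(gir_holes) / len(holes),
        "fairway_pct": (100 * sum(h.fairway_hit for h in driving_holes) / len(driving_holes)
                        if driving_holes else 0.0),
        "putts": sum(h.putts for h in holes),
        "putts_per_gir": (sum(h.putts for h in gir_holes) / len(gir_holes)
                          if gir_holes else 0.0),
        "scrambling_pct": (100 * len(scrambles) / len(missed_greens)
                           if missed_greens else 0.0),
        "penalties": sum(h.penalties for h in holes),
    }


# Four made-up holes to show the calculation.
sample = [
    HoleResult(par=4, strokes=4, putts=2, gir=True, fairway_hit=True),
    HoleResult(par=3, strokes=3, putts=1, gir=False, fairway_hit=None),
    HoleResult(par=5, strokes=6, putts=3, gir=True, fairway_hit=False),
    HoleResult(par=4, strokes=6, putts=2, gir=False, fairway_hit=False, penalties=1),
]
kpis = round_kpis(sample)
```

Accumulating these per-round dictionaries over many rounds gives the raw material for the averages and trends discussed below.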

Designing an Analytical Framework: From Data to Decisions

A repeatable framework converts raw data into actionable strategy. Use these steps to build a practical system that helps you make smarter shot selections and set realistic improvement goals.

1. Define Your Baseline and Goals

- Collect 10-20 rounds to establish reliable averages (scoring average, GIR, driving accuracy, etc.).

- Set short-term (6-8 weeks) and long-term (6-12 months) targets, e.g., lower scoring average by 1.5 strokes or increase GIR by 6%.

- Choose a baseline for strokes gained (local club average or a TOUR composite if you want an aggressive benchmark).
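The reason for collecting 10-20 rounds can be made concrete: the uncertainty of a scoring average shrinks with the square root of the number of rounds. The sketch below (Python, standard library only) computes a baseline mean and its standard error; the ten scores are hypothetical, and the 1.5-stroke target simply mirrors the example goal above.

```python
import statistics


def baseline(scores: list[float]) -> tuple[float, float]:
    """Return (mean score, standard error of the mean)."""
    if len(scores) < 2:
        raise ValueError("need at least two rounds to estimate variability")
    mean = statistics.fmean(scores)
    std_err = statistics.stdev(scores) / len(scores) ** 0.5
    return mean, std_err


scores = [88, 91, 85, 90, 87, 93, 86, 89, 90, 84]  # hypothetical rounds
mean, std_err = baseline(scores)
target = mean - 1.5  # short-term goal: lower scoring average by 1.5 strokes
```

With these made-up scores the standard error is a little under one stroke, so a 1.5-stroke drop is only modestly larger than the sampling noise in the baseline itself; more rounds tighten that estimate.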

2. Segment the Round into Decision Units

Treat each hole as a sequence of decisions (Tee → Approach → Around-the-Green → Putting). Track outcomes by segment so you can attribute strokes gained or lost to each part of your game.
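One way to implement the segmentation is a small rule that labels each shot with a strokes-gained category. The thresholds here are assumptions for illustration (in particular the 30-yard cutoff for Around-the-Green and the treatment of par-3 tee shots as approaches), not an official definition; adjust them to match whichever strokes-gained baseline you use.

```python
def classify_shot(shot_number: int, par: int, lie: str, yards_to_hole: float) -> str:
    """Assign a shot to a strokes-gained category.

    Assumed rules (illustrative only):
      - first shot on a par 4 or 5      -> Off-the-Tee
      - any shot played from the green  -> Putting
      - other shots within 30 yards     -> Around-the-Green
      - everything else (incl. par-3 tee shots) -> Approach
    """
    if shot_number == 1 and par >= 4:
        return "Off-the-Tee"
    if lie == "green":
        return "Putting"
    if yards_to_hole <= 30:
        return "Around-the-Green"
    return "Approach"


# A hypothetical par-4 hole: (shot number, lie, yards to hole).
hole = [(1, "tee", 410), (2, "fairway", 150), (3, "rough", 18), (4, "green", 4)]
categories = [classify_shot(n, 4, lie, d) for n, lie, d in hole]
# ['Off-the-Tee', 'Approach', 'Around-the-Green', 'Putting']
```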

3. Use Shot Value and Expected Value Models

Shot value charts estimate the expected strokes to hole out from various locations. Use these to decide between aggressive vs conservative lines and club choices. The expected value (EV) calculation should account for:

- Distance to hole and likely outcome (GIR probability).

- Hazards, rough, and recovery cost (penalty strokes or difficulty to get up-and-down).

- Your own historical performance from similar lies and distances.
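A shot value chart can be stored as a table of (distance, expected strokes) pairs per lie and interpolated between rows. The strokes gained for a single shot is then the expected strokes before the shot, minus the expected strokes after it, minus one for the stroke taken (and any penalty strokes). The baseline numbers below are invented placeholders, not published benchmarks; substitute a real baseline such as a club average or a TOUR composite.

```python
from bisect import bisect_left

# Hypothetical shot value chart: (yards to hole, expected strokes to hole out).
# Placeholder values for illustration only; replace with a real baseline.
BASELINE = {
    "fairway": [(20, 2.40), (50, 2.60), (100, 2.80), (150, 3.00), (200, 3.20)],
    "rough":   [(20, 2.55), (50, 2.75), (100, 3.00), (150, 3.25), (200, 3.45)],
}


def expected_strokes(lie: str, yards: float) -> float:
    """Expected strokes to hole out, linearly interpolated within the chart."""
    if lie == "holed":
        return 0.0
    table = BASELINE[lie]
    xs = [d for d, _ in table]
    if yards <= xs[0]:
        return table[0][1]       # clamp below the chart
    if yards >= xs[-1]:
        return table[-1][1]      # clamp above the chart
    i = bisect_left(xs, yards)
    (x0, y0), (x1, y1) = table[i - 1], table[i]
    return y0 + (y1 - y0) * (yards - x0) / (x1 - x0)


def strokes_gained(start: tuple[str, float], end: tuple[str, float], penalty: int = 0) -> float:
    """E(start) - E(end) - 1 stroke taken - any penalty strokes."""
    return expected_strokes(*start) - expected_strokes(*end) - 1 - penalty


# A 150-yard approach from the fairway that finishes in rough 30 yards out.
sg = strokes_gained(("fairway", 150), ("rough", 30))
```

The same lookup can score candidate targets before a shot: weighting the expected strokes of each likely finishing position by its probability, based on your own history from similar lies and distances, gives the EV of that option.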

Course Characteristics and Pre-Round Planning

Smart course management blends analytics with on-site observations. Before every round, evaluate the course features that will most affect scoring.

Course Factors to Map

- Hole-by-hole yardage and par – identify birdie holes and risk-reward par-4s.

- Fairway width and hazards – measure forgiveness; narrow fairways increase value of accuracy.

- Green size, slope, and speed – influences approach targets and putting strategy.

- Wind and elevation – frequently change club selection EVs.

- Course rating & slope – context for handicap and expected scoring difficulty.
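Course rating and slope enter handicap calculations through the World Handicap System course handicap formula, Course Handicap = Handicap Index × (Slope Rating ÷ 113) + (Course Rating − Par). A small Python version follows; the round-half-up rule is an assumption about the rounding convention, and the example inputs are hypothetical.

```python
import math


def course_handicap(handicap_index: float, slope_rating: float,
                    course_rating: float, par: int) -> int:
    """WHS course handicap: Index x (Slope / 113) + (Course Rating - Par).

    Rounded to the nearest whole number, with .5 rounded up (assumed convention).
    """
    raw = handicap_index * slope_rating / 113 + (course_rating - par)
    return math.floor(raw + 0.5)


ch = course_handicap(12.4, 131, 71.8, 72)
```

Course Rating minus Par on its own is a quick difficulty gauge, since the course rating is roughly the score a scratch golfer is expected to shoot from those tees.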

Pre-Round Checklist

- Review hole-by-hole plan: landing zones, bailout areas, preferred angles into greens.

- Decide on “go/no-go” zones for driver usage based on value charts and fairway width.

- Set realistic scoring goals (e.g., “play to bogey” on windy holes, push for birdie on short par-5s).

- Pack a yardage card or digital map with common misses and recovery options highlighted.
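For go/no-go driver zones, one rough way to link fairway width to your dispersion is to assume lateral misses are normally distributed around your aim line. Under that assumption (plus a straight fairway aimed at its center), the probability of finding the fairway follows from the normal CDF. The dispersion figures below are hypothetical; in practice they would come from launch monitor or shot-tracking data.

```python
import math


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def fairway_probability(width_yd: float, lateral_sd_yd: float, bias_yd: float = 0.0) -> float:
    """P(ball finishes within the fairway), assuming lateral misses are
    Normal(bias, sd) around an aim line through the fairway's center."""
    half = width_yd / 2.0
    upper = normal_cdf((half - bias_yd) / lateral_sd_yd)
    lower = normal_cdf((-half - bias_yd) / lateral_sd_yd)
    return upper - lower


# Hypothetical dispersions on a 30-yard-wide fairway.
p_driver = fairway_probability(30, lateral_sd_yd=20)   # about 0.55
p_3wood = fairway_probability(30, lateral_sd_yd=12)    # about 0.79
```

The probability alone is not a decision rule: combine it with the expected strokes from the fairway and from the likely misses before marking a hole as driver or no-driver.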

Shot-Level Decision Making: Rules and Heuristics

Analytics inform heuristics – simple rules you can use under pressure. These are practical, high-ROI decision rules to apply on course.

High-Value Heuristics

- When to Lay Up vs Go for It: Compare expected strokes for both strategies. If going for it adds substantial variance (e.g., a real chance of a double bogey) without a clear saving in expected strokes, prefer the conservative play unless you need a birdie.

- Driver vs 3-Wood Decision: Hit driver when it gives an expected advantage of more than 0.4 strokes over 3-wood (the threshold depends on the course and your dispersion).

- Approach Targeting: Aim at the side of the green that gives the highest makeable putt percentage, not always the pin.

- Putting Strategy: On fast greens, prefer lag putts to avoid 3-putts; inside 8-10 feet, attacking the hole yields higher birdie EV.

- Penalty Risk Tolerance: On high-penalty holes, reduce variance – play for par first, birdie second.
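The lay-up versus go-for-it heuristic can be written as a comparison of two hole-score distributions. In this sketch the distributions are hypothetical, and `min_edge` is an assumed "variance surcharge": the riskier option must save more than that many expected strokes before it is chosen, unless a birdie is required, in which case the option with the higher birdie-or-better probability wins. Setting `min_edge=0.4` gives roughly the Driver vs 3-Wood threshold above.

```python
from math import sqrt


def summarize(dist: dict[int, float]) -> tuple[float, float]:
    """Mean and standard deviation of a hole-score distribution {score: probability}."""
    if abs(sum(dist.values()) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    mean = sum(s * p for s, p in dist.items())
    var = sum(p * (s - mean) ** 2 for s, p in dist.items())
    return mean, sqrt(var)


def choose(aggressive: dict[int, float], conservative: dict[int, float], par: int,
           min_edge: float = 0.1, need_birdie: bool = False) -> str:
    """Pick a strategy; min_edge is an assumed surcharge for extra variance."""
    if need_birdie:
        # Only the chance of birdie or better matters.
        p_agg = sum(p for s, p in aggressive.items() if s < par)
        p_con = sum(p for s, p in conservative.items() if s < par)
        return "aggressive" if p_agg > p_con else "conservative"
    mean_agg, sd_agg = summarize(aggressive)
    mean_con, sd_con = summarize(conservative)
    edge = mean_con - mean_agg            # expected strokes saved by going for it
    required = min_edge if sd_agg > sd_con else 0.0
    return "aggressive" if edge > required else "conservative"


# Hypothetical par-5 second shot: go for the green vs lay up.
go = {4: 0.35, 5: 0.35, 6: 0.10, 7: 0.20}
lay_up = {4: 0.10, 5: 0.60, 6: 0.30}
decision = choose(go, lay_up, par=5)
```

With these made-up numbers going for it saves only 0.05 expected strokes while nearly doubling the standard deviation, so the conservative play is chosen; when a birdie is required, its 35% birdie chance beats the lay-up's 10%.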

Strokes Gained: Practical Use and Interpretation

Strokes Gained is the single most useful performance metric, but it must be used correctly. Follow these practical guidelines:

- Track by category: Off-the-Tee, Approach, Around-the-Green, Putting. This highlights your true strengths/weaknesses.

- Look for trends over more than 10 rounds; a single round's fluctuation is mostly noise.

- Use strokes gained to prioritize practice time: e.g., if Strokes Gained: Putting is -0.8/round, allocate more time to putting and green-speed practice.

- Compare strokes gained to course difficulty and playing conditions; windy or firm conditions will shift expected values.
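Following the guidance to read trends rather than single rounds, a simple approach is to average each strokes-gained category over a trailing window of rounds and flag the most negative one. The window of 10 and the sample rounds below are assumptions for illustration.

```python
from statistics import fmean

CATEGORIES = ("Off-the-Tee", "Approach", "Around-the-Green", "Putting")


def trailing_averages(rounds: list[dict[str, float]], window: int = 10) -> dict[str, float]:
    """Average strokes gained per category over the most recent `window` rounds.

    `rounds` is ordered oldest to newest.
    """
    recent = rounds[-window:]
    return {c: fmean([r[c] for r in recent]) for c in CATEGORIES}


def biggest_deficit(averages: dict[str, float]) -> str:
    """Category with the most negative average strokes gained."""
    return min(averages, key=averages.get)


# Twelve hypothetical rounds alternating between two patterns.
r1 = {"Off-the-Tee": 0.2, "Approach": -0.8, "Around-the-Green": 0.1, "Putting": -0.3}
r2 = {"Off-the-Tee": 0.0, "Approach": -0.4, "Around-the-Green": -0.1, "Putting": -0.5}
history = [r1, r2] * 6
averages = trailing_averages(history)
focus = biggest_deficit(averages)
```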

Tools, Tech, and Data Sources

Modern golfers have many options to collect the data required for analytical frameworks:

- Shot-tracking apps: Game Golf, Arccos, Shot Scope – automate shot logging and generate strokes gained metrics.

- Launch monitors: TrackMan, GCQuad, FlightScope – provide dispersion, spin, and launch angle data useful for club-by-club analytics.

- GPS & Yardage devices: for precise pre-round mapping and decision support.

- Excel/Google Sheets or Golf-Specific Dashboards: maintain a simple dashboard to display KPIs, trends, and heatmaps.

- Course Mapping Tools: Hole planners and aerial maps to define landing zones and trouble areas.

Benchmark Table: KPI Targets by Handicap (Simple Reference)

| Handicap Range | GIR % | Driving Accuracy % | Putts / Round |
|---|---|---|---|
| Scratch to 5 | 65%+ | 60%+ | 28-30 |
| 6 to 12 | 55-65% | 50-60% | 30-33 |
| 13 to 18 | 45-55% | 40-50% | 33-36 |
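The benchmark table can be encoded directly so a player's numbers are compared with the targets for their handicap band. This sketch treats the lower end of each GIR and driving-accuracy range, and the upper end of each putts range, as the target to beat; that reading of the table is an assumption, and fractional handicaps between bands (e.g., 5.4) fall into the next band up. The example inputs match the 12-handicap baseline used in the case study later in this article.

```python
# Targets transcribed from the benchmark table:
# (highest handicap in band, GIR % floor, driving accuracy % floor, putts/round ceiling)
BENCHMARKS = [
    (5, 65, 60, 30),
    (12, 55, 50, 33),
    (18, 45, 40, 36),
]


def benchmark_gaps(handicap: float, gir_pct: float, fairway_pct: float,
                   putts: float) -> dict[str, float]:
    """Positive values meet or beat the band's target; negative values fall short."""
    for max_hcp, gir_floor, fairway_floor, putts_ceiling in BENCHMARKS:
        if handicap <= max_hcp:
            return {
                "gir_pct": gir_pct - gir_floor,
                "fairway_pct": fairway_pct - fairway_floor,
                "putts": putts_ceiling - putts,  # fewer putts is better
            }
    raise ValueError("the table only covers handicaps up to 18")


gaps = benchmark_gaps(12, gir_pct=50, fairway_pct=48, putts=33)
```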

Benefits and Practical Tips

Top Benefits of a Data-Driven Approach

- Objective identification of strengths and weaknesses.

- Better allocation of practice time with measurable returns.

- Improved on-course decision making and risk management.

- Faster progress toward realistic scoring goals and handicap reduction.

Actionable Tips You Can Use Today

- Log every shot for at least 10 rounds – accuracy beats guesswork.

- Create a simple dashboard: scoring average, GIR, driving accuracy, putts/round, strokes gained categories.

- Before each shot ask: “What’s the worst result, and can I recover?” If the expected cost of that result outweighs what the aggressive shot gains, choose a safer option.

- Practice with purpose: use data to build skill sets that create strokes gained (e.g., 60% of practice on approach distances that show the biggest deficit).

- Carry a cheat sheet with your club distances and dispersion bands for quick on-course decisions.
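The "60% of practice on the biggest deficit" tip can be generalized to a proportional rule: give every category a minimum share, then split the remaining time in proportion to each category's strokes-gained deficit. The minimum share (`floor_share`) and the sample strokes-gained values are assumptions, and this is one possible allocation rule rather than a prescribed one.

```python
def allocate_practice(sg_by_category: dict[str, float], total_minutes: float,
                      floor_share: float = 0.1) -> dict[str, float]:
    """Give each category floor_share of the time, then split the rest in
    proportion to strokes-gained deficits (categories at or above zero get none of it)."""
    n = len(sg_by_category)
    base = floor_share * total_minutes
    remaining = total_minutes - base * n
    if remaining < 0:
        raise ValueError("floor_share too large for the number of categories")
    deficits = {c: max(0.0, -sg) for c, sg in sg_by_category.items()}
    total_deficit = sum(deficits.values())
    if total_deficit == 0:
        # No deficits anywhere: split evenly.
        return {c: total_minutes / n for c in sg_by_category}
    return {c: base + remaining * d / total_deficit for c, d in deficits.items()}


plan = allocate_practice(
    {"Off-the-Tee": 0.1, "Approach": -0.6, "Around-the-Green": 0.0, "Putting": -0.4},
    total_minutes=300,
)
```

For a hypothetical 300-minute week this gives 138 minutes to approach play, 102 to putting, and 30 each to the other two categories.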

Case Study: Turning Data into Lower Scores (Example)

Player: Mid-handicap golfer (12 handicap). Baseline metrics from 20 rounds:

- GIR: 50%

- Driving Accuracy: 48%

- Putts/round: 33

- Strokes Gained: Approach: -0.6, Putting: -0.4

Framework applied:

- Focused 6-week practice plan: 60% approach (mid-range wedge distances), 20% short game, 20% putting drills for lag control.

- Pre-round strategy: use 3-wood on narrow par-4 tee shots to increase fairways hit; prioritize hitting the short side of greens to reduce 3-putt risk.

- Tracked outcomes: after 8 rounds, GIR increased to 56%, Strokes Gained: Approach improved by +0.5, putting improved by +0.2, scoring average dropped by 1.7 strokes.

First-Hand Experience: How I Use Analytics on the Course

I keep a minimalist approach: track tee location, club used, landing zone, result (GIR/No GIR), and number of putts. That small dataset lets me compute a quick strokes gained snapshot and decide whether to hit driver or an iron on a tight tee shot. Over months, small adjustments in club selection and aiming points produced consistent sub-80 rounds.

Sample Weekly Practice Plan (Data-Led)

- Monday: Launch monitor session – measure 5 key clubs (driver, 3-wood, 7-iron, pitching wedge, sand wedge) dispersion & median carry.

- Wednesday: 60% approach range work – focus on 30-120 yards with target scoring zones; record proximity.

- Friday: Short game – 50% up-and-down scenarios from 10-30 yards.

- Weekend: Play a round with shot-tracking app; review stats post-round and adjust practice allocation next week.
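The Monday launch monitor session can feed the on-course cheat sheet directly: group shots by club, take the median carry, and summarize lateral dispersion. This sketch uses the mean and sample standard deviation of lateral offset (positive = right of target) as the dispersion band; the shot data and the ±1 standard deviation band are illustrative assumptions, and each club needs at least two shots for a standard deviation.

```python
from statistics import fmean, median, stdev


def club_summary(shots: list[tuple[str, float, float]]) -> dict[str, dict[str, float]]:
    """Summarize launch monitor shots given as (club, carry_yd, lateral_yd),
    where lateral is positive right of target and negative left."""
    by_club: dict[str, list[tuple[float, float]]] = {}
    for club, carry, lateral in shots:
        by_club.setdefault(club, []).append((carry, lateral))
    summary = {}
    for club, data in by_club.items():
        carries = [c for c, _ in data]
        laterals = [lat for _, lat in data]
        summary[club] = {
            "median_carry": median(carries),
            "lateral_mean": fmean(laterals),
            "lateral_sd": stdev(laterals),  # needs at least two shots per club
        }
    return summary


session = [
    ("7-iron", 150, -4), ("7-iron", 152, 2), ("7-iron", 148, 6),
    ("7-iron", 155, -2), ("7-iron", 149, 3),
    ("PW", 120, 1), ("PW", 118, -1), ("PW", 122, 0),
]
card = club_summary(session)
```

A ±1 standard deviation band around `lateral_mean` (here roughly 3 yards left to 5 yards right for the 7-iron) is one way to fill in the dispersion column of the cheat sheet.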

Common Pitfalls and How to Avoid Them

- Overfitting to small sample sizes: Don’t change your swing or strategy after one bad round; look for sustained trends.

- Ignoring context: Raw stats without course or weather context can mislead; normalize for conditions when possible.

- Neglecting mental factors: Data doesn’t remove pressure; practice decision-making under simulated stress.

- Paralysis by analysis: Keep your framework simple enough to be usable on the course.

Implementing Your Framework: Quick-Start Checklist

- Download a shot-tracking app or use a simple scorecard with shot notes.

- Collect 10 rounds of baseline data.

- Create a one-page dashboard of 4-6 KPIs and update weekly.

- Set one practice theme each week based on largest deficit.

- Apply one new on-course heuristic and measure its impact for four rounds.
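To judge whether a new heuristic actually helped after four rounds, compare the new scores against the baseline while accounting for round-to-round noise. A minimal option is Welch's t statistic, which does not assume equal variances; the scores below are hypothetical. If SciPy is available, `scipy.stats.ttest_ind(after, before, equal_var=False)` reports the same statistic along with a p-value.

```python
from math import sqrt
from statistics import fmean, variance


def welch_t(before: list[float], after: list[float]) -> float:
    """Welch's t statistic for mean(after) - mean(before); negative means lower scores after."""
    se = sqrt(variance(after) / len(after) + variance(before) / len(before))
    return (fmean(after) - fmean(before)) / se


baseline_scores = [88, 91, 85, 90, 87, 93, 86, 89, 90, 84]  # hypothetical
heuristic_scores = [86, 87, 85, 88]                          # hypothetical, four rounds
t = welch_t(baseline_scores, heuristic_scores)
```

Here the average score dropped by 1.8 strokes, yet t is only about -1.6; with samples this small, values well under 2 in absolute terms are suggestive rather than conclusive, so keep tracking before locking in the change.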

Further Reading and Next Steps

Explore shot-tracking platforms and strokes gained tutorials to deepen your understanding. If you want help building a tailored dashboard or interpreting your first 20 rounds, consider a short coaching consultation that focuses on data interpretation and on-course strategy rather than swing mechanics alone.