Precise measurement of both player capability and course challenge underpins fair competition, useful performance feedback, and strategic planning in golf. This article presents a unified analytic approach that combines statistical modeling, course-rating inputs, and performance indicators to deliver handicaps and course assessments that are more transparent, stable, and decision-ready. By viewing submitted scores as outcomes of an unobserved ability interacting with measurable course features and random round-to-round noise, the approach shifts from single-number handicaps toward probabilistic forecasts of expected scoring that acknowledge systematic influences and idiosyncratic variation.

The framework described here brings together: (a) hierarchical Bayesian and mixed-effects estimators that share information across players and temper uncertainty when samples are small; (b) explicit modeling of course-rating attributes (including slope-like measures), hole pars, and environmental covariates to standardize playing conditions; and (c) refined performance indicators – such as strokes-gained decompositions, variance component analysis, and reliability indices – to reflect multi-domain skill (tee-to-green, short game, putting). Priority is given to calibration and validation (nested cross-validation, posterior predictive checks, fairness diagnostics) so outputs are robust across ability ranges and course setups. Typical outputs include handicap estimates with credible intervals, condition-adjusted course ratings, and operational artifacts for tournament eligibility, tee placement, and player-growth prioritization. Collectively, these elements strengthen relative skill comparisons and provide actionable guidance for players, coaches, and administrators.

Foundations for Modeling Handicaps and Comparing Skill

Modern approaches conceptualize each score as the visible expression of a latent skill level interacting with course-specific difficulty factors. The theoretical toolkit draws on latent-variable models, hierarchical Bayesian constructs, and generalized linear mixed models to allocate variation among player form, round conditions, and recording noise. Explicitly accommodating heteroskedastic errors (when round variance depends on weather or setup) and temporal structure (autocorrelation across consecutive rounds) produces more reliable estimates of underlying ability than naïve rolling averages. Probabilistic models also provide principled uncertainty quantification, enabling handicaps to be reported as intervals or predictive distributions rather than single-point summaries.

Credible inference requires clear statements of assumptions and routine diagnostics. Principal considerations include:

- Conditional exchangeability – rounds are comparable only after conditioning on relevant covariates (tee, course rating, slope, weather).

- Recording error – modeling score entry mistakes and non-random missing rounds to avoid biased estimates.

- Nonstationarity – allowing for gradual improvement/decline and short-term swings in form.

- External anchoring – tying course-difficulty parameters to established rating systems to limit systematic drift.

In practice, analysts should routinely run posterior predictive checks and cross-validation to flag violated assumptions and to compare alternative model structures.

To make model outputs usable for players and committees, translate probabilistic quantities into familiar, interpretable metrics. Recommended user-facing constructs include expected-score adjustments, strokes-gained analogues, and match-play win probabilities. The mapping below links technical components to practical outputs:

| Model Element | Representative Input | User-Facing Output |

|---|---|---|

| Latent player ability | Average strokes per round | Handicap posterior mean ± credible interval |

| Course effect | Slope, hole pars | Course-adjusted expected score |

| Round-level variability | Weather, tee selection | Uncertainty term for predictions |

These outputs support individualized coaching feedback and system-level choices like fair tee configuration or slope recalibration.

Integrating ranking methods with the handicap model improves pairings and leaderboards. Elo-style updates, Bayesian ranking, and probabilistic forecasting can convert continuous ability posteriors into match probabilities that respect course and environmental heterogeneity. Practical implementations should draw on multiple data streams – from shot-tracking devices to community-sourced notes on local conditions – to enrich covariates and reduce omitted-variable bias. The theoretical architecture thus provides a transparent, testable route from raw scores to policy-relevant recommendations for handicapping and course management.
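As a minimal sketch of how continuous ability estimates might be turned into a match probability, the snippet below assumes each player's predicted net score on a given setup is summarized by a normal distribution and that the two players' scores are independent. The function name `win_probability` and all numbers are illustrative assumptions, not part of any official system.

```python
import math

def win_probability(mean_a, sd_a, mean_b, sd_b):
    """Probability that player A posts a lower net score than player B.

    Assumes independent normal predictive distributions for each
    player's net score (an illustrative simplification).
    """
    diff_mean = mean_b - mean_a              # positive when A is expected to score lower
    diff_sd = math.sqrt(sd_a**2 + sd_b**2)   # SD of the difference of two independent normals
    z = diff_mean / diff_sd
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))  # standard normal CDF at z

# Hypothetical predictive summaries for one course setup
p = win_probability(mean_a=72.0, sd_a=3.0, mean_b=74.0, sd_b=4.0)
print(f"P(A beats B) = {p:.3f}")
```

Because the probability depends on both the gap in expected scores and the combined uncertainty, two players with the same mean gap can have quite different win odds on a high-variance setup.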

Incorporating Course-Rating Metrics into Predictive Models

To ensure handicaps reflect both player skill and the context in which strokes were recorded, course-rating attributes must be converted into model-ready covariates. Typical inputs include USGA Course Rating, slope, effective playing length (adjusted for elevation and prevailing wind), and hole-level difficulty indices. Treating these attributes as fixed and/or random effects inside a single hierarchical model enables separation of player effects from environmentally induced score shifts while maintaining interpretability for coaching or rating adjustments.

Careful predictor selection and hierarchical parameterization are central to dependable forecasts. Key predictors often include:

- Course Rating – baseline stroke expectation for a scratch player

- Slope – relative impact on higher-handicap players

- Effective Playing Length – length plus elevation and wind adjustments

- Hole dispersion – variability among holes within a course

Feature engineering converts raw ratings into informative inputs: interactions between Slope and handicap band, length-adjusted par deviations, and weather-modified difficulty multipliers. The table below summarizes variable roles and common measurement ranges for a mixed-effects implementation:

| Variable | Purpose | Typical Range |

|---|---|---|

| Course Rating | Baseline difficulty (fixed) | 65-78 |

| Slope | Relative sensitivity (interaction) | 55-155 |

| Wind index | Transient environmental factor | 0-1 (scaled) |

Validation must address discrimination and calibration: use nested cross-validation with course-stratified folds to prevent leakage, check that predicted stroke distributions align with observed outcomes, and compute skill-transfer metrics that quantify how much course variation the model explains. For operational handicaps, produce adjusted handicap differentials by combining model-estimated course effects with score residuals and present these adjustments with uncertainty bounds so committees and players can make informed decisions. Document preprocessing steps, rating data sources, and explicit model priors to ensure reproducibility and defensibility.
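One way to read "course-stratified folds" is that every round from a given course stays in the same fold, so a model is never validated on a course it also trained on. The sketch below shows that grouping with only the standard library; the helper name `course_grouped_folds` and the toy course labels are assumptions made for illustration.

```python
from collections import defaultdict

def course_grouped_folds(course_ids, n_folds):
    """Assign round indices to folds so each course lands in exactly one fold."""
    rounds_by_course = defaultdict(list)
    for idx, course in enumerate(course_ids):
        rounds_by_course[course].append(idx)

    folds = [[] for _ in range(n_folds)]
    # Place the largest courses first, always into the currently smallest fold,
    # which keeps fold sizes roughly balanced.
    ordered = sorted(rounds_by_course, key=lambda c: (-len(rounds_by_course[c]), c))
    for course in ordered:
        smallest = min(range(n_folds), key=lambda f: len(folds[f]))
        folds[smallest].extend(rounds_by_course[course])
    return folds

# Hypothetical course label for each recorded round
courses = ["A", "A", "B", "C", "B", "A", "D", "C"]
folds = course_grouped_folds(courses, n_folds=2)
for k, fold in enumerate(folds):
    print(k, sorted(fold), sorted({courses[i] for i in fold}))
```

In a nested scheme, the same grouping would be applied again inside each training split when tuning hyperparameters.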

Measuring Player Variability through Variance Decomposition and Advanced Methods

Analyses that use hierarchical and mixed-effects frameworks can apportion scoring variability across nested scales: shots within holes, holes within rounds, rounds within players, and players within populations. Modeling player ability as a random effect and treating course factors as fixed or random (depending on design) supports estimation of between-player and within-player variance even with uneven sampling. Including shot-level covariates (lie, club used, wind, pin position) reduces residual variance and reveals distinct skill axes – for example, long-game accuracy versus putting efficiency.

Error decomposition combines variance-component estimation, GLMMs, and Bayesian hierarchical inference to allocate uncertainty into meaningful parts. Classical ANOVA can give an initial view; REML and MCMC refine estimates and provide uncertainty intervals. Diagnostics – intraclass correlation coefficients (ICC), posterior predictive checks, and heteroskedasticity tests – help confirm the model is capturing genuine heterogeneity rather than noise.

Turning decomposition into practical guidance requires expressing results as reliability measures and specific intervention targets. Useful deliverables include credible intervals for a player’s true-score handicap, percentage of score variance attributable to modifiable elements, and predictive distributions for expected performance on particular setups. Core outputs for coaches and players might be:

- Reliability score – probability that an observed change indicates real improvement

- Variance breakdown – share of variance from tee shots, approaches, and putting

- Practice action map – prioritized training areas derived from component effect sizes

These outputs help optimize practice planning and support course selection that aligns with a player’s data-driven strengths and weaknesses.

An illustrative decomposition from a sample mixed-effects fit (values are demonstrative) is shown below to aid interpretation:

| Source | Percent of Variance | Std. Dev. (strokes) |

|---|---|---|

| Between players (skill) | 55% | 2.1 |

| Course/setup | 20% | 1.3 |

| Within player (round-to-round) | 15% | 1.1 |

| Unexplained / stochastic | 10% | 0.9 |

Interpretation: when between-player variance dominates, handicaps are relatively stable and can be updated with smaller sample sizes; when within-player variation or stochastic components are significant, handicaps should be smoothed and uncertainty widened. This quantitative lens informs both automatic handicap updates and individualized practice recommendations.
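Variance shares are computed from variances (squared standard deviations), not from the standard deviations themselves. The sketch below makes that arithmetic explicit and reports the between-player share as an intraclass correlation; the component names and values are hypothetical and chosen only to keep the numbers easy to check.

```python
def variance_shares(std_devs):
    """Convert component standard deviations (in strokes) into shares of total variance."""
    variances = {name: sd**2 for name, sd in std_devs.items()}
    total = sum(variances.values())
    return {name: var / total for name, var in variances.items()}

# Hypothetical component SDs from a fitted mixed-effects model
components = {
    "between_players": 2.0,
    "course_setup": 1.0,
    "within_player": 1.0,
    "residual": 1.0,
}
shares = variance_shares(components)
icc_player = shares["between_players"]  # fraction of score variance attributable to stable skill

for name, share in shares.items():
    print(f"{name:16s} {share:.1%}")
print(f"player ICC = {icc_player:.3f}")
```

A player-level ICC near 1 suggests that a few rounds already identify ability well, while a low ICC argues for heavier smoothing and wider reported intervals.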

Practical Methods to Adjust Handicaps for Course Conditions and Time Effects

Framing: Think of adjustments not as ad-hoc tweaks but as calibrated corrections that align a player’s baseline ability with temporary externalities (weather, turf moisture, tee placement) and temporal trends (seasonal form, recovery from injury). Methodologically, this requires isolating durable ability signals from transient noise introduced by short-term course state or cyclical player performance, and recording the provenance of each correction for clarity.

Operational suggestions: Adopt a compact set of standardized modifiers, each with defined triggers and recommended stroke values. Prioritize objective measures (measured green speed, wind readings, rainfall totals) over subjective impressions. Commonly useful modifiers include:

- Surface moisture: saturated fairways/greens → +1 to +2 strokes

- Green roll and uniformity: extremely slow or inconsistent greens → +1 stroke

- Wind and visibility: sustained strong wind or poor visibility → +1 to +3 strokes

- Temporary tee/rough changes: punitive temporary tees or deep rough → +1 stroke

Fast-adjust grid: Use the following baseline mapping for rapid application and recalibrate to local experience:

| Condition | Suggested Adjustment |

|---|---|

| Heavy rain / waterlogged fairways | +2 |

| Firm, fast links-style turf | -1 |

| Consistent strong wind (>20 mph) | +2 to +3 |

| Major temporary hole change (construction) | +1 |

Smoothing and governance: To limit volatility and preserve competitive fairness, cap per-round adjustments (for example, ±3 strokes) and apply exponential smoothing when folding adjusted rounds into official differentials. Keep a running ledger of adjusted rounds and review outcomes quarterly; recalibrate if adjustments consistently under- or over-predict scoring shifts. Communicate changes transparently to competitors and committee members, and favor objective triggers to reduce disputes.
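The capping and smoothing steps can be sketched in a few lines. This example assumes that a positive condition adjustment means the course played harder than rated, so it is subtracted from the raw differential; that sign convention, the smoothing weight `alpha`, and the function names are illustrative assumptions rather than official rules.

```python
def capped_adjustment(raw_adjustment, cap=3.0):
    """Limit a condition adjustment to the range [-cap, +cap] strokes."""
    return max(-cap, min(cap, raw_adjustment))

def smooth_differentials(differentials, alpha=0.5):
    """Exponentially smooth a chronological list of differentials."""
    smoothed = []
    for d in differentials:
        if not smoothed:
            smoothed.append(d)  # the first value seeds the running average
        else:
            smoothed.append(alpha * d + (1 - alpha) * smoothed[-1])
    return smoothed

# Hypothetical (raw differential, condition adjustment) pairs, oldest first
rounds = [(14.0, 2.0), (12.5, 0.0), (18.0, 5.0)]
adjusted = [diff - capped_adjustment(adj) for diff, adj in rounds]
smoothed = smooth_differentials(adjusted, alpha=0.5)
print(adjusted)   # [12.0, 12.5, 15.0]
print(smoothed)   # [12.0, 12.25, 13.625]
```

The third round shows the cap at work: a proposed 5-stroke correction is limited to 3, and smoothing then tempers how far that single round moves the running value.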

Validation and Cross‑Course Calibration for Reliable Handicap Comparisons

Evaluating handicap comparability robustly requires stratifying observations by player and by course so that course-specific biases can be disentangled from true ability differences. Use empirical holdout sets (from separate seasons or predefined date ranges) to estimate generalization error, and leverage matched analyses of itinerant players who regularly play several venues to infer cross-course offsets directly. Practically, estimate a global handicap variance component and course fixed effects within a mixed model, then inspect residuals for heteroskedasticity and time trends. Statistical rigor – correcting for multiple testing, reporting confidence intervals, and transparently handling outliers – preserves validity.

Recommended validation tools combine resampling and model-based techniques to catch distinct failure modes:

- k-fold cross-validation stratified by player and course to estimate predictive performance without leakage;

- Bootstrap methods to produce robust standard errors for course offsets;

- Matched‑pair analysis of traveling players to compute empirical course differentials;

- Hierarchical/Bayesian calibration to borrow strength for sparsely sampled courses while retaining local peculiarities.

Summarize diagnostics in compact tables that drive recalibration decisions. An example diagnostic snapshot (table below) shows per-course offsets and residual variability; establish operational thresholds (e.g., absolute offset above 0.75 strokes sustained over 8+ weeks) to trigger review and recalibration. Ensure governance procedures prevent recalibration from disproportionately affecting particular subgroups (novice versus elite players) and publish calibration reports with effect sizes and uncertainty. Automate periodic re-estimation pipelines, maintain control charts for drift detection, and archive reproducible artifacts so recalibrations are auditable. Continuous validation should be built into the handicap lifecycle rather than treated as a one-off task.

| Course | Calibration Offset | Residual SD | Recommended action |

|---|---|---|---|

| Links North | +0.7 | 1.1 | Monitor |

| Parklands West | -1.0 | 0.9 | Recalibrate |

| Pine Ridge | +0.1 | 1.0 | No change |

Operationalization requires clear thresholds, scheduled rechecks, and stakeholder communication so that course‑level changes are defensible and consistent.
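A threshold rule of the kind described above (an offset beyond 0.75 strokes sustained for at least 8 weeks) can be checked mechanically. The sketch below additionally assumes the offset must keep the same sign throughout the run, which is one reasonable reading rather than a stated requirement; `needs_review` is a hypothetical helper name.

```python
def needs_review(weekly_offsets, threshold=0.75, min_weeks=8):
    """Return True if the offset stays beyond the threshold, with a
    consistent sign, for at least `min_weeks` consecutive weeks."""
    run_length = 0
    run_sign = 0
    for offset in weekly_offsets:
        # +1 above the threshold, -1 below its negative, 0 inside the band
        sign = (offset > threshold) - (offset < -threshold)
        if sign != 0 and sign == run_sign:
            run_length += 1
        elif sign != 0:
            run_sign, run_length = sign, 1   # a new run starts
        else:
            run_sign, run_length = 0, 0      # back inside the band, reset
        if run_length >= min_weeks:
            return True
    return False

# Hypothetical weekly calibration offsets (strokes) for two courses
print(needs_review([-1.0] * 8))    # True
print(needs_review([0.7] * 12))    # False
```

Requiring a sustained run rather than a single exceedance keeps one unusual week of weather from triggering a full recalibration.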

Decision‑Support Systems for Strategy and Applying Handicap Insights

Decision-support platforms that use handicap-aware analytics combine individual metrics (handicap index, strokes-gained by phase, consistency metrics) with course-level profiles (rating, slope, hole-by-hole risk). Such systems translate probabilistic shot-outcome models into tactical suggestions – for example, advising conservative play on high-variance holes where a player’s dispersion exceeds the hole’s tolerance. Architecturally, these tools typically use stochastic simulation, Bayesian updating of ability estimates, and constrained optimization to recommend shot selection, tee choice, and club selection consistent with a player’s demonstrated strengths.

Key design principles are transparency, simplicity, and adaptability. Core modules should include:

- Adaptive player models that update with each new round

- Course-context engines mapping rating and slope to hole risk

- Scenario simulators for practice and match-play contingencies

- Visual dashboards showing expected strokes, variance, and confidence bounds

Allow users or coaches to tune objective weights (e.g., minimize variance versus pursue birdie chances) so the tool supports varying competitive goals.

Implementation follows a reproducible pipeline: ingest scorecards and shot-tracking data, normalize scores using rating conventions, fit mixed-effects models to recover player-level effects, and run Monte Carlo simulations to produce strategic suggestions. The sample output below – summarized for a 9-hole loop – is illustrative and designed for quick interpretation:

| Hole Type | Suggested Tactic | Estimated Strokes Saved | Confidence |

|---|---|---|---|

| Par‑3 (water hazard) | Club up and aim for center | 0.30 | High |

| Driver‑dominated hole | Play a controlled tee shot for position | 0.25 | Medium |

| Risk‑reward tee | Only attack when positive EV | 0.40 | Low |
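An "estimated strokes saved" figure like those in the table can come from comparing simulated expected scores under two tactics. The sketch below draws hole scores from hand-specified outcome distributions; the probabilities are invented for illustration, and a real system would derive them from a fitted shot-outcome model.

```python
import random

def simulate_expected_strokes(outcomes, n_sims=20000, seed=0):
    """Monte Carlo estimate of expected strokes on a hole for one tactic.

    `outcomes` is a list of (strokes, probability) pairs.
    """
    rng = random.Random(seed)  # local generator keeps runs reproducible
    strokes = [s for s, _ in outcomes]
    weights = [p for _, p in outcomes]
    draws = rng.choices(strokes, weights=weights, k=n_sims)
    return sum(draws) / n_sims

# Hypothetical score distributions on a par-3 over water
aggressive = [(2, 0.15), (3, 0.45), (4, 0.20), (5, 0.20)]
conservative = [(2, 0.05), (3, 0.65), (4, 0.25), (5, 0.05)]

ev_aggressive = simulate_expected_strokes(aggressive, seed=1)
ev_conservative = simulate_expected_strokes(conservative, seed=2)
strokes_saved = ev_aggressive - ev_conservative
print(f"aggressive {ev_aggressive:.2f}, conservative {ev_conservative:.2f}, "
      f"saved {strokes_saved:.2f}")
```

With these inputs the exact expectations are 3.45 and 3.30 strokes, so the simulated saving should land close to 0.15; the spread of the simulated draws is what a tool could use to set the confidence label.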

Tool evaluation should measure both statistical and human-centered KPIs: calibration of predicted versus actual strokes, measurable handicap improvement over defined intervals, and user satisfaction/trust scores. Regular audits, transparent diagnostics, and coach-in-the-loop review are essential to keep recommendations aligned with real-world play and ethical coaching standards.

Policy Considerations and Best Practices for Handicap Governance

Governance must articulate the objectives linking handicap computation to fairness, transparency, and inclusiveness. Borrowing from public-administration practice, an effective governance model defines roles, decision rights, and escalation routes so that algorithm or rating changes are governed interventions rather than ad-hoc edits. Maintaining clear documentation and a change history builds trust and provides evidence for audits or external review.

Putting governance into practice requires balanced procedures that protect rigor while preserving playability. Key elements include:

- Standardized methods for aggregating scores and applying course-type adjustments so comparisons are consistent across venues.

- Regular review cycles (data validation, error correction, model recalibration) with published schedules.

- Stakeholder engagement channels (players, clubs, raters) for feedback and appeals.

- Data integrity controls that ensure accurate score capture, tamper evidence, and provenance tracking for handicap inputs.

Continuous improvement means embedding iterative monitoring and metrics-driven refinement into governance. The table below assigns typical governance tasks, owners, cadence, and core metrics:

| Task | Responsible | Frequency | Key Metric |

|---|---|---|---|

| Methodology review | Handicap Committee | Annual | Bias drift (%) |

| Data quality audit | Data/IT Officer | Quarterly | Missing submission rate |

| Stakeholder survey | Player Liaison | Semi‑annual | Satisfaction index |

Successful rollout depends on capacity-building and open evaluation: training for raters and administrators, published change logs, and accessible summaries of analytic methods. An appeals mechanism plus small‑sample statistical checks protect fairness for atypical performances. In summary: adopt evidence-based policies, require regular audits, and institutionalize feedback loops so the handicap system evolves with player behavior and course conditions.

Q&A

Q1. Why develop an analytic framework for golf handicaps and course assessment?

A1. To create a reproducible, principled system that estimates player ability and quantifies course difficulty so that competitions are fair, cross-course comparisons are meaningful, and players/organizers can make data-driven tactical and administrative decisions. The framework reduces bias, quantifies uncertainty, and increases transparency by combining statistical models with course-rating inputs and performance indicators.

Q2. What are the essential elements of the framework?

A2. The main elements are: (1) structured data (round- and hole-level scores with contextual covariates), (2) a statistical model to disentangle player ability from course and noise, (3) course-rating variables (length, par, slope, green speed, hazards, conditioning), (4) performance metrics (handicap index, strokes-gained, consistency), and (5) validation/calibration procedures (cross-validation, posterior predictive checks).

Q3. What data should be collected?

A3. Core data: player identifiers, round totals, hole scores when available, course and hole metadata, tee box used, official course rating and slope, date/time, weather observations, and format indicators (match vs stroke play). Shot-level tracking (tee-to-green data) improves precision but is not strictly required.

Q4. Which modeling approaches fit best?

A4. Multilevel hierarchical models are well suited because they partition variance across players, courses, and rounds. Options include frequentist mixed-effects models and fully Bayesian hierarchical models. For non-normal outcomes (e.g., hole-level counts), GLMMs or mixture models are appropriate. Bayesian approaches make uncertainty and partial pooling straightforward.

Q5. Can you outline a basic model?

A5. A simple round-level hierarchical model:

ObservedScore_{i,j,t} = μ + α_i + β_j + τ_t + X_{i,j,t}θ + ε_{i,j,t},

where α_i is the player effect, β_j is the course effect, τ_t captures time/tournament effects, X_{i,j,t} are covariates (weather, tee), θ are fixed-effect coefficients, and ε_{i,j,t} ∼ Normal(0, σ^2). Random-effect priors or distributions are assigned to α_i, β_j, and τ_t. Hole-level versions replace round totals with hole strokes.

Q6. How to include course-rating variables?

A6. Include them as fixed covariates, allow random slopes by course, or model the course effect β_j as a function of measurable attributes:

β_j = f(length_j, par_j, green_speed_j, hazard_index_j, slope_j, conditioning_j) + η_j.

This lets analysts attribute difficulty to design features and predict impacts for modified or new courses.

Q7. How should changing course conditions be handled?

A7. Model time-varying round covariates: temperature, wind metrics, precipitation, daily green speed, tee and pin placements, and setup indicators. Interaction terms (e.g., wind × hole length) capture differential impacts. Incorporate temporal random effects or autoregressive terms to model persistent seasonal shifts.

Q8. How is a modern handicap index extracted from the model?

A8. Map a player’s latent ability parameter α_i to the handicap scale used by the governing system. Use posterior means or medians as point estimates and posterior standard deviations for uncertainty. Adjust the index for course rating/slope by computing expected score differences between the reference course and played course.

Q9. What additional metrics should be reported?

A9. Report strokes-gained components (tee-to-green, approach, short game, putting), consistency (residual SD or rolling variance), improvement trajectories (time series of ability estimates), percentile rank within a reference population, and match-relevant probabilities (win odds).

Q10. How does the framework enhance fairness?

A10. By producing more accurate, context-aware estimates of skill and course difficulty, the framework corrects for discrepancies caused by varying setups and environmental conditions. It supports standardized adjustments, equitable tee recommendations, and transparent handicap computations that reflect heterogeneity in play conditions.

Q11. How to validate and calibrate models?

A11. Use holdout validation, k-fold or time-series cross-validation, and posterior predictive checks. Assess calibration by comparing predicted and observed score distributions, evaluate ranking stability (Spearman correlation), and measure predictive accuracy (RMSE, log-likelihood, scoring rules).
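Two of the checks named above are simple to compute by hand: RMSE for point accuracy and interval coverage for calibration. The sketch below uses made-up predictions and fixed ±4-stroke intervals purely for illustration; in practice the intervals would come from the model's predictive distribution.

```python
import math

def rmse(predicted, observed):
    """Root mean squared error between predicted and observed scores."""
    squared = [(p - o) ** 2 for p, o in zip(predicted, observed)]
    return math.sqrt(sum(squared) / len(squared))

def interval_coverage(lower, upper, observed):
    """Fraction of observed scores that fall inside their predictive interval."""
    hits = sum(lo <= o <= hi for lo, hi, o in zip(lower, upper, observed))
    return hits / len(observed)

# Hypothetical held-out rounds
predicted = [80.0, 85.0, 90.0, 78.0]
observed = [82.0, 84.0, 95.0, 78.0]
lower = [p - 4.0 for p in predicted]
upper = [p + 4.0 for p in predicted]

print(f"RMSE = {rmse(predicted, observed):.2f}")
print(f"coverage = {interval_coverage(lower, upper, observed):.2f}")
```

If nominal 90% intervals cover far fewer than 90% of held-out rounds, the model is overconfident, even when its RMSE looks acceptable.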

Q12. How to manage sparse data and new entrants?

A12. Use hierarchical priors for partial pooling that shrink estimates toward population means when data are sparse. For new players or courses, apply informed priors based on demographics or course covariates and update estimates as data accumulate (Bayesian updating or empirical Bayes).
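Partial pooling has a closed form in the simplest case: a normal model with known between-player and within-player variances. The sketch below shows that shrinkage estimate; the variance values and scores are hypothetical, and a full hierarchical model would estimate the variances rather than take them as given.

```python
def shrunken_ability(round_scores, population_mean, between_player_var, within_player_var):
    """Posterior mean of a player's average score under a normal-normal model
    with known variances (the textbook partial-pooling estimate)."""
    n = len(round_scores)
    if n == 0:
        return population_mean  # no data: fall back to the population prior
    sample_mean = sum(round_scores) / n
    # The weight on the player's own data grows with the number of rounds
    weight = n * between_player_var / (n * between_player_var + within_player_var)
    return weight * sample_mean + (1 - weight) * population_mean

# Hypothetical population: mean score 90, between-player variance 16, round noise variance 9
few_rounds = shrunken_ability([80, 82, 81], 90.0, 16.0, 9.0)
many_rounds = shrunken_ability([80, 82, 81] * 7, 90.0, 16.0, 9.0)
print(f"3 rounds:  {few_rounds:.2f}")
print(f"21 rounds: {many_rounds:.2f}")
```

Both players average 81, but the estimate based on three rounds is pulled further toward the population mean of 90, which is the behaviour that protects against overreacting to small samples.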

Q13. How to detect cheating or sandbagging?

A13. Implement statistical monitoring for anomalous trajectories (sudden unexplained improvements). Use robust error models (heavy-tailed distributions, outlier components) to reduce undue influence from suspect rounds. Complement analytics with policy controls: minimum round counts, verification for extreme changes, and tournament eligibility gates.
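A lightweight first screen for anomalous rounds is a modified z-score based on the median and the median absolute deviation (MAD), which is less distorted by the very outliers it is looking for than a mean-and-SD rule. The cutoff of 3.5 is a commonly cited convention, used here as an assumption; flagged rounds are candidates for human review, not evidence of manipulation.

```python
import statistics

def flag_anomalous_rounds(differentials, threshold=3.5):
    """Return indices of rounds whose modified z-score exceeds the threshold."""
    median = statistics.median(differentials)
    mad = statistics.median([abs(d - median) for d in differentials])
    if mad == 0:
        return []  # no spread to scale by, so nothing can be flagged
    flagged = []
    for idx, d in enumerate(differentials):
        modified_z = 0.6745 * (d - median) / mad  # 0.6745 rescales MAD to the normal SD
        if abs(modified_z) > threshold:
            flagged.append(idx)
    return flagged

# Hypothetical score differentials; the sixth round is far better than the rest
history = [15.2, 14.8, 16.1, 15.5, 14.9, 6.0, 15.7]
print(flag_anomalous_rounds(history))  # [5]
```

Pairing such a screen with the policy controls above (verification for extreme changes, eligibility gates) keeps the final decision with a committee rather than an automated rule.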

Q14. What are principal limitations and biases?

A14. Key limitations include unmeasured confounders (practice habits, equipment changes), selection bias (players choose which rounds to submit), recording errors, and difficulty modeling strategic changes in play. Weather and variable course setups create residual confounding if not fully observed. Model misspecification and small-sample effects can bias outcomes.

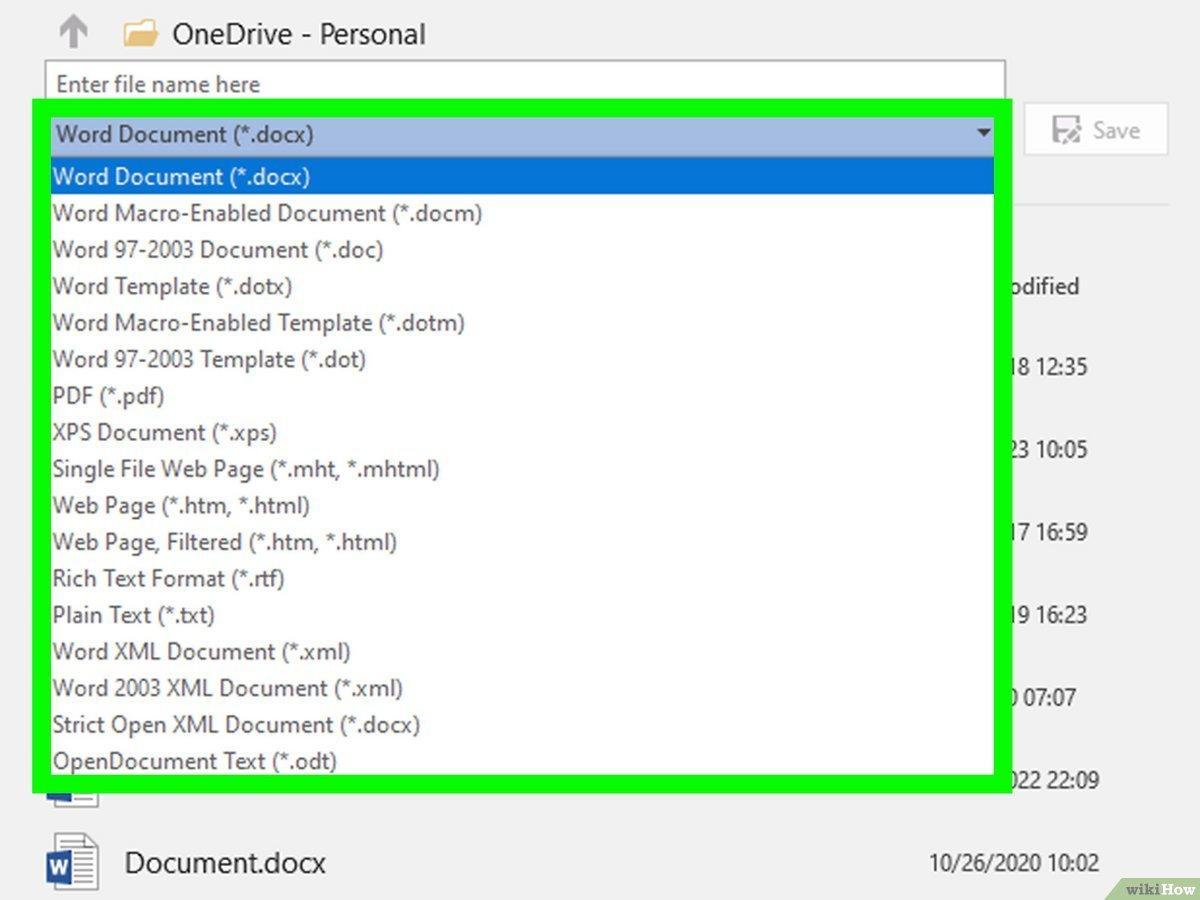

Q15. Recommended software and tools?

A15. Frequentist mixed models: lme4, nlme (R). Bayesian hierarchical models: Stan (rstan/brms), PyMC (Python), JAGS. For large-scale inference: variational methods or specialized latent-factor packages. Visualization/diagnostics: ggplot2, bayesplot, ArviZ.

Q16. What gains come from hole-level and shot-level data?

A16. Granular data enable finer attribution of skill to shot types, improve precision in ability estimates, and allow calculation of strokes-gained and causal analyses of shot selection and execution.

Q17. Ethical and privacy considerations?

A17. Player data can reveal personal and competitive information. Apply de-identification, secure storage, consent procedures, and comply with data-protection regulations. Be transparent about model use and limits to avoid unfair automated decisions without human oversight.

Q18. How can designers and managers use the framework?

A18. Simulate how design changes (lengthening holes, adding hazards, changing green speeds) alter difficulty metrics, evaluate counterfactuals, and quantify trade-offs between challenge and playability. Use outputs to set slope/rating adjustments and tee placements for intended player populations.

Q19. Best practices for operationalizing the system?

A19. Standardize data collection formats, version-control models and document changes, perform routine diagnostics and recalibration, communicate uncertainty clearly, and include human oversight for exceptional cases. Keep an audit trail for handicap and rating changes.

Q20. Future research directions?

A20. Priorities include integrating richer sensor/shot-tracking data, developing causal models of practice and skill development, exploring nonparametric and machine-learning methods for complex interactions, improving real-time updating for live events, and studying behavioral responses to handicap policy shifts.

The analytic approach outlined here brings together statistical modeling, course-rating inputs, and player performance metrics to produce a transparent, testable methodology for fairer and more informative handicaps. By explicitly accounting for course difficulty, environment, and individual variability, the framework aims to improve precision in comparative skill estimates while keeping outputs interpretable for practitioners and governing bodies. Empirical testing across multiple course types is still needed to confirm the method’s potential to reduce bias from heterogeneous conditions and to surface actionable insights for player development and course management.

Deploying these advances responsibly requires sustained attention to data quality, clear disclosure of modeling assumptions, and governance mechanisms to protect fairness across demographic and participation strata. Future work should emphasize longitudinal validation, real-time environmental inputs, adaptive weighting for rare‑event scores, and user-centered interfaces that translate statistical outputs into practical, ethical guidance for players and tournament administrators. With iterative refinement and collaborative validation, this framework can underpin modernization of handicap systems and evidence-based decision-making in golf.

Handicap 2.0 – A Data-Driven Model for Course Ratings and Player Performance

Why modern golf handicaps need analytics

Traditional handicap systems (for example, the World Handicap System / USGA method) do a solid job of normalizing scores across courses using the course rating and slope. The typical handicap differential formula, (Adjusted Gross Score − Course Rating) × 113 / Slope, has been foundational. But modern technology and available shot-level data expose limits:

- Course setup and day‑to‑day conditions (wind, pin positions, firmness) change difficulty in ways slope alone doesn’t capture.

- Handicap indices are aggregate and slow to react to rapid improvements or slumps.

- They often ignore shot distribution and skill component metrics (strokes gained, GIR, proximity).

- Different tees and course styles (parkland vs links, target golf vs power golf) create comparability problems.

Analytics can extend the handicap concept to produce dynamic, interpretable, and course‑aware playing handicaps and strategy recommendations.

Core concepts: course rating, slope, and handicap index (refresher)

Keep these keywords and concepts clear when building analytics: golf handicap, course rating, slope rating, handicap index, playing handicap, net score, and strokes gained.

- Course Rating: expected score for a scratch golfer from a specific tee.

- Slope Rating: relative difficulty for a bogey golfer compared to a scratch golfer (standardized so 113 is average).

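- Handicap Differential: (Adjusted Gross Score − Course Rating) × 113 / Slope.

- Playing Handicap: Handicap Index × (Slope / 113) – a quick conversion to course-specific strokes (the full WHS Course Handicap also adds Course Rating − Par, and a format-specific allowance is then applied).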
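The two formulas in the list above are easy to check with a short worked example. The sketch below implements them exactly as written, without the additional adjustments a governing body may apply; the function names and the sample round are illustrative assumptions.

```python
def handicap_differential(adjusted_gross_score, course_rating, slope_rating):
    """(Adjusted Gross Score - Course Rating) x 113 / Slope."""
    return (adjusted_gross_score - course_rating) * 113 / slope_rating

def slope_adjusted_handicap(handicap_index, slope_rating):
    """Handicap Index x (Slope / 113): the quick course-specific conversion."""
    return handicap_index * slope_rating / 113

# Hypothetical round: 85 on a course rated 71.2 with slope 125
diff = handicap_differential(85, 71.2, 125)
# Hypothetical 12.4 index playing a course with slope 130
strokes = slope_adjusted_handicap(12.4, 130)

print(f"differential = {diff:.1f}")        # 12.5
print(f"course-specific strokes = {strokes:.1f}")  # 14.3
```

On a course of standard difficulty (slope 113) the conversion leaves the index unchanged, which is a quick sanity check for any implementation.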

Analytical extensions – models & metrics that improve handicapping

Use modern statistical methods to improve fairness and tactical decision-making. Key modeling ideas:

- Hierarchical / mixed-effect models: separate player skill, course difficulty, and round-level effects (weather/pins). These shrink extreme estimates toward group means, reducing volatility for low-sample players.

- Elo-style dynamic ratings: treat each round as a “match” between a player and a course; update ratings and incorporate margin of victory (net strokes).

- Strokes‑gained decomposition: break rounds into components (off-the-tee, approach, around-the-green, putting) and compute skill-specific handicaps.

- Shot-level predictive models: predict expected strokes to hole given lie, distance, and hazards to create hole‑by‑hole difficulty maps.

- Machine learning for course clustering: group courses by play style (e.g., driving length rewarded, approach precision rewarded), and create cluster-specific adjustments.

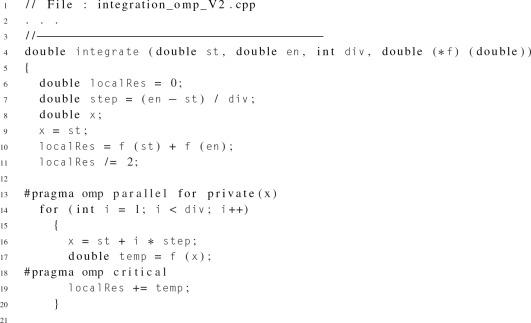

Example: conceptual Bayesian hierarchical model

Model structure (conceptual):

- Observed score_ijkl = μ + player_i + course_j + day_k + hole_l + ε_ijkl

- player_i ~ N(0, σ_player), course_j ~ N(0, σ_course), day_k ~ N(0, σ_day), hole_l ~ N(0, σ_hole), ε_ijkl ~ N(0, σ)

Outputs: posterior distributions of player skill and course difficulty; credible intervals for expected net score on a specific setup. This naturally yields a course‑specific playing handicap with uncertainty estimates – valuable for match pairings or wagers.

Elo-style course + player ratings (simple algorithm)

- Initialize player_rating and course_rating on same scale.

- For each round, expected_net = player_rating − course_rating. Update both ratings based on actual_net − expected_net with K‑factors tuned to sample size.

- Advantages: fast, online updates; produces relative rankings that adapt to form and course set‑up.
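The update described above can be sketched in a few lines. The `k_factor` schedule and its constants are hypothetical (the text only says K-factors should be tuned to sample size), and the sign convention follows the stated formula literally: because expected_net = player_rating − course_rating, a round that goes worse than expected raises the player rating and lowers the course rating.

```python
def k_factor(rounds_played: int, k_max: float = 0.4, k_min: float = 0.05) -> float:
    """Hypothetical schedule: big steps for new entities, small once established."""
    return max(k_min, k_max / (1 + rounds_played / 10))


def elo_update(player_rating, course_rating, actual_net, player_rounds, course_rounds):
    """One online update of both ratings from a single round."""
    expected_net = player_rating - course_rating
    error = actual_net - expected_net  # positive: scored worse than expected
    new_player = player_rating + k_factor(player_rounds) * error
    new_course = course_rating - k_factor(course_rounds) * error
    return new_player, new_course


# Hypothetical round: a brand-new player on a course with 100 recorded rounds.
new_player, new_course = elo_update(
    player_rating=75.0, course_rating=0.0, actual_net=79.0,
    player_rounds=0, course_rounds=100,
)
print(new_player, new_course)
```

After the update, the new expected net score sits between the old expectation and the observed score, and most of the movement is absorbed by the entity with less history.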

Key performance metrics to include

- Strokes Gained (off the tee, approach, around the green, putting) – isolate where strokes are won or lost.

- GIR (Greens in Regulation) and proximity to hole – correlate approach distance to birdie chances.

- Fairways Hit and Driving Distance – especially relevant on long courses.

- Scrambling %, putts per GIR, Penalty Strokes – capture short game and discipline.

- Score distribution metrics: median, mean, standard deviation to evaluate consistency.

- Round context: tee box, pin placement difficulty, wind/temperature (if available).
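Several of these metrics are simple aggregates once hole-level records exist. The sketch below assumes a hypothetical record layout (dicts with `gir`, `score`, and `par` keys) and defines scrambling as par or better on holes where the green was missed in regulation.

```python
from statistics import mean, median, stdev


def score_summary(scores):
    """Central tendency and spread of round scores (needs at least 2 rounds)."""
    return {"mean": mean(scores), "median": median(scores), "stdev": stdev(scores)}


def gir_pct(holes):
    """Percentage of holes where the green was hit in regulation."""
    return 100 * sum(h["gir"] for h in holes) / len(holes)


def scrambling_pct(holes):
    """Percentage of missed greens that still ended in par or better."""
    missed = [h for h in holes if not h["gir"]]
    if not missed:
        return None  # undefined when every green was hit
    saved = sum(h["score"] <= h["par"] for h in missed)
    return 100 * saved / len(missed)


# Hypothetical data for illustration.
summary = score_summary([82, 85, 79, 90, 84])
holes = [
    {"gir": True, "score": 4, "par": 4},
    {"gir": True, "score": 3, "par": 4},
    {"gir": False, "score": 4, "par": 4},  # missed green, saved par
    {"gir": False, "score": 6, "par": 5},  # missed green, bogey
]
print(summary, gir_pct(holes), scrambling_pct(holes))
```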

Practical implementation: data pipeline & recommended tools

Steps and tooling for a working Handicap 2.0 system:

- Data collection: integrate scorecards, golf GPS apps (Arccos, Shot Tracer), manual uploads, or club databases.

- ETL & cleaning: normalize tee names, correct outliers (extreme blow-up rounds), and compute adjusted gross scores by WHS rules where required.

- Feature engineering: create hole-level expected strokes, aggregate strokes‑gained, and contextual variables (weather, tee, pin).

- Modeling stack: Python (pandas, scikit-learn, xgboost), R (lme4, brms), Bayesian (PyMC, Stan), visualization (matplotlib, ggplot).

- Deployment: simple web dashboard with Flask/Django or WordPress plugin; scheduled updates after rounds.
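Two of the cleaning steps lend themselves to a short sketch: tee-name normalization and capping blow-up holes. The alias table is hypothetical and would be replaced by a club's own naming. The cap shown is net double bogey (par + 2 + handicap strokes received on the hole), which is assumed here to be the WHS adjustment intended in the pipeline.

```python
# Hypothetical alias map; extend it with the spellings found in your own data.
TEE_ALIASES = {
    "blues": "blue",
    "blue tees": "blue",
    "whites": "white",
    "white tees": "white",
}


def normalize_tee(raw: str) -> str:
    """Lower-case, collapse whitespace, then map known aliases to one name."""
    key = " ".join(raw.strip().lower().split())
    return TEE_ALIASES.get(key, key)


def adjusted_gross(hole_scores, hole_pars, strokes_received):
    """Sum hole scores after capping each hole at net double bogey."""
    return sum(
        min(score, par + 2 + received)
        for score, par, received in zip(hole_scores, hole_pars, strokes_received)
    )


# Hypothetical three-hole example: the 9 on a par 4 with one stroke is capped at 7.
print(normalize_tee("  Blue   Tees "))
print(adjusted_gross([9, 4, 5], [4, 3, 5], [1, 0, 0]))
```

Capping at the hole level, rather than dropping whole rounds, keeps the rest of a blow-up round available to the models.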

Benefits and practical tips

Benefits:

- More accurate playing handicaps for club events and cross‑course match play.

- Personalized strategy – know when to play safe vs attack pins based on strokes‑gained strengths.

- Improved practice prioritization from component metrics (e.g., if putting loses most strokes).

- Fairer pairing and handicapping in tournaments that mix courses and tees.

Practical tips:

- Use at least 20 rounds for stable individual estimates; apply Bayesian shrinkage for players with fewer rounds.

- Produce both point estimates and uncertainty ranges for playing handicaps – communicate both to players.

- Show the component breakdown (strokes gained by skill area) alongside the handicap so players get actionable practice guidance.

- Update models periodically (weekly/monthly) and after major course changes (new tees, re‑routing).

Case study: applying Handicap 2.0 to a club event

Scenario: a club with three courses (Parkland, Links, Long) wants a single framework to produce fair match play pairings. We use a simple shrinkage adjustment and slope weighting.

| Player | Handicap Index | Course (Slope) | Adjusted Playing Handicap | Expected Net Score |
|---|---|---|---|---|
| Alex | 8.2 | Parkland (120) | 8 | 78 (par 70) |
| Maya | 14.6 | Links (135) | 17 | 89 (par 72) |
| Sam | 22.0 | Long (142) | 28 | 100 (par 72) |

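Notes on table: Adjusted Playing Handicap = round(Handicap Index × (Slope/113) × shrinkage_factor). Expected Net Score = par + Adjusted Playing Handicap, a simple estimate; strictly, par plus playing handicap approximates the gross score a player is expected to shoot, and the net score is that figure minus the handicap. In a production system you’d add course effect offsets derived from historical round data.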

SEO headline, blog headline, and social media copy

Targeted for SEO (long-form, keyword-rich):

Precision Handicapping: How Handicap 2.0 Uses Course Rating, Slope, and Strokes-Gained Analytics to Improve Your Net Score

Blog headline (shorter, click-friendly):

Handicap 2.0 – Use Data to Score Smarter on Any Course

Social media copy:

- Twitter (X): Handicap 2.0: combine strokes‑gained & course ratings to get a smarter playing handicap and better course strategy. #GolfAnalytics #Handicap

- LinkedIn: Want fairer match play and better practice plans? Discover Handicap 2.0 – a data-driven framework that merges course rating, slope, and strokes‑gained metrics to produce dynamic playing handicaps and actionable insights.

- Facebook/Instagram caption: Score smarter. Use analytics to translate your Handicap Index into a course‑specific game plan. Learn how to level the links with Handicap 2.0.

First-hand experience & practical experiments you can run this week

- Track two rounds with a specific focus: play your normal strategy one day and a conservative strategy the next. Compare strokes‑gained and net score differences to see where risk pays off.

- If you use an app that records club data, pull your strokes‑gained by category and identify the single biggest leak (often putting or approach distance).

- For club admins: run a small pilot where half the field gets WHS playing handicaps and half gets analytically adjusted handicaps; compare fairness (variance of net scores) after the event.
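For the club pilot, the fairness comparison can start as a plain variance comparison between the two groups. The sketch below uses made-up net scores and hypothetical function names. With a small field, a difference in variance can easily be noise, so treat the ratio as a first look rather than a verdict.

```python
from statistics import variance


def compare_fairness(whs_nets, adjusted_nets):
    """Sample variance of net scores per group, plus adjusted/WHS ratio.

    A ratio below 1 means net scores were more tightly bunched under the
    analytically adjusted handicaps in this sample.
    """
    v_whs = variance(whs_nets)
    v_adj = variance(adjusted_nets)
    return {"whs_variance": v_whs, "adjusted_variance": v_adj, "ratio": v_adj / v_whs}


# Hypothetical net scores from a small pilot event.
result = compare_fairness(
    whs_nets=[68, 72, 76, 80],
    adjusted_nets=[71, 72, 74, 75],
)
print(result)
```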

Technical and ethical considerations

- Data privacy: always secure player data and get consent before using tracking or third‑party telemetry.

- Transparency: communicate how adjusted handicaps are calculated so members trust the system.

- Bias & fairness: check models for systematic bias against certain tee boxes, age groups, or genders and correct as needed.

Ready-made next steps

- Collect at least 50 rounds across your membership for reliable course and player estimates.

- Start with a simple slope-weighted playing handicap and add one component at a time (e.g., strokes‑gained putting) to measure the improvement each one brings.

- Build a dashboard that shows player skill components, course difficulty, and predicted net score – that insight converts into better decisions and happier golfers.