Introduction

The performance of golf equipment, most notably clubheads, shafts, and grips, exerts a determinative influence on shot outcome, player consistency, and injury risk. Recent advances in materials science, manufacturing, and computational modelling have expanded the design space for clubs and accessories, but they have also increased the complexity of assessing how specific geometric, dynamic, and ergonomic features translate into on-course performance. Consequently, there is a pressing need for rigorous, reproducible evaluation protocols that link measurable equipment properties (e.g., clubhead center-of-gravity location, moment of inertia, coefficient of restitution; shaft stiffness, frequency and damping characteristics; grip shape and pressure distribution) to ball-flight metrics (ball speed, launch angle, spin, dispersion) and to human-centred outcomes (stroke repeatability, comfort, and control).

This article develops and applies an evidence-based framework for the rigorous evaluation of golf equipment performance. We synthesize laboratory techniques (high-speed impact testing, finite-element and multibody simulation, modal analysis, and instrumented mechanical rigs) with field-based assessments (radar/optical launch monitors, motion-capture kinematics, and controlled player trials) to quantify causal relationships and measurement uncertainty. Emphasis is placed on standardized test conditions, statistical power, and the transparency of data-processing pipelines to enable reproducibility and cross-study comparability. By integrating geometric, dynamic, and ergonomic perspectives, the framework aims to inform designers, fitters, and researchers about trade-offs between distance, accuracy, feel, and regulatory compliance, and to provide a methodological basis for future work on optimizing equipment for diverse player populations.

Theoretical Framework and Experimental Protocols for Golf Equipment Evaluation

Contemporary evaluations of golf equipment rest on a coherent theoretical scaffold that reconciles classical mechanics with human biomechanics and materials science. In this scaffold, **hypothesis-driven models**, ranging from rigid-body impact theory to viscoelastic shaft behavior, define expected causal pathways between design variables and performance outcomes. Theoretical constructs are used as heuristic devices to translate qualitative design intuition into quantifiable parameters; as commonly defined in the literature, “theoretical” refers to propositions and frameworks that can be tested empirically rather than to immediate practical prescriptions. This distinction underpins a staged approach in which models generate testable predictions that guide experimental design and instrumentation choices.

The conceptual model employed for rigorous testing isolates three principal domains: **clubhead geometry**, **shaft dynamics**, and **grip ergonomics**, each interacting with player kinematics and ball properties. Primary constructs and testable hypotheses include:

- Moment-of-inertia effects: larger MOI reduces directional dispersion under off-center impacts.

- Frequency tuning: shaft modal characteristics influence energy transfer and launch conditions.

- Contact interface: grip shape and surface properties mediate clubface orientation consistency.

These constructs drive the selection of dependent metrics (e.g., ball speed, spin, dispersion) and covariates (e.g., impact location, swing speed) for subsequent empirical evaluation.

Experimental protocols prioritize repeatability, control, and ecological validity. Standardized laboratory procedures include calibration of launch monitors, use of mechanical impactors for isolating equipment behavior, and human-subject testing with randomized club sequences to control learning and fatigue. Key procedural elements are:

- Environmental control: indoor facilities with wind-neutral conditions and temperature monitoring.

- Replication: minimum trial counts per condition to ensure stable within-club estimates.

- Instrumentation: synchronized high-speed cameras, motion-capture markers, and strain gauges on shafts.

Adhering to these protocols reduces confounds and enables direct comparisons across equipment designs.

Data acquisition must balance precision with interpretability. Typical outcome metrics are ball speed, launch angle, spin rate, smash factor, and lateral dispersion; processing pipelines employ noise filtering, impact-location correction, and advanced statistical modeling (e.g., mixed-effects regressions, equivalence testing). The table below summarizes common pairings of metric and measurement modality used in controlled evaluations.

| Metric | Instrument | Typical Precision |
|---|---|---|
| Ball speed | Radar / photonic Doppler | ±0.3 mph |
| Spin rate | High-speed camera + analysis | ±50 rpm |
| Impact location | Face sensor / impact tape | ±2 mm |
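
The equivalence testing mentioned above asks whether two configurations differ by less than a practically negligible margin, rather than whether they differ at all. A minimal sketch of the two one-sided tests (TOST) procedure for paired differences follows, assuming a sample large enough for a normal approximation (a t-based version is more appropriate for small samples); the function name and example margin are illustrative, not a prescribed standard:

```python
from math import sqrt
from statistics import NormalDist, fmean, stdev

def tost_paired(diffs, margin, alpha=0.05):
    """Two one-sided tests (TOST) for equivalence of paired differences.

    diffs  : paired differences, e.g. ball speed with club A minus club B
    margin : equivalence bound in the same units (a hypothetical choice)
    Uses a normal approximation to the sampling distribution of the mean.
    Returns (mean difference, TOST p-value, equivalent?).
    """
    n = len(diffs)
    mean = fmean(diffs)
    se = stdev(diffs) / sqrt(n)
    z = NormalDist()
    # H0a: mean <= -margin, tested against H1a: mean > -margin
    p_lower = 1.0 - z.cdf((mean + margin) / se)
    # H0b: mean >= +margin, tested against H1b: mean < +margin
    p_upper = z.cdf((mean - margin) / se)
    p = max(p_lower, p_upper)
    return mean, p, p < alpha
```

For example, `tost_paired(diffs, margin=0.5)` on per-player ball-speed differences in mph declares equivalence only when the difference lies convincingly inside ±0.5 mph; failing to find a significant difference is not the same as demonstrating equivalence.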

Validity, transparency, and ethical considerations complete the framework. Internal validity is safeguarded through randomization and blinding of club order where feasible; external validity is addressed by complementing mechanical rig data with representative player samples. Researchers should document calibration procedures, preregister hypotheses when possible, and make anonymized datasets available to enable independent verification. Practical recommendations include:

- Pre-registration of hypotheses and analysis plans.

- Calibration logs accompanying published performance tables.

- Open data to foster reproducibility and meta-analyses.

Such practices ensure that equipment evaluations are not only rigorous but also cumulative and scientifically reproducible.

Quantitative Analysis of Clubhead Geometry: Impacts of Face Curvature, Mass Distribution and Aerodynamics with Fitting Recommendations

Quantifying the influence of face geometry requires treating face curvature (bulge and roll), local coefficient of restitution (COR) variations, and effective radius as measurable variables. Empirical studies and bench tests show that face curvature modifies the azimuthal launch vector and side spin produced at off‑center strikes: increased bulge radius reduces lateral launch error by redistributing contact normals, while roll curvature alters vertical launch on toe/heel offsets. Measured parameters of interest include: face radius (mm), local COR (%), off‑axis spin sensitivity (rpm/mm), and launch-angle deviation (deg/mm). From a modeling standpoint, the relationship is nonlinear; a 1 mm lateral offset can produce a 50-200 rpm difference in side spin depending on curvature and local COR, translating into measurable lateral dispersion at typical carry distances.

Mass distribution is usefully summarized by center‑of‑gravity (CG) coordinates (x, y, z from the face), moment of inertia (MOI) about the vertical and horizontal axes, and discrete movable mass locations. Higher MOI values (typical driver ranges span approximately 3000-7000 g·cm² in commercial measurement systems) correlate with reduced angular acceleration from off‑center impacts and therefore smaller mis‑hit dispersion. Lower CG height and aft CG positions increase launch angle and reduce peak spin when paired correctly with loft; conversely, forward CG yields lower spin and a flatter trajectory. Quantitatively, shifting 5-10 g of rearward mass often raises carry by measurable increments (typically 0.5-2% in carry for controlled test populations) by increasing effective launch and reducing spin decay.
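
Because angular acceleration equals torque divided by MOI, an off-center impulse twists a higher-MOI head less. A minimal rigid-body sketch shows the inverse scaling, assuming a free head (no shaft or hands) and a hypothetical impulse and offset; the absolute numbers are illustrative only:

```python
def head_twist_rate(impulse_n_s, offset_m, moi_g_cm2):
    """Angular velocity (rad/s) imparted to a free rigid head by an
    off-center impulse: delta_omega = J * d / I.

    Idealization: ignores the shaft, the hands, and the finite
    (sub-millisecond) contact duration.
    """
    moi_kg_m2 = moi_g_cm2 * 1e-7  # 1 g*cm^2 = 1e-3 kg * 1e-4 m^2
    return impulse_n_s * offset_m / moi_kg_m2

# Hypothetical inputs: ~3 N*s impact impulse, 20 mm toe offset.
for moi in (4000, 5000):
    print(moi, "g*cm^2:", round(head_twist_rate(3.0, 0.020, moi), 1), "rad/s")
```

The result scales as 1/MOI, so raising MOI from 4000 to 5000 g·cm² reduces the twist rate by 20% for an identical strike.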

Aerodynamic forces are governed by frontal area, shape streamlining, and surface texture (including dimpling or turbulators). The two primary nondimensional metrics are the drag coefficient (Cd) and lift coefficient (Cl); for modern driver geometries, Cd values commonly range from ~0.2 to ~0.5 depending on Reynolds number and yaw angle. CFD and wind‑tunnel analyses show that small reductions in Cd (≈0.02) at tournament swing speeds can increase carry by several meters due to reduced deceleration in flight. Key measurable outputs include yaw‑dependent Cd(ψ), spin‑induced lift, and the rate of deceleration (m/s²). For fitting, aerodynamic gains are most pronounced for players with high ball speed (>55 m/s) and shallow launch angles, where flight time dominates distance.
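
The quantities above combine in the standard drag equation, F = ½ρCdAv², with deceleration a = F/m. A minimal sketch for checking how much a given Cd reduction changes drag follows; the frontal area, speed, and any mass passed in are hypothetical placeholders, not measured values for a particular head or ball:

```python
def drag_force(cd, frontal_area_m2, speed_m_s, air_density_kg_m3=1.225):
    """Aerodynamic drag F = 0.5 * rho * Cd * A * v^2, in newtons.
    The default density is the ISA sea-level value (15 degC)."""
    return 0.5 * air_density_kg_m3 * cd * frontal_area_m2 * speed_m_s ** 2

def deceleration(cd, frontal_area_m2, speed_m_s, mass_kg, air_density_kg_m3=1.225):
    """Drag-induced deceleration a = F / m, in m/s^2."""
    return drag_force(cd, frontal_area_m2, speed_m_s, air_density_kg_m3) / mass_kg

# Hypothetical example: a 0.02 reduction from Cd = 0.40 at 50 m/s.
area = 0.0075  # m^2, assumed frontal area
baseline = drag_force(0.40, area, 50.0)
improved = drag_force(0.38, area, 50.0)
print(f"drag {baseline:.2f} N -> {improved:.2f} N ({1 - improved / baseline:.0%} lower)")
```

Because drag is linear in Cd, a 0.02 reduction from 0.40 lowers drag by 5% at any speed; how much carry that buys depends on the flight model and must be established separately.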

Robust evaluation integrates bench metrics, flight data, and statistical inference. Recommended protocol: (1) establish baseline with launch‑monitor metrics (ball speed, launch angle, total and side spin, smash factor, vertical and horizontal launch), (2) perform controlled off‑center impact matrix across toe/heel and high/low locations, (3) record aerodynamic response with yaw sweeps in CFD or a yaw‑variable tunnel, and (4) fit multivariate linear or nonlinear regression models predicting carry and dispersion from geometry and mass variables. Experimental design should target sufficient repeated strikes (minimum n=30 per configuration under controlled conditions) to permit confidence intervals on mean carry and lateral dispersion, and to detect practical effect sizes (e.g., 1-2 m carry improvements or 5-10% reduction in dispersion).
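
To check whether n = 30 suffices for a target effect, the usual normal-approximation formula for comparing two independent configurations can be applied. A minimal sketch follows, assuming a common shot-to-shot SD; the SD and effect sizes in the usage note are hypothetical, and paired or mixed-effects designs generally need fewer shots:

```python
from math import ceil
from statistics import NormalDist

def n_per_configuration(sigma, delta, alpha=0.05, power=0.80):
    """Shots per configuration needed to detect a mean difference `delta`
    between two independent configurations with common SD `sigma`.

    Normal approximation (two-sided test):
        n = 2 * ((z_{1-alpha/2} + z_{power}) * sigma / delta)^2
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return ceil(2 * ((z_alpha + z_beta) * sigma / delta) ** 2)
```

With an assumed carry SD of 5 m, detecting a 2 m difference at α = 0.05 and 80% power requires `n_per_configuration(5, 2)` = 99 shots per configuration, well above 30, whereas a 5 m difference requires 16.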

Practical fitting recommendations employ a decision matrix linking player profile to geometry and weighting adjustments. The short table below summarizes typical recommendations by player archetype; use it as a starting point for iterative validation on‑course with a launch monitor and controlled wind conditions.

| Player Profile | Primary Adjustment | Expected Effect |
|---|---|---|
| High speed, high spin | Forward CG, lower loft | Lower spin, flatter flight |
| Moderate speed, erratic dispersion | Higher MOI, rear weighting | Reduced dispersion, higher launch |
| Low speed, low launch | Lower CG height, higher loft | Higher launch, increased carry |

Key actionable steps:

- Baseline measurement: quantify ball speed, launch, spin and dispersion.

- Adjust mass in 2-5 g increments and retest; log CG shifts and resulting launch/spin changes.

- Modify face curvature selection (where available) if systematic toe or heel impacts dominate dispersion metrics.

- Validate aerodynamics for high‑speed players by comparing heads in representative yaw and wind conditions.
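
When mass is moved (not added) within a head, the CG shift follows directly from the first moment of mass. A minimal sketch for logging the expected shift before retesting follows; the 200 g head mass in the usage note is an assumed typical value:

```python
def cg_shift_mm(moved_mass_g, move_distance_mm, total_head_mass_g):
    """CG displacement when a movable weight is relocated inside the head.

    Moving mass m a distance d within a body of total mass M (M unchanged)
    shifts the combined CG by m * d / M in the same direction.
    """
    return moved_mass_g * move_distance_mm / total_head_mass_g
```

`cg_shift_mm(5, 40, 200)` returns 1.0: moving a 5 g weight 40 mm rearward in a 200 g head moves the CG about 1 mm rearward. Adding mass instead requires recomputing the combined CG with the new total mass.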

Shaft Dynamics and Material Science: Frequency Response, Torsional Stiffness and Recommended Taper Profiles for Shot Shape Control

Frequency response of a shaft defines its dynamic bending behavior during the swing and is a primary determinant of timing, perceived feel, and interaction with the clubhead. Measured in cycles per minute (CPM) or hertz (Hz), resonant frequency correlates with length, wall thickness, and modulus of the material. Higher resonant frequencies (e.g., ~260-320 CPM / 4.3-5.3 Hz for many modern driver shafts) produce a “stiffer” temporal feel that benefits high angular-velocity players by reducing excessive tip deflection at impact; lower frequencies (~180-240 CPM / 3.0-4.0 Hz) often help moderate-speed players achieve easier release and greater dynamic loft. Accurate evaluation requires a calibrated frequency analyzer and repeatable clamping geometry to isolate bending modes from torsional and shear contributions.
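
Frequency readings appear in either unit; the conversion is simply 1 Hz = 60 CPM. A small helper keeps logged values consistent (the function names are illustrative):

```python
def cpm_to_hz(cpm):
    """Cycles per minute -> hertz."""
    return cpm / 60.0

def hz_to_cpm(hz):
    """Hertz -> cycles per minute."""
    return hz * 60.0

# The CPM band edges quoted above, expressed in Hz.
for cpm in (180, 240, 260, 320):
    print(cpm, "CPM =", round(cpm_to_hz(cpm), 2), "Hz")
```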

Torsional stiffness governs the shaft’s resistance to twist about its longitudinal axis and directly affects face-angle stability and shot dispersion at ball contact. Torque figures are typically reported in degrees (°) of twist under a standardized load; lower torque (e.g., 2.5-3.5°) constrains face rotation and favors predictable shot shapes for players with high rotational accelerations, while higher torque (e.g., 4.5-6.0°) can promote feel and mitigate gear-effect mis-hits for slower swingers. Consider the following practical classifications when selecting shaft torsion characteristics:

- Low torque (≤3.5°): Elite/fast swingers seeking reduced dispersion and sharper shot-shape control.

- Medium torque (3.6-4.5°): All-around players needing a balance of stability and feel.

- High torque (≥4.6°): Moderate-speed players prioritizing comfort and forgiveness.
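
The classification above maps directly onto a small helper for tagging bench-measured torque values. In this minimal sketch the band edges follow the text; values falling between the printed edges (e.g., 3.55°) are assigned to the higher band here, which is an assumption rather than part of the classification:

```python
def torque_class(torque_deg):
    """Map a measured shaft torque (degrees of twist) onto the bands above:
    <=3.5 low, up to 4.5 medium, otherwise high.
    Values between the printed edges go to the higher band (assumption)."""
    if torque_deg <= 3.5:
        return "low"
    if torque_deg <= 4.5:
        return "medium"
    return "high"
```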

Profile geometry (parallel, tapered, and stepped tapers) interacts with material layup to shape tip stiffness, kick point, and energy transfer. A stiffer tip (often achieved via reduced taper or butt-biased layup) resists tip flex and can check the release, aiding fade control and face-angle stability on fast transitions. Conversely, a softer-tipped, more continuously tapered geometry increases tip flex and launch, which tends to promote a stronger release and can assist draw-biased players. When implementing taper strategies for deliberate shot-shape manipulation, designers frequently combine taper morphology with strategic ply orientations (±45° bias, high-modulus carbon in the butt section) to tune both bending and torsional responses without sacrificing overall shaft durability.

The optimal combination of frequency, torsional stiffness, and taper profile depends on measurable swing characteristics. The table below condenses recommended starting points for iterative fitting based on swing speed and release tendencies. Use these as hypotheses to be validated on a launch monitor with high-speed video and a frequency/torque bench.

| Player Profile | Target CPM (Driver) | Torque (°) | Suggested Taper |
|---|---|---|---|
| High-speed, open-face tendency | 280-320 | 2.5-3.5 | Reduced taper / stiff tip |
| Medium-speed, neutral release | 240-280 | 3.5-4.5 | Parallel or mild taper |
| Moderate-speed, closed release | 200-240 | 4.5-6.0 | Continuous taper / softer tip |

Empirical fitting must combine lab-grade metrics with on-course validation. Recommended protocol: measure static frequency and torque on a calibrated bench, capture launch parameters (ball speed, launch angle, spin, and dispersion) across a matrix of shaft options, and perform statistical comparison (mean, standard deviation, and dispersion ellipse). Key fitting caveats include manufacturing tolerances in ply thickness, temperature-dependent modulus shifts in composite materials, and the nonlinear coupling between bending and torsion at high loads. Prioritize repeatability of measures and adopt an iterative, evidence-based approach to converge on a shaft solution that achieves both objective performance targets and the subjective feel required for consistent shot-shape control.
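
The dispersion ellipse in this protocol is commonly derived from the covariance of landing positions. A minimal NumPy sketch follows, assuming approximately bivariate-normal scatter and positions already expressed in metres (lateral, carry); the 95% coverage level is an adjustable assumption:

```python
import numpy as np

def dispersion_summary(lateral_m, carry_m, coverage=0.95):
    """Mean, SD, and a bivariate-normal dispersion ellipse for landing points."""
    pts = np.column_stack([lateral_m, carry_m]).astype(float)
    mean = pts.mean(axis=0)
    sd = pts.std(axis=0, ddof=1)
    cov = np.cov(pts, rowvar=False)
    # Eigen-decomposition of the covariance gives the ellipse axes.
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
    eigvals = np.clip(eigvals, 0.0, None)   # guard against tiny negative round-off
    # Chi-square quantile with 2 degrees of freedom: -2 * ln(1 - p).
    k2 = -2.0 * np.log(1.0 - coverage)
    semi_minor, semi_major = np.sqrt(k2 * eigvals)
    major_dir = eigvecs[:, 1]
    # Orientation of the major axis, measured from the lateral axis.
    angle_deg = float(np.degrees(np.arctan2(major_dir[1], major_dir[0])))
    return {"mean": mean, "sd": sd, "semi_major": float(semi_major),
            "semi_minor": float(semi_minor), "angle_deg": angle_deg}
```

Comparing ellipse area (π × semi-major × semi-minor) across shafts condenses lateral and carry scatter into one figure, while the orientation angle indicates whether misses are predominantly lateral or in distance.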

Grip Ergonomics and Interface Mechanics: Pressure Distribution, Tactile Feedback and Prescriptive Sizing Strategies

Optimal performance begins with a deliberate distribution of contact forces across the grip interface. Empirical studies using pressure-mapping systems demonstrate that excessive concentration of pressure on the dorsal or palmar regions of the hands correlates with increased clubface rotation and inconsistent launch parameters. Thus, designers and fitters should target a **balanced pressure envelope** that minimizes peak pressures while maintaining sufficient normal force for control; typical target ranges measured in skilled golfers fall between 1.5 and 3.5 N·cm⁻² per contact zone from address into the downswing.
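
The balanced-envelope target can be checked zone by zone once pressure-map data have been reduced to one value per contact zone. A minimal sketch follows, assuming mean pressures (N/cm²) over the analysed swing phase; the 1.5-3.5 band comes from the text, while the zone names are hypothetical and depend on the mapping system:

```python
def pressure_envelope_report(zone_pressures, low=1.5, high=3.5):
    """Summarize per-zone grip pressures (N/cm^2) against a target band.

    zone_pressures: mapping of zone name -> mean pressure over the
    analysed swing phase. Returns the peak zone and any zones outside
    the band.
    """
    peak_zone = max(zone_pressures, key=zone_pressures.get)
    outside = {z: p for z, p in zone_pressures.items() if not low <= p <= high}
    return {
        "peak_zone": peak_zone,
        "peak": zone_pressures[peak_zone],
        "outside_band": outside,
    }
```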

Tactile feedback functions as the primary channel for sensorimotor regulation during the swing. Microtexture, hardness gradient, and vibration transmissibility of grip materials modulate afferent signals and alter reflexive muscle recruitment patterns. High-friction microtextures improve initial hand-seat stability but can mask slip onset by attenuating micro-slip cues; conversely, slightly compliant materials that transmit high-frequency vibration preserve vibrotactile cues that inform subtle grip-pressure modulation. In short, **material selection is a trade-off** between slip resistance and informational transparency to the somatosensory system.

Prescriptive sizing strategies must integrate anthropometrics, swing kinematics, and skill level. Standard procedures include measuring hand span and longest-finger-to-palm distance, then selecting diameter adjustments in 1/64″ to 1/16″ increments. Practical prescriptions: players with reduced wrist hinge or small hand span often benefit from +1/32″ to +1/16″ diameters to reduce excessive forearm tension; power swingers with large hands may prefer standard to -1/64″ sizing to enhance distal control. **Customization should be iterative**: initial sizing, on-course validation, and fine-tuning based on launch-monitor feedback and subjective grip comfort.

Implementation protocols for research or fitting sessions should be systematic and reproducible. Recommended procedural elements include:

- Baseline anthropometric capture (hand span, palm width, finger lengths)

- Static and dynamic pressure mapping during 10-20 swing cycles

- Material transmissibility testing using standardized vibration input

- Objective performance linkage via ball-flight metrics (dispersion, spin, launch)

These steps enable correlational analysis between interface mechanics and measurable performance outcomes.

| Golfer Profile | Suggested Diameter Adjustment | Rationale |
|---|---|---|
| Small hands / limited wrist hinge | +1/32″ to +1/16″ | Reduce grip tension; improve leverage |
| Average hands / balanced release | Standard | Preserve proprioceptive feedback |
| Large hands / aggressive release | -1/64″ to 0 | Enhance distal control; reduce bulk |
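
The fractional-inch adjustments in the table can be expressed in millimetres for fitters working in metric. In this minimal lookup sketch, the profile keys are hypothetical labels for the table rows:

```python
from fractions import Fraction

INCH_TO_MM = 25.4

# Diameter adjustment ranges from the table, in inches over standard size.
GRIP_ADJUSTMENT_IN = {
    "small_hands_limited_hinge": (Fraction(1, 32), Fraction(1, 16)),
    "average_hands_balanced_release": (Fraction(0), Fraction(0)),
    "large_hands_aggressive_release": (Fraction(-1, 64), Fraction(0)),
}

def adjustment_mm(profile):
    """Return the (min, max) diameter adjustment in millimetres."""
    lo, hi = GRIP_ADJUSTMENT_IN[profile]
    return float(lo) * INCH_TO_MM, float(hi) * INCH_TO_MM
```

For instance, +1/32″ to +1/16″ corresponds to roughly +0.79 mm to +1.59 mm of diameter.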

Integrating pressure distribution, tactile fidelity, and prescriptive sizing into the fitting workflow produces equipment selections that are both biomechanically consonant and empirically linked to improved consistency and shot-shaping capacity.

Ball-Club Interaction: Coefficient of Restitution, Spin Generation and Launch Conditions with Practical Tuning Advice

A rigorous assessment of energy transfer begins with a quantitative understanding of the coefficient of restitution (COR). COR is the ratio of the face-ball separation speed to the approach speed along the line of impact; together with the head-to-ball mass ratio, it determines how much clubhead kinetic energy is transferred to the ball and is therefore a primary determinant of ball speed. Laboratory measurements correlate higher COR with increased carry, but face deflection, impact location, and ball compression modulate the realized benefit in on‑course conditions. Where applicable, measurement should follow repeatable protocols (consistent swing speed, contact location, and environmental control) to isolate COR effects from secondary variables.
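
The link between COR and ball speed follows from one-dimensional impulse-momentum with restitution. A minimal sketch follows, assuming a head-on, center-face strike on a stationary ball; it ignores loft, off-center impact, shaft contribution, and head rotation, and the 200 g head mass is an assumed typical value (45.93 g is the maximum conforming ball mass):

```python
def ball_speed(head_speed, cor, head_mass_kg=0.200, ball_mass_kg=0.04593):
    """Ball speed after a head-on, center-face impact with a stationary ball.

    From conservation of momentum plus the restitution condition:
        v_ball = v_head * (1 + e) / (1 + m_ball / M_head)
    Returned in the same units as head_speed.
    """
    return head_speed * (1 + cor) / (1 + ball_mass_kg / head_mass_kg)

# Hypothetical example: 45 m/s head speed with COR at the 0.83 limit.
v = ball_speed(45.0, 0.83)
print(f"ball speed {v:.1f} m/s, smash factor {v / 45.0:.3f}")
```

In this idealization the smash factor depends only on COR and the mass ratio, which is why ball-speed comparisons between heads are meaningful only at matched, centered impact locations.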

Spin is generated by frictional forces at the contact patch, which act at the ball's radius and convert the tangential component of the face-ball relative velocity into angular velocity; both tangential velocity and effective loft at impact are therefore decisive. Grooves, surface roughness, and ball cover construction alter frictional behavior and thereby the spin produced across clubs. The table below summarizes practical target windows used in empirical fitting to balance distance and control for representative clubs:

| Club | Target Launch (deg) | Target Spin (rpm) |
|---|---|---|
| Driver | 10-13 | 1800-3000 |
| 7‑Iron | 28-34 | 6000-9000 |
| Pitching Wedge | 40-48 | 9000-12000 |

Launch conditions are a composite outcome of attack angle, dynamic loft and clubface orientation at the instant of contact; the clubhead’s center of gravity location and shaft behavior during the downswing shift those variables systematically. Negative attack angles tend to elevate spin for irons while positive attack angles reduce driver spin if dynamic loft is controlled. Accurate tuning requires synchronous measurement of attack angle, face-to-path, and dynamic loft using a calibrated launch monitor to avoid misleading single‑metric optimizations.
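
The attack-angle effect described above is often summarized as spin loft, the difference between dynamic loft and attack angle. A minimal sketch follows; the loft and attack values in the usage note are hypothetical:

```python
def spin_loft(dynamic_loft_deg, attack_angle_deg):
    """Spin loft (degrees) = dynamic loft - attack angle.

    For a given speed and face-ball friction, a larger spin loft
    generally produces more backspin.
    """
    return dynamic_loft_deg - attack_angle_deg
```

With 12° of dynamic loft, a +3° (upward) driver attack gives a spin loft of 9°, whereas a -3° attack gives 15°, which is consistent with the spin tendencies described above.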

Practical tuning follows an iterative, evidence‑based protocol:

- Map impact locations (impact tape/face mapping) and correct swing or lie angles if off‑center hits dominate.

- Adjust loft and spin through loft sleeves or wedge loft selection to place launch/spin inside target windows shown above.

- Modify shaft properties (flex, tip stiffness, length) to influence dynamic loft and face delivery timing.

- Select ball compression and cover to align with clubhead speeds and spin needs.

- Refine surface interactions via regulated groove maintenance or legal face texturing where allowable.

These steps should be documented with pre/post metrics (ball speed, launch, spin, dispersion) so that trade‑offs between distance and control are explicit and reproducible while ensuring conformity with governing‑body limits.

Measurement Systems and Data Analysis: High-Speed Imaging, Launch Monitors and Statistical Methods for Reliable Comparisons

High-speed imaging provides the temporal resolution necessary to resolve the rapid kinematics of club-ball interaction; typical systems operate at **1,000-10,000 frames per second** for impact analysis, with global-shutter sensors preferred to eliminate rolling-shutter distortion. Critical considerations include sensor sensitivity, lens focal length (to minimize parallax), and lighting (high-intensity pulsed illumination to freeze motion without blur). To ensure usable kinematic output, image-to-world calibration routines must be documented, with explicit reporting of calibration residuals and the assumed coordinate-system origin used for clubhead and ball centring.
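
A quick frame-budget calculation shows whether a camera setting can resolve contact. A minimal sketch follows, assuming a contact duration of roughly 0.45 ms and a ball speed of 70 m/s (both illustrative values, not measurements):

```python
def frames_during(duration_ms, fps):
    """Number of frames captured during an event of the given duration."""
    return duration_ms * 1e-3 * fps

def travel_per_frame_mm(speed_m_s, fps):
    """Distance (mm) an object moves between consecutive frames."""
    return speed_m_s / fps * 1e3

def motion_blur_mm(speed_m_s, exposure_us):
    """Blur length (mm) during one exposure; shorter exposures or pulsed light reduce it."""
    return speed_m_s * exposure_us * 1e-6 * 1e3

for fps in (1_000, 10_000):
    print(fps, "fps:", round(frames_during(0.45, fps), 2), "frames in contact,",
          round(travel_per_frame_mm(70.0, fps), 2), "mm per frame")
```

Under these assumptions, 1,000 fps captures less than one frame of contact, whereas 10,000 fps captures about 4.5 frames with 7 mm of ball travel between frames, which is why the upper end of the range is needed for impact detail.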

Contemporary launch monitors fall into two broad categories, **Doppler radar** and **photometric/optical** systems, each with a distinct error structure: radar systems excel at ball-velocity measurements at longer distances, while photometric systems typically deliver more accurate clubface and spin measurements near the impact zone. System validation requires comparison against traceable standards (e.g., calibrated velocity references, precision rotary tables for spin) and repeated measures to quantify bias and precision. Environmental factors such as temperature, humidity, and background surface reflectance must be logged because they differentially affect radar return and optical contrast.

Robust experimental design underpins reliable equipment comparisons. Key procedural controls include:

- Randomization of club order and ball batches to mitigate temporal trends;

- Blocking by player or swing template when using human subjects to account for inter-swing variability;

- Replication sufficient to estimate within-condition variance (minimums should be justified via power analysis).

Additionally, use of mechanical ball launchers or robotic arms should be described with their repeatability metrics; when human participants are employed, report instructor calibration procedures and inclusion/exclusion criteria.
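
Randomizing club order independently within each player (blocking by player) can be scripted so that the schedule is reproducible and can be published alongside the data. A minimal sketch of simple per-player shuffling follows; it does not implement full counterbalancing such as a Latin square:

```python
import random

def randomized_club_orders(players, clubs, seed=None):
    """Independent random club order for each player.

    Returns {player: [club, ...]}. A fixed seed makes the schedule
    reproducible so it can be reported with the dataset.
    """
    rng = random.Random(seed)
    schedule = {}
    for player in players:
        order = list(clubs)
        rng.shuffle(order)
        schedule[player] = order
    return schedule
```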

Statistical analysis must move beyond simple mean comparisons. Recommended approaches include **linear mixed-effects models** to partition fixed effects (equipment type) and random effects (player, trial), intraclass correlation coefficients (ICC) for repeatability assessment, and bootstrapped confidence intervals for non-Gaussian outcomes. Report effect sizes (Cohen’s d or standardized differences) and minimum detectable differences given the observed variance. For multiple metric evaluations (e.g., carry distance, spin rate, launch angle), apply multivariate techniques or false-discovery-rate corrections to control type I error while retaining interpretability.
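
The false-discovery-rate correction mentioned above is most often implemented as the Benjamini-Hochberg step-up procedure. A minimal sketch follows, assuming one p-value per metric comparison (e.g., carry, spin rate, launch angle):

```python
def benjamini_hochberg(p_values, q=0.05):
    """Benjamini-Hochberg step-up procedure.

    Returns a list of booleans, True where the hypothesis is rejected
    while controlling the false-discovery rate at level q.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Largest rank k whose sorted p-value satisfies p_(k) <= k/m * q.
    k_max = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank / m * q:
            k_max = rank
    rejected = [False] * m
    for rank, i in enumerate(order, start=1):
        if rank <= k_max:
            rejected[i] = True
    return rejected
```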

Data processing and reporting standards complete the evaluation pipeline: clearly state filtering algorithms, time-synchronization methods (especially when combining high-speed video with launch-monitor data), and criteria for outlier exclusion. The following table summarizes common measurement systems and typical precision expectations used in peer-reviewed equipment testing:

| System | Primary Metrics | Typical Precision |
|---|---|---|
| Doppler radar | Ball speed, carry | ±0.2-0.6 mph |
| Photometric/optical | Spin, launch angle, clubface | Spin ±50-150 rpm |
| High-speed camera | Club kinematics, impact deformation | Temporal ±0.001-0.01 ms |

Provide raw data access or supplementary files where possible, and include a concise methods appendix so readers can reproduce calibration, processing, and statistical workflows; this transparency is essential for credible, comparative evaluation of golf equipment performance.

User-Centric Performance Metrics: Translating Laboratory Results into On-Course Outcomes and Customized Fitting Protocols

Laboratory-derived metrics provide precise, repeatable measurements of club and ball behavior, but their practical value depends on rigorous translation into measurable on-course improvements. Evaluators must prioritize **ecological validity** by quantifying how controlled launch-monitor outputs correlate with real-world shot outcomes across diverse playing conditions. Empirical mapping between laboratory data and on-course performance reduces the risk of overfitting equipment choices to idealized swings and instead emphasizes reproducible benefits for the end user.

Robust protocols integrate repeated-measures testing, mixed-effects statistical models, and targeted on-course validation rounds to account for **inter-subject variability** and situational factors (wind, lie, pressure). Calibration steps should include baseline profiling, randomized equipment trials, and split-sample validation, so that laboratory gains are tested for statistical significance and also checked for practical relevance to the individual player. This hybrid methodology ensures decisions are both data-driven and player-centered.

Practical evaluation centers on a compact set of user-centric metrics that bridge lab precision and course realities. Key metrics include:

- Launch and Spin Profiles – distribution of launch angle and spin rates under typical swing variability.

- Dispersion Consistency – lateral and carry standard deviations across repeated shots.

- Playability Indicators – propensity for playable lies, interaction with turf, and shot-shaping capacity.

- Subjective Fit Measures – comfort, confidence, and perceived feedback under pressure scenarios.

To facilitate rapid interpretation, simple mapping tables translate laboratory outputs into fitting actions and expected on-course consequences. The table below is intentionally concise to support fitting workflows:

| Lab Metric | On-Course Implication | Fitting Adjustment |
|---|---|---|
| High total spin | Shot ballooning, reduced roll | Loft reduction / lower-spin shaft |
| Wide dispersion SD | Lost fairways; fewer scoring opportunities | Stiffer shaft / lie-angle adjustment or clubhead design |
| Low ball speed | Distance deficit on long holes | Optimize launch (loft/shaft) / increase COR |

Customized fitting protocols should be governed by explicit **decision thresholds** and an iterative testing cadence: set target ranges for strokes-gained relevant to the player’s level, prioritize the smallest detectable improvement that yields a meaningful on-course advantage, and iterate equipment changes with confirmatory on-course testing. Emphasizing predictive validity, player preferences, and measurable shot dispersion ensures fittings translate into sustainable performance gains rather than transient laboratory anomalies.
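
One common way to make the smallest detectable improvement concrete is the minimal detectable change (MDC) derived from test-retest reliability. A minimal sketch follows, assuming the SD and intraclass correlation (ICC) come from a prior reliability session with the same player group; the values in the usage note are hypothetical:

```python
from math import sqrt

def minimal_detectable_change(sd, icc, z=1.96):
    """MDC at roughly 95% confidence from test-retest reliability.

    SEM = SD * sqrt(1 - ICC);  MDC = z * sqrt(2) * SEM
    Returned in the same units as sd.
    """
    sem = sd * sqrt(1.0 - icc)
    return z * sqrt(2.0) * sem
```

With an assumed carry SD of 6 m and ICC of 0.90, the MDC is about 5.3 m, so a fitting change would need to show a larger carry gain before it is treated as a real improvement rather than measurement noise.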

Recommendations for Manufacturers and Coaches: Design Trade-offs, Testing Standards and Evidence-Based Specification Guidelines

Manufacturers must navigate explicit design trade-offs that influence on-course performance and player adaptation. Prioritizing distance often requires concessions in spin control and feel; similarly, maximizing forgiveness can reduce workability for skilled players. An explicit, evidence-driven conversation about these trade-offs, grounded in quantified metrics rather than marketing descriptors, enables rational product positioning and clearer coach‑player communication.

- Distance vs. Control: higher COR and lower spin may increase carry but reduce shot-shape predictability.

- Forgiveness vs. Workability: perimeter weighting aids mis-hits but dampens shot-shaping capability.

- Weight Savings vs. Durability: composite or thin-face solutions improve mass distribution but can shorten lifecycle under tournament use.

Testing protocols must be standardized and reproducible to support meaningful comparisons across models and brands. Recommended practices include controlled environmental conditions (temperature, humidity, wind-free spaces), agreed launch‑monitor calibration procedures, minimum sample sizes stratified by player skill tier, and predefined statistical significance thresholds for performance claims. Reports should present mean values, standard deviations, and confidence intervals for each metric to communicate both central tendency and variability.

| Metric | Recommended Condition | Acceptance Threshold |
|---|---|---|
| Ball speed | Indoor, calibrated launch monitor | Δ ≤ 0.5% between devices |
| Spin rate | Standardized ball model and loft | 95% CI reported |
| Shot dispersion | Minimum 30 impacts per configuration | SD and directional bias documented |
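
The between-device ball-speed threshold in the table can be checked from shots recorded simultaneously by two monitors. In this minimal sketch, expressing the difference relative to the two devices' average is an assumption, since the table does not specify the reference:

```python
def devices_agree(speeds_a, speeds_b, tolerance_pct=0.5):
    """Percent difference between two launch monitors' mean ball speed,
    relative to their average, checked against a tolerance.

    Assumes both lists describe the same shots measured simultaneously.
    Returns (difference in percent, within tolerance?).
    """
    mean_a = sum(speeds_a) / len(speeds_a)
    mean_b = sum(speeds_b) / len(speeds_b)
    diff_pct = abs(mean_a - mean_b) / ((mean_a + mean_b) / 2) * 100
    return diff_pct, diff_pct <= tolerance_pct
```

A mean-level check can hide shot-level disagreement, so it is best paired with the per-shot bias and limits of agreement described in the measurement-systems section.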

Specification guidelines should be evidence‑based and player-centered: categorize target cohorts (e.g., recreational, competitive amateur, elite) and tailor tolerances accordingly. Require manufacturers to publish test protocols, raw summary statistics, and criteria used for selecting test subjects. Emphasize reproducibility by mandating third‑party or independent replication of key performance claims before market release.

Coaches play a pivotal role in translating equipment data into actionable prescription. Develop coach-facing output that links measurable equipment differences to observable swing outcomes and practice progressions. Practical steps include establishing a shared vocabulary with manufacturers, integrating controlled on-course trials into fitting processes, and using routine verification drills to confirm that nominal lab gains manifest under play. For operationalization, adopt an evidence checklist that includes:

- Independent verification of manufacturer claims

- Player-specific trial blocks (minimum 18 holes or equivalent shot sample)

- Documentation of environmental and physiological variables during testing

- Periodic re-evaluation aligned with equipment wear and swing changes

Q&A


Q1: What is meant by “rigorous evaluation” of golf equipment performance?

A1: Rigorous evaluation denotes an objective, reproducible, and transparent assessment that uses validated measurement instruments (e.g., launch monitors, motion capture), well-controlled experimental protocols, appropriate statistical modelling, and clear reporting of uncertainty and effect sizes. It minimizes bias through careful control of confounders (environment, ball model, player state), standardizes test procedures, and assesses both statistical and practical significance.

Q2: Which primary outcome metrics should be collected when testing clubs and balls?

A2: Core ball-flight and impact metrics include clubhead speed, ball speed, smash factor, launch angle, spin rate (total and side), spin axis, carry and total distance, apex height, and lateral dispersion (shot dispersion and deviation). For clubs, additional metrics include center-of-pressure/impact location and clubface angle at impact. For player- or equipment-focused biomechanics, record kinematics (joint angles, segment velocities) and kinetics (ground reaction forces).

Q3: What instruments are recommended and what are their minimum requirements?

A3: Recommended instruments include radar- or camera-based launch monitors (commercial systems validated in the literature), high-speed video or optical motion-capture systems for club and body kinematics, and force platforms if ground kinetics are of interest. Minimum requirements: temporal resolution sufficient to resolve impact dynamics (motion capture typically ≥200 Hz; high-speed cameras 1,000+ fps for impact detail), validated accuracy for ball speed/launch parameters, and routine calibration against known standards.

Q4: How should the testing environment be controlled?

A4: Control ambient conditions that affect ball flight: temperature, air pressure, humidity, and wind. Use indoor testing facilities or outdoor days with negligible wind. Use a consistent ball model and ensure consistent ball condition (new or standardized number of shots). Calibrate instruments at the start of each session and allow equipment and participants time to acclimate.

Q5: What is an appropriate experimental design for comparing equipment (e.g., shafts, heads, balls)?

A5: Use within-subject repeated-measures designs when possible to control inter-player variability. Randomize and counterbalance the order of conditions to prevent order and fatigue effects. Block by player skill level if heterogeneity exists. If between-subject designs are required, increase sample size and match participants on key covariates (e.g., handicap, clubhead speed).
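One common way to counterbalance condition order is a balanced Latin square (often called a Williams design): every condition appears once in each position, and each condition immediately follows every other condition equally often. The Python sketch below is illustrative only; the function names, player IDs, and shaft labels are hypothetical, and players are simply cycled through the rows of the square.

```python
def balanced_latin_square(n: int) -> list[list[int]]:
    """Condition orders (one per row) forming a balanced Latin square for n conditions."""
    # First row follows the pattern 0, 1, n-1, 2, n-2, ... (alternating low and high indices).
    first = [0]
    low, high = 1, n - 1
    take_low = True
    while len(first) < n:
        if take_low:
            first.append(low)
            low += 1
        else:
            first.append(high)
            high -= 1
        take_low = not take_low
    # Each later row shifts every condition index by one (mod n).
    rows = [[(c + shift) % n for c in first] for shift in range(n)]
    # With an odd number of conditions, appending the reversed rows balances carryover.
    if n % 2 == 1:
        rows += [row[::-1] for row in rows]
    return rows


def assign_orders(players: list[str], conditions: list[str]) -> dict[str, list[str]]:
    """Cycle players through the square's rows and translate indices into condition labels."""
    rows = balanced_latin_square(len(conditions))
    return {
        player: [conditions[c] for c in rows[i % len(rows)]]
        for i, player in enumerate(players)
    }


if __name__ == "__main__":
    # Hypothetical shaft conditions and player IDs.
    shafts = ["Regular", "Stiff", "X-Stiff", "Senior"]
    for player, order in assign_orders(["P1", "P2", "P3", "P4"], shafts).items():
        print(player, order)
```

With an even number of conditions, full balance needs a multiple of n players; with an odd number, the mirrored rows double the number of distinct orders.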

Q6: How many trials and participants are typically required?

A6: There is no universal number; required sample sizes depend on expected effect sizes and variability. For within-subject comparisons, collect multiple repeated swings per condition (commonly 15-30 valid shots) to estimate a participant mean and within-subject variance. For group-level inference, perform an a priori power analysis using pilot variability to determine participant count; many applied studies use 15-30 players per group for moderate effects, but larger samples are needed for small effects or broad generalization.
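As a rough sketch of an a priori calculation for a within-subject comparison, the normal-approximation formula n = ((z(1 − α/2) + z(1 − β)) · σd / δ)² can be applied, where σd is the standard deviation of per-player differences between conditions (from pilot data) and δ is the smallest difference worth detecting. The pilot values in the example are hypothetical. Because the formula uses the normal rather than the t distribution, it slightly underestimates the required number of players for small samples; dedicated power software gives an exact t-based figure.

```python
import math
from statistics import NormalDist


def paired_sample_size(delta: float, sd_diff: float,
                       alpha: float = 0.05, power: float = 0.80) -> int:
    """Players needed to detect a mean within-player difference `delta` (two-sided test).

    Normal approximation: n = ((z_{1-alpha/2} + z_{1-beta}) * sd_diff / delta) ** 2,
    where sd_diff is the SD of per-player condition differences from pilot data.
    """
    if delta <= 0 or sd_diff <= 0:
        raise ValueError("delta and sd_diff must be positive")
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return math.ceil(((z_alpha + z_beta) * sd_diff / delta) ** 2)


if __name__ == "__main__":
    # Hypothetical pilot values: smallest ball-speed gain of interest 3 mph,
    # SD of per-player differences 4 mph.
    print(paired_sample_size(delta=3.0, sd_diff=4.0))  # 14
```

Halving δ roughly quadruples the required count, which is why small effects demand much larger samples.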

Q7: How should shot-level variability and outliers be handled?

A7: Define objective inclusion criteria before testing (e.g., exclude mis-hits based on impact location or grossly aberrant launch or ball-speed values). Report the number of and reasons for excluded trials. Use robust estimation (medians or trimmed means) or mixed-effects models that incorporate within-player variability. Present both raw scatter and summary statistics (means, SD, coefficient of variation).

Q8: What statistical models are most appropriate?

A8: Linear mixed-effects models are preferred for repeated-measures designs because they model within-player correlation and allow random intercepts/slopes. For complex outcomes (e.g., dispersion patterns), use multivariate or spatial models. Report effect sizes (mean differences with 95% confidence intervals), model assumptions, and, where relevant, adjust for multiple comparisons. Bayesian models are useful for incorporating prior information and providing probabilistic effect statements.
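For a two-condition comparison (reference vs. test equipment), one possible random-intercept, random-slope specification is sketched below for player i, condition j, and shot k, with x_j a 0/1 indicator for the test condition. The notation is illustrative rather than a prescribed model.

```latex
\begin{aligned}
y_{ijk} &= \beta_0 + \beta_1 x_j + u_{0i} + u_{1i} x_j + \varepsilon_{ijk},\\
\begin{pmatrix} u_{0i} \\ u_{1i} \end{pmatrix} &\sim \mathcal{N}\!\left(\mathbf{0}, \boldsymbol{\Sigma}_u\right),
\qquad \varepsilon_{ijk} \sim \mathcal{N}\!\left(0, \sigma^2\right).
\end{aligned}
```

Here β₁ is the average equipment effect across players, u₁ᵢ lets that effect vary by player, and σ² captures shot-to-shot noise. In Python, statsmodels can fit this form with `smf.mixedlm("ball_speed ~ condition", data, groups=data["player"], re_formula="~condition")` (with `smf` as `statsmodels.formula.api`); the column names are hypothetical.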

Q9: How should practical significance be addressed versus statistical significance?

A9: Present effect sizes in meaningful units (yards, mph, degrees) and contextualize against real-world thresholds (e.g., distance gained needed to change club choice or tournament outcomes). Report the minimal detectable or minimally important difference based on measurement error and player variability. Avoid over-emphasizing p-values; emphasize confidence intervals and the likelihood that observed differences are meaningful to players.

Q10: How to quantify and report measurement error and repeatability?

A10: Report instrument precision and accuracy, intra-class correlation coefficients (ICC), coefficient of variation (CV), and standard error of measurement (SEM). Use repeated calibrations and repeated-measures within sessions to estimate repeatability. Provide separate estimates of within-session (short-term) and between-session (long-term) variability.
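A minimal sketch of these repeatability statistics, assuming one summary value (e.g., mean ball speed) per participant per session: ICC(2,1) (two-way random effects, absolute agreement, single measurement), SEM taken as the square root of the residual mean square (another common definition is SD · √(1 − ICC)), and the 95% minimal detectable change MDC95 = 1.96 · √2 · SEM, one way to operationalize the minimal detectable difference mentioned in A9. The function names and data are hypothetical.

```python
import math
from statistics import mean, stdev


def icc_2_1(scores: list[list[float]]) -> tuple[float, float]:
    """ICC(2,1): two-way random effects, absolute agreement, single measurement.

    `scores` holds one row per participant and one column per session.
    Returns (icc, ms_error), where ms_error is the residual mean square.
    """
    n, k = len(scores), len(scores[0])
    grand = mean(v for row in scores for v in row)
    row_means = [mean(row) for row in scores]
    col_means = [mean(row[j] for row in scores) for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((v - grand) ** 2 for row in scores for v in row)
    ss_err = max(ss_total - ss_rows - ss_cols, 0.0)  # guard against rounding below zero
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    icc = (ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n)
    return icc, ms_err


def sem_from_ms_error(ms_err: float) -> float:
    """Standard error of measurement as the square root of the residual mean square."""
    return math.sqrt(ms_err)


def mdc95(sem: float) -> float:
    """Minimal detectable change at 95% confidence: 1.96 * sqrt(2) * SEM."""
    return 1.96 * math.sqrt(2) * sem


def cv_percent(values: list[float]) -> float:
    """Coefficient of variation (SD / mean, in percent), e.g. for one player's shots."""
    return 100 * stdev(values) / mean(values)


if __name__ == "__main__":
    # Hypothetical mean ball speeds (mph) for three players in two sessions.
    sessions = [[150, 152], [160, 158], [170, 171]]
    icc, ms_e = icc_2_1(sessions)
    print(f"ICC(2,1) = {icc:.3f}, MDC95 = {mdc95(sem_from_ms_error(ms_e)):.2f} mph")
```

A between-condition difference smaller than the MDC95 cannot be distinguished from measurement noise for an individual player.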

Q11: How to account for confounding factors such as ball model, loft/lie, and grip?

A11: Standardize equipment parameters whenever the goal is to isolate a single variable. When testing multiple equipment designs, control or record loft, lie, shaft length and flex, grip size, and head weight. If these covariates vary, include them analytically in multivariable models or restrict comparisons to matched specifications.

Q12: What role does biomechanical data (motion capture, EMG) play in equipment evaluation?

A12: Biomechanical measures explain the mechanism of the player-equipment interaction: how equipment changes alter swing kinematics, loading, or timing. They are essential when equipment changes have small ball-flight effects but modify player movement patterns or injury risk. Careful synchronization of biomechanical and ball-flight data is necessary.

Q13: How should shot dispersion and accuracy be quantified?

A13: Use spatial metrics: mean lateral deviation, standard deviation of lateral and longitudinal distances, group dispersion area (e.g., bivariate ellipse), and miss distributions relative to a target line. For scoring relevance, convert dispersion into the likelihood of hitting the fairway or green for a given hole geometry and conditions.
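The sketch below computes several of these metrics under stated assumptions: landing positions are given as signed lateral offsets from the target line (negative = left) and longitudinal distances in yards; the dispersion area is a 95% prediction ellipse under a bivariate-normal assumption (area = π · χ²₂(0.95) · √det Σ); and the fairway-hit likelihood assumes normally distributed lateral misses and a fairway centered on the target line. Function names and data are hypothetical; real hole geometry would need a more detailed model.

```python
import math
from statistics import NormalDist, mean, variance

# 95th percentile of a chi-square distribution with 2 degrees of freedom (about 5.991).
CHI2_2DF_95 = -2 * math.log(0.05)


def dispersion_summary(lateral: list[float], longitudinal: list[float]) -> dict[str, float]:
    """Spread of landing positions (same units as inputs), incl. a 95% prediction-ellipse area."""
    n = len(lateral)
    mx, my = mean(lateral), mean(longitudinal)
    var_x, var_y = variance(lateral), variance(longitudinal)
    cov = sum((x - mx) * (y - my) for x, y in zip(lateral, longitudinal)) / (n - 1)
    det = max(var_x * var_y - cov ** 2, 0.0)  # determinant of the 2x2 covariance matrix
    return {
        "mean_lateral": mx,
        "sd_lateral": math.sqrt(var_x),
        "sd_longitudinal": math.sqrt(var_y),
        "ellipse95_area": math.pi * CHI2_2DF_95 * math.sqrt(det),
    }


def fairway_hit_probability(lateral: list[float], fairway_width: float) -> float:
    """P(|lateral| <= width / 2), assuming normal lateral misses and a centered fairway."""
    dist = NormalDist(mean(lateral), math.sqrt(variance(lateral)))
    half = fairway_width / 2
    return dist.cdf(half) - dist.cdf(-half)


if __name__ == "__main__":
    # Hypothetical driver landing data (yards).
    lat = [-12.0, -4.0, 3.0, 8.0, -1.0, 15.0, -7.0, 2.0]
    lon = [238.0, 245.0, 251.0, 242.0, 247.0, 236.0, 249.0, 244.0]
    print(dispersion_summary(lat, lon))
    print(f"P(fairway, 30 yd wide) = {fairway_hit_probability(lat, 30.0):.2f}")
```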

Q14: How do environmental conditions and altitude affect testing results?

A14: Temperature, air density, and altitude alter ball carry for the same launch conditions. Report environmental conditions and, where relevant, model air-density effects on distance. For comparisons, either normalize to standard atmospheric conditions (using ball-flight physics models) or perform all tests under the same controlled environment.
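As a first step toward normalization, air density can be estimated from temperature, pressure, and relative humidity by treating moist air as a mixture of dry air and water vapor (ideal-gas law for each), with saturation vapor pressure from a Magnus-type approximation. This sketch only yields density and its ratio to the ISA sea-level value of 1.225 kg/m³; converting that into a carry adjustment still requires a ball-flight model. The pressure input is assumed to be absolute (station) pressure, not a sea-level-corrected barometer reading; function names are hypothetical.

```python
import math

R_DRY = 287.05    # specific gas constant of dry air, J/(kg*K)
R_VAPOR = 461.5   # specific gas constant of water vapor, J/(kg*K)
RHO_ISA = 1.225   # ISA sea-level air density at 15 degrees C, kg/m^3


def saturation_vapor_pressure_pa(temp_c: float) -> float:
    """Saturation vapor pressure over water (Pa), Magnus-type approximation."""
    return 610.94 * math.exp(17.625 * temp_c / (temp_c + 243.04))


def air_density(temp_c: float, station_pressure_pa: float, rel_humidity: float) -> float:
    """Moist-air density (kg/m^3); rel_humidity is a fraction in [0, 1]."""
    t_k = temp_c + 273.15
    p_vapor = rel_humidity * saturation_vapor_pressure_pa(temp_c)
    p_dry = station_pressure_pa - p_vapor
    return p_dry / (R_DRY * t_k) + p_vapor / (R_VAPOR * t_k)


if __name__ == "__main__":
    # Hypothetical session conditions: 28 degrees C, 84 kPa station pressure, 40% RH.
    rho = air_density(28.0, 84_000.0, 0.40)
    print(f"density = {rho:.3f} kg/m^3 ({rho / RHO_ISA:.1%} of ISA sea level)")
```

Humid air is slightly less dense than dry air at the same temperature and pressure, because water vapor is lighter than the dry-air mixture it displaces.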

Q15: What ethical and safety considerations apply to human participant testing?

A15: Obtain informed consent, ensure participant fitness for repeated maximal-effort swings, provide protective screens and safe facility layout, and follow institutional review board (IRB) protocols if research involves identifiable data or interventions. Allow rest to mitigate fatigue and limit injury risk.

Q16: How to report methods and results to ensure reproducibility?

A16: Provide full details: participant demographics and skill level, number of trials and exclusion criteria, equipment specifications (club model, loft, shaft), ball model and condition, launch-monitor make/model and calibration procedures, environmental conditions, statistical models and software, and raw or summary data (when possible). Include effect estimates with uncertainty.

Q17: How to translate lab testing to on-course performance?

A17: Laboratory metrics (carry, dispersion, spin) often predict on-course outcomes but must be contextualized by course architecture, lie variability, wind, and strategic choice. Use modeling or simulations to estimate strokes-gained impacts, and where possible validate lab findings with field trials under representative play.

Q18: What are common pitfalls to avoid?

A18: Common pitfalls include small sample sizes, inadequate randomization, failure to control for ball or environmental variability, over-reliance on p-values, not reporting measurement uncertainty, and conflating statistical significance with practical relevance. Also avoid using unvalidated instruments or failing to account for player learning/fatigue.

Q19: How should manufacturers and fitters use rigorous evaluation results?

A19: Use standardized protocols and transparent reporting to inform product claims, ensure fitting recommendations are based on measurable performance differences relevant to the individual (e.g., shaft flex for swing speed ranges), and provide clients with both statistical and practical interpretation of differences.

Q20: What are emerging directions for rigorous equipment evaluation?

A20: Advances include higher-fidelity sensor fusion (combining IMUs, high-speed video, and radar), machine-learning models that predict on-course performance from lab metrics, individualized equipment optimization using player-specific performance envelopes, and open-data repositories for meta-analytic synthesis. Greater emphasis on ecological validity and linking lab metrics to strokes-gained or other performance outcomes is expected.


Wrapping up

A rigorous, multidisciplinary approach to evaluating golf equipment, encompassing precise quantification of clubhead geometry, dynamic characterization of shafts, and systematic assessment of grip ergonomics, provides the empirical foundation necessary to move beyond anecdote and marketing claims. Controlled laboratory experiments, validated simulation, and ecologically valid on-course trials together enable robust estimation of how design parameters influence key outcome metrics (ball speed, launch conditions, dispersion, feel, and injury risk) while accounting for inter-player variability and environmental factors.

The implications of such evaluations extend across stakeholders. Manufacturers can use reproducible test protocols and transparent reporting to optimize designs and communicate trade-offs; coaches and fitters can make evidence-based recommendations tailored to player biomechanics and skill levels; and standards bodies can refine conformity criteria that preserve competitive fairness without stifling innovation. Equally important are methodological considerations: rigorous statistical analysis, properly powered experiments, and open sharing of data and methods are essential to ensure results are interpretable and generalizable.

Limitations inherent to any evaluation (differences between lab and field performance, the challenge of capturing subjective constructs such as feel, and the need to balance population-level findings against individual customization) must be acknowledged. Future research should prioritize longitudinal and in situ studies, integrate advanced sensing (e.g., high-speed stereophotogrammetry, IMUs, pressure mapping) with computational models (FEA, multibody dynamics, fluid-structure interaction), and explore data-driven personalization using machine learning while maintaining transparency and physical interpretability.

Ultimately, the pursuit of rigor in golf equipment evaluation is not an end in itself but a means to improve player performance, reduce injury risk, and guide responsible innovation. By embracing standardized methods, multidisciplinary collaboration, and open science practices, the field can deliver actionable, trustworthy knowledge that benefits players, practitioners, manufacturers, and the broader scientific community.

Rigorous Evaluation of Golf Equipment Performance

Why a Rigorous Approach Matters for Golf Equipment

Golf performance is the product of player skill plus the right golf equipment. A rigorous evaluation process, built around launch monitor data, controlled lab tests, and on-course validation, reveals which golf clubs, golf balls, and accessories genuinely improve carry distance, consistency, and forgiveness. This structured testing reduces guesswork and helps golfers make evidence-based decisions at club fitting or when buying new equipment.

Core Metrics to Measure (and Why They Matter)

- Clubhead speed – baseline for potential ball speed and distance.

- Ball speed – the immediate driver of distance; used to calculate smash factor.

- Launch angle – affects carry and total distance; different loft/shaft combinations change optimal launch.

- Spin rate – high driver spin reduces roll; short game spin boosts stopping power.

- Smash factor – ball speed divided by clubhead speed; indicates energy transfer efficiency.

- Clubface (face angle) and spin axis – determine ball flight shape and curvature.

- MOI & CG (moment of inertia & center of gravity) – affect forgiveness and launch window.

- Turf interaction – notably for irons and wedges; affects spin and distance control.

- Consistency / dispersion – how tightly shots are grouped where they land; critical for scoring and course management.
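Smash factor follows directly from two launch monitor readings. This minimal sketch (function names hypothetical) computes it per shot and averages the per-shot values; averaging ratios gives a slightly different number than dividing mean ball speed by mean clubhead speed, so it is worth stating which convention a report uses. For example, the case-study numbers later in this article (137 mph ball speed at 96 mph clubhead speed) give a smash factor of about 1.43.

```python
from statistics import mean


def smash_factor(ball_speed: float, club_speed: float) -> float:
    """Ball speed divided by clubhead speed (both in the same units, e.g. mph)."""
    if club_speed <= 0:
        raise ValueError("clubhead speed must be positive")
    return ball_speed / club_speed


def mean_smash_factor(shots: list[tuple[float, float]]) -> float:
    """Average of per-shot smash factors for (ball_speed, club_speed) pairs."""
    return mean(smash_factor(ball, club) for ball, club in shots)


if __name__ == "__main__":
    print(f"{smash_factor(137, 96):.3f}")  # 1.427
```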

Laboratory vs. On-course Testing: A Dual Approach

Controlled Lab Testing (Launch Monitor + High-Speed Capture)

Use a high-quality launch monitor (TrackMan, GCQuad, FlightScope) and high-speed cameras in a climate-controlled hitting bay to remove environmental variability. Laboratory testing lets you isolate variables like loft, shaft flex, clubhead, and ball type.

- Run 20-30 shots per configuration for statistical reliability.

- Record mean and standard deviation for each metric.

- Test multiple golf balls across the same club settings to isolate ball effects like compression and spin.

On-Course Validation

Translate lab gains into real performance by testing on the course. Turf interaction from real lies, shot creativity, and wind conditions are difficult to reproduce indoors and are best assessed in the field.

- Play a representative sample of holes (drivable par-4s, long par-5s, approaches to elevated greens).

- Track scoring, GIR (greens in regulation), and proximity to hole using GPS or rangefinder logs.

- Compare dispersion and forgiveness with real-course lies and varying shot shapes.

Standardized Testing Protocol: Step-by-Step

- Set a Baseline: Measure the performance of your current clubs and ball (10-20 shots).

- Control the Swing: If possible, use a consistent human tester (same warm-up, same swing focus) or a golf robot for absolute repeatability.

- Change One Variable at a Time: Test shaft, loft, ball model, or clubhead one at a time to isolate effects.

- Collect Enough Data: 20+ shots per condition is a good balance between time and statistical meaning for human testing. Robot testing can use 50-200 shots for tighter confidence intervals.

- Analyze: Look at mean, variance, and outlier behavior. Consider t-tests when comparing two setups.

- Validate on Course: Use 9-18 hole testing to confirm lab-predicted advantages.
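For the "Analyze" step, a minimal sketch of summarizing each setup and comparing two setups with Welch's t-test (which does not assume equal variances) is shown below; the carry values and function names are hypothetical. The code returns the mean difference, t statistic, and Welch-Satterthwaite degrees of freedom; if SciPy is available, `scipy.stats.ttest_ind(a, b, equal_var=False)` computes the same statistic and a p-value. This treats one tester's shots as independent; comparisons across several players are better handled with the mixed-effects models described in the Q&A above.

```python
import math
from statistics import mean, stdev, variance


def summarize(shots: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation for one configuration."""
    return mean(shots), stdev(shots)


def welch_t(a: list[float], b: list[float]) -> tuple[float, float, float]:
    """Return (mean difference a - b, Welch t statistic, Welch-Satterthwaite df)."""
    va, vb = variance(a) / len(a), variance(b) / len(b)  # squared standard errors
    diff = mean(a) - mean(b)
    t = diff / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return diff, t, df


if __name__ == "__main__":
    # Hypothetical carry distances (yards) for two driver setups.
    setup_a = [244.0, 247.0, 241.0, 250.0, 246.0, 243.0, 249.0, 245.0]
    setup_b = [238.0, 242.0, 236.0, 245.0, 240.0, 239.0, 243.0, 237.0]
    print(summarize(setup_a), summarize(setup_b))
    print(welch_t(setup_a, setup_b))
```

Because the standard error shrinks with the square root of the shot count, the larger robot samples mentioned above narrow confidence intervals accordingly.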

Tools and Tech for Rigorous Evaluation

- Launch monitors: TrackMan, GCQuad, FlightScope – measure carry, spin, launch, and ball/club speed.

- High-speed cameras and impact tape – visualize contact location on the clubface.

- Pressure mats and force plates – evaluate balance and weight shift during the swing.

- Golf robots (when available) – provide repeatability for pure equipment comparisons.

- Shot tracking apps & GPS loggers – capture on-course results and dispersion.

How to Structure a Club-Fitting Session for Reliable Results

Club fitting is where equipment meets the player. Make the session methodical:

- Pre-fit questionnaire: swing speed, typical ball flight, common misses, preferred feel.

- Static measurements: hand size, lie angle, wrist-to-floor, posture.

- Dynamic testing: launch monitor session with incremental changes in loft, shaft flex, and length.

- Ball testing: try at least 3 ball models (low-spin, mid-spin, high-spin) to see driver-to-wedge tradeoffs.

- On-course mini-validation: hit a few approach shots into a target green and count successful shots.

Ball Testing: Distance vs. Short-Game Control

Golf balls introduce trade-offs. A low-compression, low-spin ball may add driver distance but reduce wedge spin. Rigorous ball testing follows the same protocol:

- Test with the same club in the same launch monitor session.

- Measure driver ball speed, launch, spin, and carry.

- Measure wedge spin and stopping distance on a consistent green.

| Golfer Type | Driver Target Launch | Driver Spin (rpm) | Recommended Focus |
|---|---|---|---|
| Beginner / High Handicap | 10-12° | 2,800-3,200 | Forgiveness & launch |
| Mid Handicap | 11-13° | 2,400-2,800 | Balance spin & distance |
| Low Handicap / Better | 10-12° | 1,800-2,400 | Workability & low spin |
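To check a player's averaged launch monitor numbers against the table, the windows can be encoded as a simple lookup. This is an illustrative sketch: the dictionary keys and function name are hypothetical shorthand for the table's golfer types, and the windows are general guidance rather than personalized fitting targets.

```python
# Driver target windows from the table: (launch min, launch max, spin min, spin max).
# Keys are hypothetical shorthand for the table's golfer types.
DRIVER_TARGETS = {
    "high_handicap": (10.0, 12.0, 2800, 3200),
    "mid_handicap": (11.0, 13.0, 2400, 2800),
    "low_handicap": (10.0, 12.0, 1800, 2400),
}


def check_driver_window(golfer_type: str, launch_deg: float, spin_rpm: float) -> dict[str, bool]:
    """Report whether mean launch (degrees) and spin (rpm) fall inside the target window."""
    launch_lo, launch_hi, spin_lo, spin_hi = DRIVER_TARGETS[golfer_type]
    return {
        "launch_in_window": launch_lo <= launch_deg <= launch_hi,
        "spin_in_window": spin_lo <= spin_rpm <= spin_hi,
    }


if __name__ == "__main__":
    # Hypothetical session averages for a mid-handicap player.
    print(check_driver_window("mid_handicap", 12.0, 3100))
```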

Analyzing Results: What to Look For

- Meaningful distance gains: look for consistent increases in carry and total distance without sacrificing dispersion.

- Smash factor improvements: an increase suggests better energy transfer, often due to improved contact or face technology.

- Spin window: A wider “launch + spin” window indicates more forgiveness across strike locations.

- Spin consistency for wedges: Higher and more consistent spin is preferable for approach stopping power.

- Variance reduction: Lower standard deviation across shots typically translates to better on-course scoring.

Practical Tips to Improve Test Reliability

- Warm-up consistently before any test to reduce swing variability.

- Use the same tee height, ball alignment and stance for all driver shots.

- Record environmental factors (temperature, altitude, humidity) – altitude increases carry; cold reduces ball speed.

- Rotate the shot order to avoid fatigue bias (e.g., alternate clubs if testing multiple clubs).

- Log every shot with notes: mishit, turf condition, wind gusts, target focus.

Case Study: Fitting a Mid-Handicap Golfer (Realistic Example)

Scenario: A 12-handicap player with 95 mph driver speed wanted more carry and tighter dispersion.

- Baseline (10 shots): Average clubhead speed 95 mph, ball speed 135 mph, carry 235 yards, average spin 3,100 rpm.

- Intervention: Tested three shaft flexes (regular, stiff, hybrid) and two driver heads with higher MOI.

- Best setup (stiff shaft + high-MOI head): Avg clubhead speed 96 mph, ball speed 137 mph, carry 245 yards, spin 2,800 rpm, dispersion reduced by 12%.

- On-course validation (9 holes): Scoring improved by two shots compared to baseline round; fairways hit increased.

Lesson: A structured fitting that targets launch angle and reduces excess spin produced measurable and repeatable improvements.

First-Hand Experience: Common Outcomes from Rigorous Testing

From working with players and fitters, consistent themes emerge:

- Many golfers gain 5-15 yards of carry when launch and spin are optimized, not just when buying the newest driver.

- Shaft selection often matters more than driver head shape for dispersion and feel.

- Switching ball models can flip performance priorities: distance-first balls often reduce wedge spin.

- Robust testing often reveals that perceived improvements from new gear are partly placebo; data separates feeling from fact.

Common Pitfalls to Avoid

- Relying on a single shot or a small sample – outliers can mislead your decision.

- Testing different balls between clubs - always control the ball when isolating a club variable.

- Ignoring feel and confidence – a marginal data gain that undermines confidence on-course may backfire.

- Not validating on-course – lab numbers are necessary but insufficient for improving scores.

Quick Checklist for a Rigorous Equipment Test

- Same tester, consistent warm-up

- Controlled environment (or logged conditions)

- High-quality launch monitor and impact diagnostics

- 20+ shots per configuration (or more if possible)

- One variable changed at a time

- Statistical summary: mean, SD, and clear winner

- On-course validation rounds

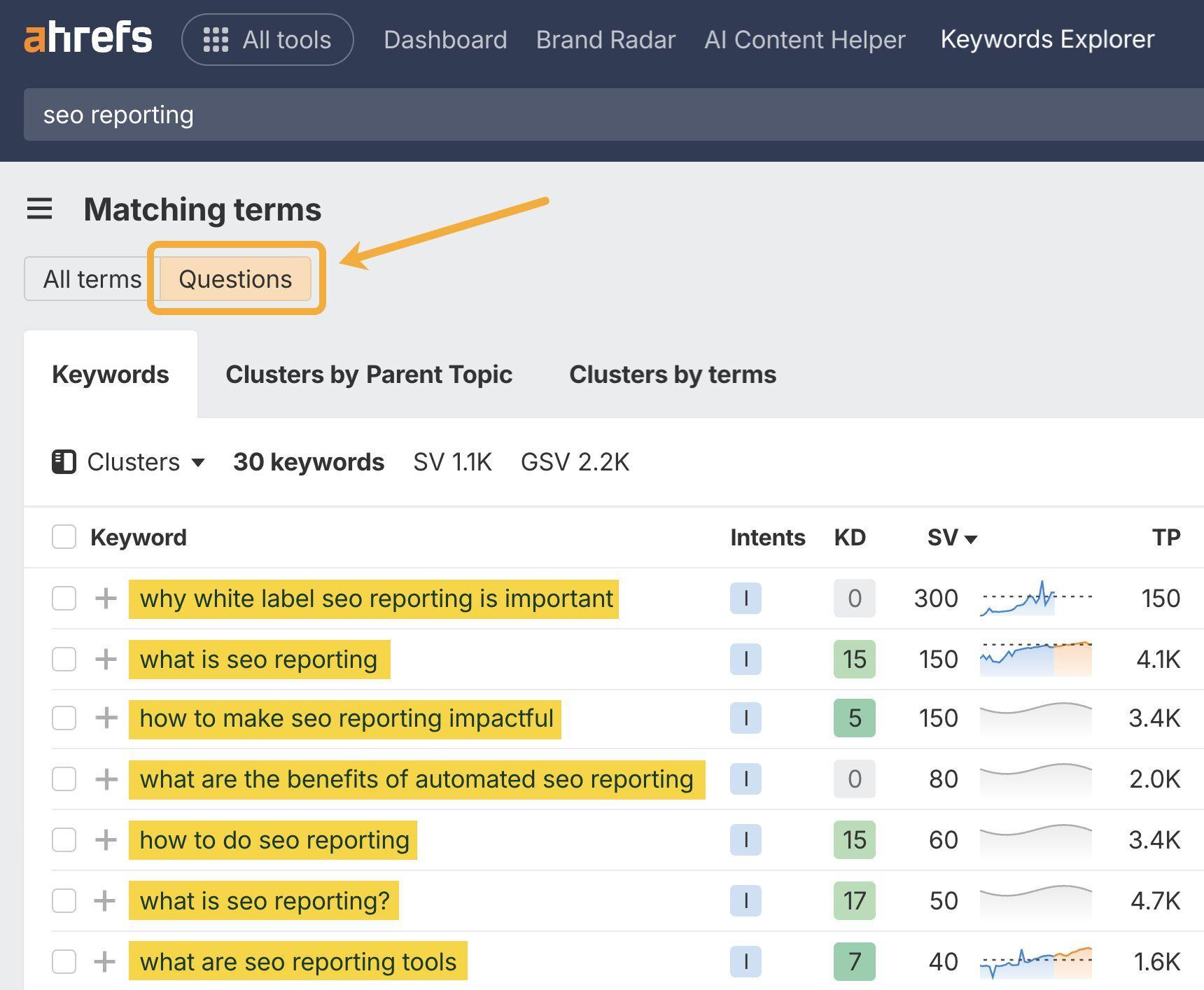

SEO-Focused Content Notes (for editors)

This article naturally includes primary golf keywords such as “golf equipment,” “golf clubs,” “golf balls,” “launch monitor,” “club fitting,” “swing speed,” “spin rate,” “launch angle,” and “forgiveness.” To improve search visibility further:

- Use descriptive alt text for images (e.g., “launch monitor measuring club and ball metrics”).

- Create internal links to pages on club fitting services, launch monitor reviews, and ball performance tests.

- Add an FAQ block addressing common buyer questions (e.g., “How many shots should I test?” “Which launch monitor is best?”).

- Keep headings keyword-rich and use schema for product and review markup where applicable.

Suggested FAQ Snippets

- How many shots should I hit when testing a driver? Aim for 20+ shots to balance time and statistical confidence.

- Should I use a robot or a human for testing? Robots offer repeatability; humans provide real-world relevance. Combine both if you can.

- Which metric matters most for distance? Ball speed and launch angle are primary drivers; spin rate and smash factor refine results.
